33 Seven Fallacies of Highly-Human Thinkers

Many sources have identified a host of fallacies we are prone to commit, but much of the discussion stems from a highly influential book, Thinking, Fast and Slow, by Daniel Kahneman. Kahneman offers his own list of the errors we are prone to make. The following list overlaps somewhat with his, but it also includes other fallacies that seem to me very common and more relevant to this introduction to epistemology.

1. Anchoring. The thing we learn first often has an overly strong influence on the rest of what we learn, and we decide what to accept or reject on the basis of that first thing. For example, perhaps we learn from Grandpa that the candy store charges too much, and thereafter we insist that this is true. Every bit of contrary evidence that comes in (“But the chocolate bars are, in fact, cheaper than anywhere else!”) is brushed aside as a fluke, or as a ploy by the candy store to lure in more customers and then overcharge them for other items. But what reason or evidence do we have for believing that the candy store is so devious? Really, it is just that we first learned one thing from Grandpa, and then we stuck with it. Our Storymaker makes the first thing a crucial element in the story and uses it as a criterion for deciding what else to add to the stories we make. But of course, the first thing we hear or learn can easily be unreliable (even if it comes from Grandpa!) and is not entitled to this kind of authority.

2. Confirmation bias. We seem to be wired to look greedily for evidence that supports whatever we already believe and to ignore any evidence that suggests otherwise. But this is, if anything, the opposite of what we would want to do if we wanted to be sure that what we believe is true. If we tried to follow something like the scientific method in forming our own beliefs, we would instead look for good evidence that what we believe is false. If we found none, we could tentatively hold on to our belief until further evidence came in. This bias toward confirming our beliefs seems to result from the way our Checker interacts with our Guesser: the Checker looks to see whether a guess is correct but does not look for evidence against it.

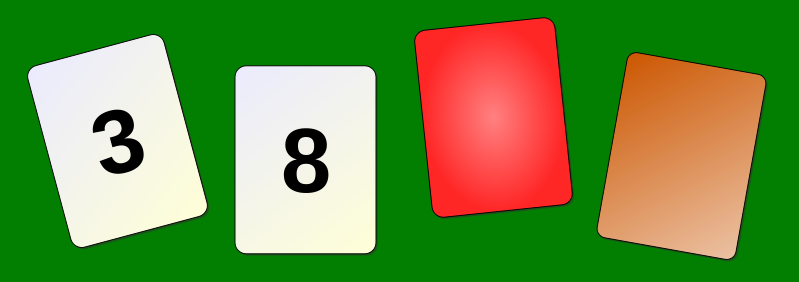

One illustration of our inclination toward confirmation bias is the “Wason selection task.” We are shown the cards in the diagram below. Each card has a color on one side and a number on the other side. We are asked to determine whether the following rule is true: every card with an even number on one side is red on the other side. To determine whether this rule is true, we are allowed to turn over only two cards. Which two cards should we turn over?

Most people immediately think of turning over the “8” card and the red card, probably because the rule combines even numbers with red cards, so we want to see whether that connection holds. But in fact, the only way to test the rule is to turn over the “8” card and the brown card. The red card will not tell us anything, because the rule says nothing about odd numbers: an odd number on the back of the red card would be perfectly consistent with the rule. The rule says only that if a card has an even number on one side, then there is red on the other side. So, to test the rule, we need to check that the “8” card is red on the back, and we had better make sure the brown card does not have an even number on its other side.

It is hard for us to think this way because we are inclined to confirm a claim rather than to try to disprove it or look for contrary evidence. We look for the “even + red” combination and do not think through what we would have to see in order for the rule to be proven false.
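The reasoning behind the correct answer can be made mechanical: a card is worth turning over only if some possible hidden side would break the rule. The Python sketch below is a minimal illustration under stated assumptions, not part of the original task. The “3” card is hypothetical (the text names only the “8”, red, and brown cards), and hidden numbers are assumed to range from 1 to 9.

```python
"""Which cards in a Wason-style selection task could falsify the rule
"every card with an even number on one side is red on the other side"?

Assumptions (for illustration only): the visible faces are "3", "8",
"red", and "brown"; the "3" card is hypothetical. Hidden sides are
limited to the two colors and the digits 1-9.
"""

COLORS = ["red", "brown"]
NUMBERS = list(range(1, 10))


def violates_rule(number: int, color: str) -> bool:
    # The rule is broken only by an even number paired with a non-red color.
    return number % 2 == 0 and color != "red"


def can_falsify(visible: str) -> bool:
    """Return True if some possible hidden side would break the rule."""
    if visible.isdigit():
        # Visible side is a number, so the hidden side is a color.
        number = int(visible)
        return any(violates_rule(number, color) for color in COLORS)
    # Visible side is a color, so the hidden side is a number.
    return any(violates_rule(number, visible) for number in NUMBERS)


visible_faces = ["3", "8", "red", "brown"]
worth_turning = [face for face in visible_faces if can_falsify(face)]
print(worth_turning)  # prints ['8', 'brown']
```

Only the “8” and brown cards can possibly come out wrong, which matches the answer given above. The red card can never falsify the rule, because no number on its back breaks it; confirming cards like this one feel informative but cannot settle anything.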

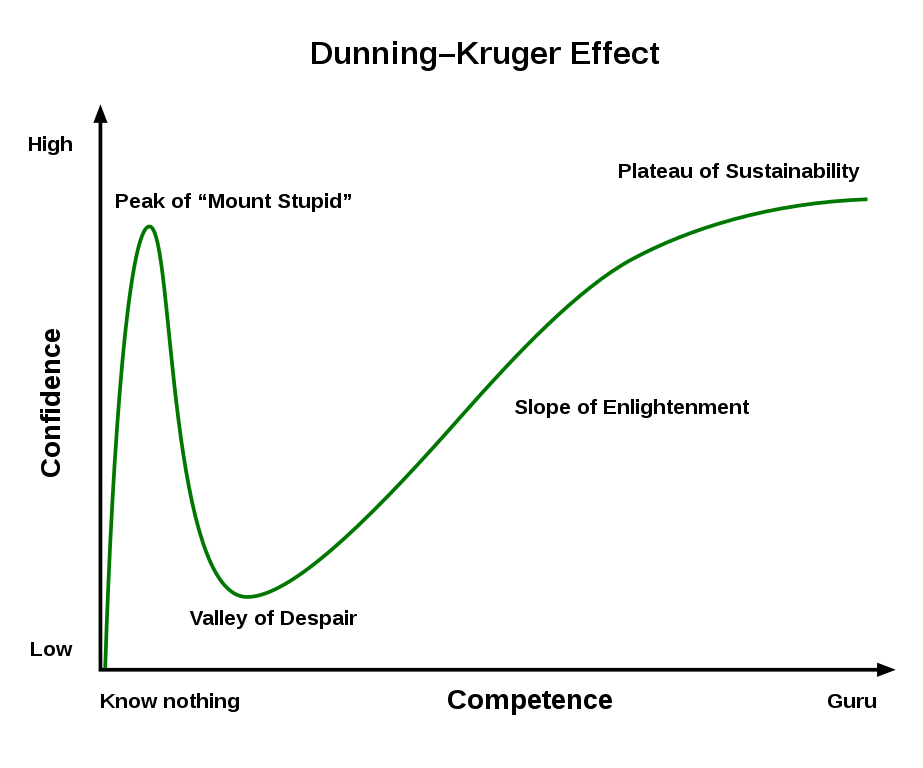

3. Dunning-Kruger effect. We tend to be more confident about our own expertise the less we know about something. As the philosopher Bertrand Russell said, “The whole problem with the world is that fools and fanatics are always so certain of themselves, but wiser people so full of doubts.” As you might imagine just from its name, the Dunning-Kruger effect has been studied in research settings by psychologists and has been put in the form of a graph that shows the relation between how much people know about something and how confident they are about their knowledge:

So, people who know next to nothing about a subject are very confident in their beliefs about it. Then, with a little more knowledge, people realize that they know very little, and gradually, as they learn more and more, they become more “sustainably” confident in their knowledge.

The Dunning-Kruger effect is due to both the Guesser and the Storymaker in our model. If a topic comes up about which I know very little, my Guesser will go to work making guesses about the topic. My Checker has nothing to contribute, since the topic is not about my immediate surroundings. My Storymaker does not have much to offer since (again) this is a topic about which I know very little. So, the guesses I make get a “free pass,” particularly if they happen to cohere nicely with somewhat-related beliefs I already have. No component of my cognitive system offers any resistance, and so I feel very confident of my guesses, as if I were an expert. But in this case, my so-called knowledge really consists only in my not knowing any better.

4. In-group bias. We give greater weight to the experiences and reports of those who belong to our groups. The people in our groups are friends, family members, or co-workers whom we know and trust, and it is hard for us not to believe them. So, for example, I might read study after study showing that vaccinations prevent disease, yet the fact that my mother’s second cousin became extremely sick after receiving a vaccination when she was a little girl may outweigh all of the evidence from those studies, and my entire family may be set against vaccinations as a result. Or, for another example, if I see on social media that all of my friends seem to share a political view, it will be difficult for me not to want to share that view with them. I trust them; they are like me; how can I disagree with them?

But of course anyone can be wrong, including our friends and family. There are excellent reasons for trusting family and friends, but such strong trust becomes a liability when what our group says is at odds with what stronger evidence suggests. In terms of our model, in-group bias seems closely related to Anchoring. My knowledge of what my group believes does not have to come to me first, but I give it a stronger voice or greater authority than other beliefs or considerations that come my way, simply because it comes from my group. My Storymaker regards it as a “vital element” of the story because my group, and my belonging to it, is a vital element of my story.

5. Out-group anti-bias. This comes along with in-group bias but is important enough to merit special attention. Just as we are likely to place too much trust in those who belong to our group, we are likely to place too little trust in those outside it. This is evident in the level of hostility on the internet toward people who are not in our groups. Anything that supports an outside group tends to be seen as a threat to our group. The reasoning and evidence that support the views of an outside group are rarely considered impartially and honestly, just as support for the views of our own group is seldom subjected to critical assessment. Both in-group bias and out-group anti-bias are products of an “us vs. them” mentality, which skews our reasoning and ultimately puts us all in a weaker position with regard to knowledge. Sorting ourselves into epistemic groups in this way leaves us all more ignorant.

6. Availability heuristic. It is difficult for us to continuously process all the information that comes our way. One shortcut is to make a “snap” judgment that what we are experiencing fits some model or template (or heuristic) that we already have available. An obvious example is employing a stereotype, that is, using a ready-made list of characteristics to make judgments about an individual on the basis of their race, sex, or ethnicity. Unfortunately, our culture provides a very handy set of such heuristics for judging people, and they allow us to draw false conclusions quickly and easily.

In our model, this is the Storymaker’s fault. Rather than take the time and effort to compose an accurate story from the available information, the Storymaker slaps on some handy story that is available and moves on to the next task.

7. Barnum effect. This effect is named after the great American huckster P. T. Barnum. Barnum realized that, in trying to deceive someone, you can count on the other person to meet you halfway. In some cases, we join in the effort to deceive ourselves—perhaps because we are being sold a flattering story or because the misinformation being presented to us fits so neatly with preconceived opinions we hold or allows us to draw conclusions we are already eager to draw. On some topics, we really don’t mind being fooled.

This is seen most clearly in the business of telling fortunes or writing horoscopes. The fortune teller only needs to provide a vague outline, and most people will fill in the details for themselves. I can demonstrate this to you by showing off my own psychic powers: I know you, the person reading this book right now. You feel a strong need for other people to like and admire you. You have a great deal of unused capacity which you have not yet turned to your advantage. You pride yourself as an independent thinker and do not accept others’ statements without satisfactory proof. Yet, at times, you have serious doubts as to whether you have made the right decision or done the right thing.

Are my psychic powers not astounding?! But of course those last four sentences apply to anyone and everyone. If you were thinking about them as you read them, you probably thought of features in your life that fit the description. That is natural since, as we try to understand anything, we think about how the new information fits with what we already know. If the “new” information is about us, and if it is suitably general and vague, we will have no difficulty in thinking of ways in which the information fits with our knowledge of ourselves—particularly when the information sounds flattering—and we are deceived into believing that someone else has uncanny knowledge of our own private lives.

But the fallacy does not happen only in the presence of fortune tellers. Our Guesser and Storymaker love to fill in the blanks in any explanation. If someone provides us with a partial explanation, we will automatically start to fill in the gaps with whatever guesses seem to us plausible or seem to fit our other beliefs or suspicions. But this means that significant parts of the story we end up with have been invented by us and may have no real connection to the truth.

Media Attributions

- Figure 8.2 Huenemann © Life of Riley is licensed under a CC BY-SA (Attribution ShareAlike) license

- Figure 8.3 is licensed under a Public Domain license