The John and Marcia Price College of Engineering

10 Medical Imaging Using Near-Infrared Waves

Luis Lorenzo Delos Reyes

Faculty Mentor: Rajesh Menon (Electrical and Computer Engineering, University of Utah)

Abstract

The growing demand for non-invasive treatments in medicine, together with the rise of COVID-19 and the widespread adoption of contactless technologies that accompanied it, makes infrared technologies and therapy potentially beneficial. Infrared light provides a contactless, non-invasive way of measuring many health biometrics, and its properties and wavelength also make it well suited to some imaging applications. Infrared light is commonly used in photoplethysmography (PPG), seen in most hospitals and clinics, where it measures blood attributes and changes in blood volume by shining infrared light into a patient’s finger and observing changes in the amount of light detected by a sensor on the other side of the finger. Infrared waves also find extensive use in thermal imaging cameras, which use lenses to focus infrared energy onto a sensor, and, more recently, in vein-finding devices, which image vein networks to ease the administration of shots and blood extraction. The proposed project aims to explore ways in which infrared use can be expanded to more applications, such as near-infrared imaging of specific vital points to help diagnose blood clots and diseases related to blood clots. One of the many diseases whose symptoms include blood clots in the vessels supplying the brain is COVID-19.

Index Terms— Near-Infrared Waves, Raspberry Pi, Image Segmentation, Signal Processing, Vein-Finding, Hemoglobin

I. Introduction

Compared with other forms of imaging such as ultrasound and X-rays, infrared might not provide as much detail or propagate as freely within the body, but it stands out in its accessibility and convenience, on top of its potential to image blood flow in veins and arteries. In recent years there has also been a surge of interest in near-infrared (NIR) light, with some applications going as far as providing cerebral oxygen saturation data and other cerebral measurements [7], but most of these are still relatively new and very costly. Most NIR vein finders image veins and blood patterns to a depth of about 10 to 15 mm.[2] One reason NIR is promising for blood-related applications comes down to the properties of blood, specifically hemoglobin: when NIR light is directed toward a vein, it is absorbed by the blood, while the tissue surrounding the vein reflects the light back to a sensor, where signal processing maps out and projects where the veins are located.[3]

Recent research and developments may have increased the capabilities of NIR light and driven down the costs associated with utilizing this technology, but more can be done to improve NIR imaging. Lenses and non-imaging optics may prove useful for concentrating and transferring NIR light into the desired imaging location.[6] Before making these manipulations, however, the right light source must be chosen; a study by Garcia and Horche identified a wavelength between 585 nm and 670 nm as optimal for vein visualization.[4] After the reflected light has been collected, the received signal can potentially be improved through post-processing, similar to ultrasound signal conditioning, to improve the accuracy and resolution of the image.

An improved NIR imaging system could also bring new benefits for infrared technology. Expanding its capabilities could help in diagnosing more blood-related diseases, especially those involving blood clots, potentially including COVID-19, which can be identified through blood clots present in the arteries surrounding the brain.[1] Among the arteries near the brain are the basilar and facial arteries, vital arteries whose abnormalities could lead to various disorders within the body and the nervous system.[5] These arteries were singled out because their location makes it possible for NIR light to propagate through and analyze the area where they reside, the area surrounding the nose, which is also an opening where bone does not act as an obstruction.

II. Technical Introduction

A. Near-Infrared Waves

Near-infrared waves occupy a segment of the electromagnetic spectrum lying just beyond the visible spectrum of light. These waves, with wavelengths ranging from approximately 700 nanometers (nm) to 1,400 nm, possess unique properties and find diverse applications in various fields such as astronomy, medicine, agriculture, and more. In this project, we will explore the characteristics, uses, and significance of near-infrared waves.[8]

Near-infrared waves, often abbreviated as NIR, have wavelengths that are slightly longer than those of visible light. This means they are not visible to the human eye, but they share some common properties with both visible light and longer-wavelength infrared radiation. NIR waves are characterized by their ability to penetrate certain materials and interact with the molecular vibrations of substances.

One of the key properties of NIR waves is their ability to interact with the chemical composition of objects. When NIR radiation interacts with a substance, it causes the molecules within that substance to vibrate at specific frequencies. These vibrations produce a unique absorption spectrum that can be measured and analyzed. This property forms the basis for many applications of NIR waves.[9]

NIR spectroscopy also plays a crucial role in the pharmaceutical industry. It is used for quality control and the identification of chemical compounds in drugs. The technique is rapid, non-destructive, and highly accurate, making it an essential tool for ensuring the safety and efficacy of pharmaceutical products.

In the field of medicine, NIR waves find application in non-invasive imaging techniques like near-infrared spectroscopy (NIRS). NIRS is used to measure oxygen levels in tissues and detect anomalies such as tumors. It has become an indispensable tool for diagnosing medical conditions and monitoring the health of patients. Scholkmann et al. (2014) discuss these applications in their article “A Review on Continuous Wave Functional Near-Infrared Spectroscopy and Imaging Instrumentation and Methodology.”[10]

B. Raspberry Pi

The Raspberry Pi is a small, affordable, and versatile single-board computer that has captured the imagination of hobbyists, educators, and innovators around the world. Introduced in 2012 by the Raspberry Pi Foundation, this credit-card-sized computer has had a profound impact on various fields, from education to DIY electronics and even professional applications. In this section, we will explore the history, features, applications, and significance of the Raspberry Pi. The Raspberry Pi’s appeal lies in its simplicity and affordability. Despite its diminutive size, it packs impressive features:

- Processing Power: Raspberry Pi models come with various processors, with the latest versions offering significant computing power. The Raspberry Pi 4, for instance, boasts a quad-core ARM Cortex-A72 CPU, making it capable of handling a wide range of tasks.

- Connectivity: Raspberry Pi boards offer multiple USB ports, HDMI outputs, audio jacks, and network connectivity options like Ethernet and Wi-Fi. This connectivity allows users to connect various peripherals and use the Raspberry Pi as a versatile computing platform.

- GPIO Pins: The Raspberry Pi includes a set of General-Purpose Input/Output (GPIO) pins, which enable users to interface with external hardware and electronics, making it a favorite for DIY electronics projects.

- Operating Systems: Raspberry Pi supports various operating systems, including the Raspberry Pi OS (formerly Raspbian), Linux distributions, and even Windows 10 IoT Core, providing flexibility for different applications.

- Community and Accessories: An active and passionate community has formed around the Raspberry Pi, creating a wealth of resources, tutorials, and accessories. From camera modules to touchscreen displays, users can expand the capabilities of their Raspberry Pi to suit their needs.

Applications of the Raspberry Pi: The Raspberry Pi has found applications in a multitude of domains:

- Education: Its original purpose remains a core focus. Raspberry Pi computers are used in schools and educational programs worldwide to teach programming, electronics, and computer science.

- DIY Electronics: Hobbyists and makers use Raspberry Pi for projects ranging from home automation and robotics to retro gaming consoles and media centers.

- Professional Prototyping: It serves as a cost-effective tool for prototyping and proof-of-concept development in the professional world. Engineers and researchers use it to create innovative solutions quickly and inexpensively.

- Server and Network Applications: Raspberry Pi is employed as a low-power server for various purposes, including web hosting, file storage, and even as a network-attached storage (NAS) device.

- IoT (Internet of Things): Its small form factor, GPIO pins, and connectivity options make it an ideal choice for IoT projects. It can be used to build smart home devices and sensors.

The Raspberry Pi’s significance lies in its democratization of computing and innovation. It has made computing accessible to a wider audience, breaking down barriers to entry into technology and fostering creativity. Students can learn programming and electronics without the need for expensive hardware, and professionals can rapidly prototype ideas without large budgets. [11]

As technology continues to evolve, the Raspberry Pi is likely to play a significant role in shaping the future of computing and innovation. Its community-driven development and continuous improvements in hardware and software ensure that it remains a relevant and powerful tool for years to come.

C. Image Segmentation and Signal Processing

Image segmentation is a fundamental technique in computer vision and image processing that plays a pivotal role in identifying and extracting meaningful regions or objects within an image. This process involves partitioning an image into multiple distinct segments, each representing a specific region of interest. In this section, we will delve into the principles, methods, applications, and significance of image segmentation. At its core, image segmentation aims to group pixels or regions in an image based on their visual characteristics. This involves distinguishing objects from the background, identifying boundaries between objects, and dividing the image into homogeneous regions. The primary principles guiding image segmentation include color, intensity, texture, and spatial proximity. A variety of techniques and algorithms are employed for image segmentation, each suited to different scenarios and challenges:

- Thresholding: This straightforward method involves selecting a threshold value and categorizing pixels as foreground or background based on their intensity or color values. It is simple yet effective for binary segmentation tasks.

- Region-based Segmentation: This approach groups pixels into regions by examining their similarity in terms of color, texture, or other features. Region growing and region splitting/merging are common techniques in this category.

- Edge Detection: Edge-based segmentation detects edges and contours in an image, using techniques like the Canny edge detector or the Sobel operator. Edges often correspond to object boundaries.

- Clustering Algorithms: K-means clustering and hierarchical clustering are used to segment images based on pixel similarity. These methods are versatile and can handle various types of data.

- Machine Learning: Deep learning methods, particularly convolutional neural networks (CNNs), have revolutionized image segmentation. U-Net, Mask R-CNN, and FCN (Fully Convolutional Networks) are popular architectures for semantic and instance segmentation.[12]
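To make the thresholding item in the list above concrete, the following is a minimal sketch and not the code used in this project. It is written in dependency-free Python so that it runs anywhere; in practice a library such as OpenCV or NumPy would normally be used. The threshold is chosen with Otsu's method, which is an assumption made for illustration (the text above only says that a threshold value is selected), and the function names `otsu_threshold` and `binarize_dark` and the tiny sample image are hypothetical.

```python
def otsu_threshold(pixels, levels=256):
    """Return the intensity that maximizes the between-class variance."""
    hist = [0] * levels
    for p in pixels:
        hist[p] += 1
    total = len(pixels)
    sum_all = sum(i * count for i, count in enumerate(hist))

    best_t, best_var = 0, -1.0
    n_dark = 0    # pixels at or below the candidate threshold
    sum_dark = 0  # sum of their intensities
    for t in range(levels):
        n_dark += hist[t]
        sum_dark += t * hist[t]
        n_bright = total - n_dark
        if n_dark == 0:
            continue          # no dark class yet
        if n_bright == 0:
            break             # every pixel is already in the dark class
        mean_dark = sum_dark / n_dark
        mean_bright = (sum_all - sum_dark) / n_bright
        between_var = n_dark * n_bright * (mean_dark - mean_bright) ** 2
        if between_var > best_var:
            best_var, best_t = between_var, t
    return best_t


def binarize_dark(image, threshold):
    """Mark pixels at or below the threshold as foreground (1)."""
    return [[1 if p <= threshold else 0 for p in row] for row in image]


# Hypothetical 3x4 grayscale patch: dark values stand in for a vein,
# which appears dark because blood absorbs the illumination.
image = [
    [200, 198, 60, 201],
    [199, 55, 58, 202],
    [197, 62, 200, 203],
]
flat = [p for row in image for p in row]
threshold = otsu_threshold(flat)
mask = binarize_dark(image, threshold)
for row in mask:
    print(row)
```

The foreground is taken to be the dark side of the threshold because the vessels of interest absorb light; for bright objects on a dark background the comparison would be reversed.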

Image segmentation finds applications across a wide range of fields:

- Medical Imaging: It is vital for detecting and analyzing anatomical structures and abnormalities in medical images, including MRI, CT scans, and X-rays.

- Autonomous Vehicles: In self-driving cars, image segmentation is crucial for identifying pedestrians, other vehicles, and road signs.

- Object Recognition and Tracking: In robotics and surveillance, image segmentation helps in identifying and tracking objects of interest.

- Satellite and Remote Sensing: Image segmentation assists in land cover classification, crop monitoring, and environmental analysis.

- Biomedical Imaging: It is used in cell and tissue analysis for tasks such as counting cells, identifying nuclei, and tracking cell movement.

- Natural Language Processing: In document processing, image segmentation is used to isolate text regions for optical character recognition (OCR).

Image segmentation is integral to understanding and extracting information from images in the era of big data and artificial intelligence. Its significance lies in its ability to simplify complex visual data, making it more manageable and interpretable. With the advent of deep learning techniques, image segmentation has seen significant advancements in accuracy and efficiency [13].

The future of image segmentation holds promise as it continues to evolve with advancements in computer vision and machine learning. Enhanced algorithms, real-time processing capabilities, and improved accuracy will expand its applications and make it even more integral in fields ranging from healthcare to autonomous systems.

III. Methodology

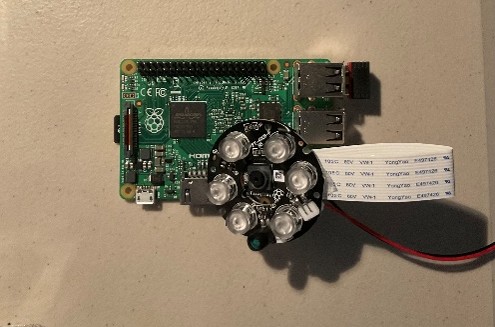

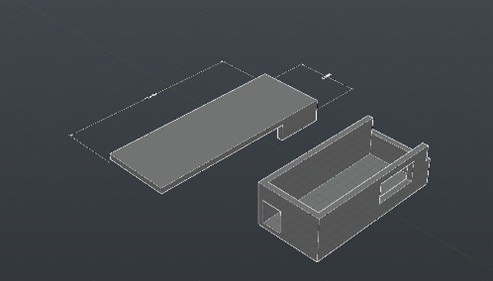

Now that a background has been established for the technologies related to the Near-Infrared vein finder, the development of the vein finder system consists of three main sections: the Near-Infrared array and sensor, the image segmentation and processing software, and the Pico projector that projects the image onto the desired area. All three work in conjunction with the Raspberry Pi, which acts as the main processor for the device. In this study, a Raspberry Pi 2 was used along with a Near-Infrared LED array and the NoIR sensor (Figure 1.a). This sensor was used because it has no infrared-cut filter, which makes it sensitive to light in the Near-Infrared spectrum. A 36800 mAh battery powers the device to ensure its portability and ease of use, and all components are housed inside a 3D printed enclosure that protects the entire system (Figure 1.b). A black material was also placed around the NIR array and the sensor to reduce unwanted reflected light, which could compromise the image.[14]

Figure 1.a. Raspberry Pi Microcontroller with NoIR sensor attachment.

Figure 1.b. 3D model of the Vein Finder Enclosure on AutoCAD.

The first consideration was to make sure that the camera was doing what it was intended to do: sensing the 850 nm Near-Infrared light from the Near-Infrared LED array after it is reflected by the tissue and absorbed by the hemoglobin flowing inside the veins. Originally, the plan was to use a regular camera sensor and modify it by removing its infrared filter, which would widen the range of wavelengths seen by the sensor to include those in the infrared spectrum. The OV2640 sensor was initially chosen for its affordability, at around $10.00, but after its infrared filter was removed and it was tested for the first time, the image quality turned out to be insufficient for accurate vein imaging. It was then decided that the best option would be a sensor specifically manufactured for sensing infrared wavelengths, which is why the NoIR camera by Adafruit was chosen as the main sensor. This sensor shows vast improvement over the initial sensor, clearly showing the location of veins in the wrist. Pictured below are the results from both sensors.

Figure 2.a. Image from the initial sensor used, the OV2640 with the infrared filter removed.

Figure 2.b. Image from the NoIR camera sensor

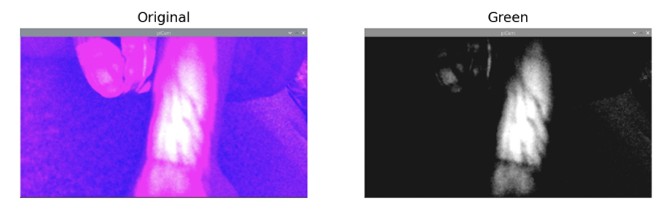

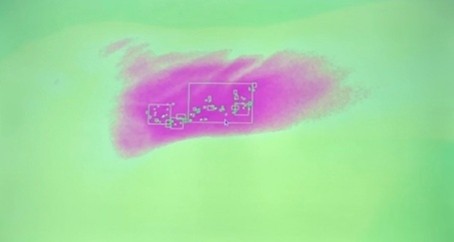

Now that a sensor with NIR sensing capabilities has been confirmed to work with the Raspberry Pi, the next logical step is to improve the image and make the veins more prominent. Many image segmentation techniques can help achieve this goal. For this research study, the image segmentation software was developed in Python because of its accessibility and extensive support. The first method used color detection to filter out all pixels in the image except those with a specific color value corresponding to the veins (Figure 3.a). Thresholding was then applied, which converts the image into a specific color space in which an area of interest can be chosen while the rest of the image is ignored (Figure 3.b). Tone curves were also explored in this research study. Tone curves allow easy manipulation of an image’s exposure, amount of light, and tone. This is very useful for making the veins in an image more prominent and, by removing unwanted intensities, for revealing detail that would not have been seen without tone mapping. Edge detection was also implemented by looking for sudden changes in pixel intensity throughout the image, which greatly improves the delineation of different sections of the image, such as where the veins start and stop, differentiating them from the rest of the arm. Implementing all of these functions results in a much improved version of the original image that allows the user to locate the veins far more accurately, but there is still more that can be done to improve this device’s capabilities.
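The tone curve and edge detection steps described above can be illustrated with a short sketch. This is not the project's code: it is dependency-free Python written for illustration, the power-law (gamma) curve is only one possible tone curve, the Sobel operator is assumed as the edge detector, and the function names `gamma_lut`, `apply_tone_curve`, and `sobel_magnitude` are hypothetical.

```python
def gamma_lut(gamma, levels=256):
    """Build a lookup table for a power-law tone curve.

    gamma > 1 darkens midtones, gamma < 1 brightens them;
    the end points (0 and levels - 1) are left unchanged.
    """
    top = levels - 1
    return [round(top * (i / top) ** gamma) for i in range(levels)]


def apply_tone_curve(image, lut):
    """Remap every pixel intensity through the lookup table."""
    return [[lut[p] for p in row] for row in image]


def sobel_magnitude(image):
    """Approximate gradient magnitude |Gx| + |Gy| with 3x3 Sobel kernels.

    Border pixels are left at 0 because the kernel does not fit there.
    """
    h, w = len(image), len(image[0])
    out = [[0] * w for _ in range(h)]
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            gx = (image[y - 1][x + 1] + 2 * image[y][x + 1] + image[y + 1][x + 1]
                  - image[y - 1][x - 1] - 2 * image[y][x - 1] - image[y + 1][x - 1])
            gy = (image[y + 1][x - 1] + 2 * image[y + 1][x] + image[y + 1][x + 1]
                  - image[y - 1][x - 1] - 2 * image[y - 1][x] - image[y - 1][x + 1])
            out[y][x] = abs(gx) + abs(gy)
    return out


# Hypothetical 4x4 patch with a sharp vertical boundary between a dark
# region (left) and a bright region (right).
step = [[10, 10, 200, 200] for _ in range(4)]
lut = gamma_lut(2.0)
toned = apply_tone_curve(step, lut)
edges = sobel_magnitude(step)
for row in edges:
    print(row)
```

A sudden change in intensity, such as the boundary in the sample patch, produces a large response, while a uniform region produces zero. Applying the tone curve before edge detection changes the contrast between regions and therefore the strength of the detected edges.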

Figure 3. Resulting image after image was converted to the green color space.

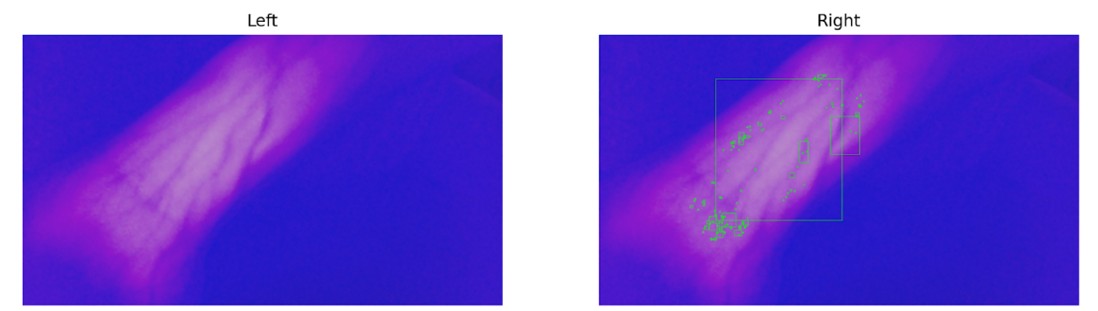

The next aspect of image segmentation used in this project was image annotation together with a machine learning algorithm in the code. First, for the image annotations, a contour analysis must be done to correctly determine bounding boxes around the masks produced during thresholding. Morphing is also applied to the image to improve the system’s ability to learn and delineate which structures should have an annotation assigned to them. Once the annotations had been implemented, unwanted boxes around areas that do not correspond to veins were filtered out. Finally, all that was left was to make sure that the image segmentation code worked with the Pico projector so that the image could be projected onto the desired area instead of just being shown as a live feed on a screen. After a few adjustments to the Raspberry Pi, mainly adding the file path of the image segmentation code to rc.local, the Vein Finding program starts immediately on boot when the Raspberry Pi is plugged into the Pico projector. A few adjustments were also made to the code so that the live video feed is projected in full screen, without title bars or any other obstruction.
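The annotation step, finding boxes around the thresholded masks and discarding boxes that are unlikely to be veins, can be sketched as follows. This is an illustrative stand-in and not the project's code: it labels 4-connected regions with a breadth-first search instead of a true contour analysis (with OpenCV, `cv2.findContours` and `cv2.boundingRect` would typically be used), and the area-based filter, its `min_area` value, and the names `bounding_boxes` and `keep_vein_like` are assumptions.

```python
from collections import deque


def bounding_boxes(mask):
    """Return (x_min, y_min, x_max, y_max, area) per 4-connected region of 1s."""
    h, w = len(mask), len(mask[0])
    seen = [[False] * w for _ in range(h)]
    boxes = []
    for y0 in range(h):
        for x0 in range(w):
            if not mask[y0][x0] or seen[y0][x0]:
                continue
            # Breadth-first search over one connected region.
            queue = deque([(x0, y0)])
            seen[y0][x0] = True
            x_min = x_max = x0
            y_min = y_max = y0
            area = 0
            while queue:
                x, y = queue.popleft()
                area += 1
                x_min, x_max = min(x_min, x), max(x_max, x)
                y_min, y_max = min(y_min, y), max(y_max, y)
                for nx, ny in ((x + 1, y), (x - 1, y), (x, y + 1), (x, y - 1)):
                    if (0 <= nx < w and 0 <= ny < h
                            and mask[ny][nx] and not seen[ny][nx]):
                        seen[ny][nx] = True
                        queue.append((nx, ny))
            boxes.append((x_min, y_min, x_max, y_max, area))
    return boxes


def keep_vein_like(boxes, min_area=3):
    """Drop regions too small to be a vein (hypothetical criterion)."""
    return [b for b in boxes if b[4] >= min_area]


# Hypothetical binary mask: one elongated region and one isolated speck.
mask = [
    [0, 1, 0, 0, 0],
    [0, 1, 0, 0, 1],
    [0, 1, 1, 0, 0],
    [0, 0, 0, 0, 0],
]
boxes = bounding_boxes(mask)
kept = keep_vein_like(boxes)
print(kept)
```

Filtering on area alone is the simplest choice; other criteria, such as the aspect ratio of the box, could be used to the same end.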

IV. Results

The Near-Infrared Vein Finder System proves to be very effective at helping locate the veins in a specific area. With the NIR sensor about 305 mm away from the arm or other desired area, the effective surface giving an accurate representation of where the veins are is about 533 mm². At this range, the NIR light penetrates to a depth of about 2.5 mm, which is enough to reflect the NIR light back to the sensor. From the results pictured below, this setup can image essential vessels such as the median cubital vein, which is the preferred vein that phlebotomists use to draw blood, and the radial artery. When it comes to machine learning and drawing annotations on the image, the system can accurately place annotations on most of the veins visible after image segmentation. Compared with similar devices on the market, this Vein Finder shows similar functionality and accurately delineates the same veins, for a fraction of the price.

Figure 4.a. Image with all the image segmentation techniques applied compared to the same image but with the annotations.

Figure 4.b. Live Image being Projected.

Figure 4.c. Image with the tone mapping applied.

V. Discussion and Conclusion

The image segmentation results of this research are very promising for NIR as a means of accurately locating veins, and they will only improve as more machine learning techniques are refined and integrated into the system. Promising as the results are, there is much to be said about the significance of this research and its implications.

A. A New Way of Imaging

The most noteworthy implication of this research is that it will advance the use of NIR in the medical field and will hopefully lead to more research in this specific area. This project has opened the gates in terms of what is possible with NIR when it is used in conjunction with various image segmentation techniques and machine learning. It was determined that, even with a limited budget, one can achieve results like those of vein finders that cost ten times more than the Vein Finder system built here using NIR and image segmentation.

Through the pairing of NIR technology with software-based image processing and segmentation, it is possible to unlock a wide range of new applications for this type of technology. Examples include imaging the cerebral arteries, which can affect the brain’s day-to-day function, especially for monitoring oxygen levels in those areas; observing abnormal tissues within a specified area; and, at a more intricate level, imaging facial veins, which would benefit dermatologists in their procedures. It is also worth mentioning that the use of NIR imaging, particularly in functional brain imaging, raises ethical questions about consent, data security, and potential risks associated with prolonged exposure to NIR radiation.

These applications are not limited to the medical field but have a lot of potential in other fields as well. In agriculture, NIR imaging has revolutionized farming practices. By analyzing the reflectance of near-infrared light from crops, it is possible to assess plant health, nutrient content, and moisture levels. This information aids farmers in optimizing crop management, reducing waste, and increasing overall agricultural productivity. The implications for global food security and sustainable agriculture are substantial.

NIR imaging also plays a vital role in environmental monitoring and remote sensing. Satellites equipped with NIR sensors can track changes in vegetation cover, analyze soil moisture levels, and assess the impact of climate change. By providing a bird’s-eye view of the Earth’s surface in the NIR spectrum, this technology aids in disaster management, biodiversity conservation, and land-use planning.

Astronomy is another field where NIR imaging has proven invaluable. Since near-infrared light can penetrate cosmic dust clouds, it allows astronomers to observe celestial objects that are obscured in visible light. This capability has led to the discovery of distant galaxies, the study of exoplanets, and the characterization of stellar atmospheres. NIR imaging telescopes have broadened our understanding of the universe and the existence of potentially habitable planets.

In the realm of security and surveillance, NIR imaging has significant implications. Law enforcement and military agencies use this technology for night vision and surveillance purposes. It enables enhanced situational awareness in low-light conditions, contributing to public safety and national security.

In conclusion, near-infrared imaging is a transformative technology with far-reaching implications in diverse fields. It enhances medical diagnostics, revolutionizes agriculture, advances our understanding of the universe, and contributes to environmental monitoring. However, its adoption also necessitates careful consideration of ethical, privacy, and safety concerns. As NIR imaging continues to evolve, its impact on society and the various sectors it touches will only become more profound, necessitating ongoing ethical and regulatory discussions to ensure its responsible and beneficial use.

B. Overlooked Complications and Future Work

There is also room for improvement in this project. One improvement is to alter the live image feed coming from the Pico projector. Because the projector is located about 2 inches away from the sensor, and the system projects the live feed directly onto the area where the veins are being located (that is, what the NIR sensor is picking up), this seemingly insignificant 2-inch gap means that the image is not projected exactly onto the desired area. This can be mitigated by altering the design of the system so that the projector and the sensor are as close as possible to each other, or by altering the projector software to shift the image to compensate for the gap. Another improvement lies in the annotations made on the image. The results show that the annotations are consistent for some veins but more sporadic, or even nonexistent, for the more obscure veins; this could potentially be improved by altering the morphing section of the image segmentation code. Finally, gathering a large dataset for the system to work on will streamline machine learning algorithms in the future, especially clustering, a type of algorithm that would greatly benefit this project by improving its machine learning capability.
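The software compensation for the projector offset mentioned above could look roughly like the sketch below. It is an assumption-laden illustration, not part of the project: the scale of 0.5 mm per projected pixel is a made-up value that would have to be measured for the real device, the shift is a pure horizontal translation, and the names `offset_in_pixels` and `shift_image` are hypothetical.

```python
def offset_in_pixels(offset_mm, mm_per_pixel):
    """Convert a physical sensor-to-projector gap into a whole-pixel shift."""
    return round(offset_mm / mm_per_pixel)


def shift_image(image, dx, dy=0, fill=0):
    """Translate an image by (dx, dy) pixels; vacated pixels are set to fill."""
    h, w = len(image), len(image[0])
    out = [[fill] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            nx, ny = x + dx, y + dy
            if 0 <= nx < w and 0 <= ny < h:
                out[ny][nx] = image[y][x]
    return out


# The 2-inch gap from the text, expressed in millimetres.
GAP_MM = 2 * 25.4
# Hypothetical scale of the projected image at the working distance.
MM_PER_PIXEL = 0.5

shift = offset_in_pixels(GAP_MM, MM_PER_PIXEL)
print(shift)

# Small demonstration of the translation on a 2x3 frame.
frame = [[1, 2, 3], [4, 5, 6]]
shifted = shift_image(frame, dx=1)
print(shifted)
```

A fixed translation of this kind is exact only at the distance for which the scale was measured; if the distance to the arm changes, the required shift changes with it, which is why moving the projector and sensor closer together is the more robust of the two remedies.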

Bibliography

1. P. Galiatsatos and R. Brodsky, “What Does COVID Do to Your Blood?,” Hopkins Medicine, 03-Mar-2022. [Online]. Available: https://www.hopkinsmedicine.org/health/conditions-and-diseases/coronavirus/what-does-covid-do-to-your-blood. [Accessed: 02-Feb-2023].

2. “Veinviewer is clinically proven to – carestream america,” VEINVIEWER FREQUENTLY ASKED QUESTIONS, 2013. [Online]. Available: https://www.carestreamamerica.com/wp-content/uploads/2019/12/Christie_VeinViewer_Vision2_Flyer.pdf. [Accessed: 02-Feb-2023].

3. “How does vein finder work?,” BLZ Tech, 2015. [Online]. Available: http://www.veinsight.com/en/how-does-vein-finder-work.html. [Accessed: 02-Feb-2023].

4. M. Garcia and P. R. Horche, “Light source optimizing in a biphotonic vein finder device: Experimental and theoretical analysis,” Results in Physics, 03-Nov-2018. [Online]. Available: https://www.sciencedirect.com/science/article/pii/S2211379718318874. [Accessed: 02-Feb-2023].

5. L. Welsh, J. Welsh, and B. Lewin, “Basilar artery and Vertigo,” SAGE Journals, 2016. [Online]. Available: https://journals.sagepub.com/doi/10.1177/000348940010900701. [Accessed: 02-Feb-2023].

6. W. Jia, R. Menon, and B. Sensale-Rodriguez, “Visible and near-infrared programmable multi-level diffractive lenses with phase change material Sb2S3,” Optica Publishing Group, 2022. [Online]. Available: https://opg.optica.org/oe/fulltext.cfm?uri=oe-30-5-6808&id=469497. [Accessed: 02-Feb-2023].

7. G. S. Umamaherwara and S. Bansal, “Neurological critical care,” Essentials of Neuroanesthesia, 31-Mar-2017. [Online]. Available: https://www.sciencedirect.com/science/article/pii/B9780128052990000348. [Accessed: 02-Feb-2023].

8. L. Sun, “The difference between light therapy and near Infrared therapy,” Sunlighten, https://www.sunlighten.com/blog/difference-light-therapy-near-infraredtherapy/#:~:text=Near%20infrared%20light%2C%20with%20wavelengths,tissue%20repair%2C%20and%20reduce%20inflammation. (accessed Oct. 26, 2023).

9. R. Osibanjo, R. Curtis, and Z. Lai, “Infrared Spectroscopy,” Chemistry LibreTexts, https://chem.libretexts.org/Bookshelves/Physical_and_Theoretical_Chemistry_Textbook_Maps/Supplemental_Modules_(Physical_and_Theoretical_Chemistry)/Spectroscopy/Vibrational_Spectroscopy/Infrared_Spectroscopy/Infrared_Spectroscopy (accessed Oct. 26, 2023).

10. F. Scholkmann, S. Kleiser, A. J. Metz, R. Zimmermann, J. Mata Pavia, U. Wolf, and M. Wolf, “A review on continuous wave functional near-infrared spectroscopy and imaging instrumentation and methodology,” NeuroImage, https://pubmed.ncbi.nlm.nih.gov/23684868/ (accessed Jun. 26, 2023).

11. “About Us,” Raspberry Pi, https://www.raspberrypi.com/about/ (accessed Oct. 26, 2023).

12. “Image segmentation detailed overview [updated 2023],” SuperAnnotate, https://www.superannotate.com/blog/image-segmentation-for-machine-learning (accessed Oct. 26, 2023).