35 Using Generative AI to Perform Stacked Evaluations of Educational Documents: Provoking Students to Think on Successively Higher Levels

Susan Codone

Abstract

This chapter describes the use of ChatGPT in a graduate class assignment and explores how AI can scaffold student work to promote successively higher levels of thinking. The assignment asked students to compose a “stacked evaluation” of school district technology plans, which included a rubric generated by ChatGPT, evaluation data generated by ChatGPT using the rubric criteria, and the students’ evaluation of both the technology plan and the ChatGPT evaluation. Student deliverables were more thorough than in previous semesters and included clear demarcation of AI-generated text and original writing. Because students asked ChatGPT to act in the persona of a school superintendent, their own writing took on a more formal tone as well, and using AI caused students to think on successively higher levels.

Keywords: Generative AI, evaluation, assessment, assignment, taxonomies

Introduction

Using AI in education is a complex endeavor. Applying and interpreting the results can take surprising turns, creating tension in the achievement of learning outcomes, construction of student deliverables, and preservation of the originality of student work. In a teaching and learning setting, any use of AI that provokes students to think on successively higher levels of Bloom’s Taxonomy can be a valuable addition to the instructional strategies used in a course. Two practical applications of generative AI are to summarize a document and to evaluate it according to specific criteria. This chapter describes the purposeful use of ChatGPT to do both in an assignment. Pedagogical lessons for in-class use of AI are discussed, particularly the use of an AI persona and its effect on writing, as well as the students’ level of thinking while using ChatGPT to complete the assignment. The primary assignment goal was to elevate students’ thinking to the highest levels of Bloom’s Taxonomy by activating synthesis and evaluative thinking.

The assignment required doctoral students in curriculum and instruction, most of whom were active K-12 teachers, to use ChatGPT to evaluate school district technology plans. School district technology plans are public documents that describe the technology used by schools in a district. Students were asked to generate evaluations both by using ChatGPT and by writing together in groups, stacking their evaluations together, and then crafting a comprehensive and synthesized final deliverable combining both. This chapter will also discuss how the professor used AI to scaffold the assignment with the students and how their work changed compared to that of students in previous courses. Overall, this chapter describes the assignment, its steps, the prompts used with ChatGPT, and the evaluative methodology used by the students.

Literature Review

Use of chatbots in higher education, particularly ChatGPT, is still in an exploratory stage as of this writing, although research suggests ChatGPT can be valuable for writing and enabling topical evaluations (Halaweh, 2023). Because users control the chatbot conversation, students can decide the conversation’s path and need to be equipped with competencies to manage effective inquiries (Dai et al., 2023). Metacognitive and self-regulation skills are essential so that students can evaluate their own progress and reflect on their interactions with ChatGPT (Dai et al., 2023). Some researchers are skeptical of ChatGPT’s ability to provide meaningful help. In a 2023 review of AI use in pathology education, researchers suggested that questions requiring critical thinking, reasoning, and interpretation may be beyond AI’s current capabilities (Sinha et al., 2023).

Higher education students engaged in class-specific work with guided reflection, as in the assignment discussed in this chapter, can use AI to help focus their efforts (Overono & Ditta, 2023). Getting started on an assignment can be difficult for students. ChatGPT can partly alleviate this difficulty by acting as a scaffolding tool to create an initial draft which students can then build upon (Lo, 2023). Researchers whose work was examined in a rapid review of the literature by Lo (2023) concluded that, given student use of AI, assessments in higher education should focus on the higher levels of Bloom’s Taxonomy. The assignment described in this chapter followed that recommendation by directing students to use AI as a scaffolding tool to help them activate higher levels of learning.

The assignment also directed students to prompt ChatGPT to adopt external, authority-based personas, which ultimately shaped their writing differently than expected. Multiple studies indicate that students, when provided with assignments that contextualize their writing for review by an external audience, may be more motivated to write at levels above their class persona (MacArthur, Graham, & Fitzgerald, 2015). This assignment required students to generate AI text from a prescribed persona of a school district superintendent, thus potentially changing the perceived audience of their writing from the teacher’s perspective to the external view of a professional expert.

Methods

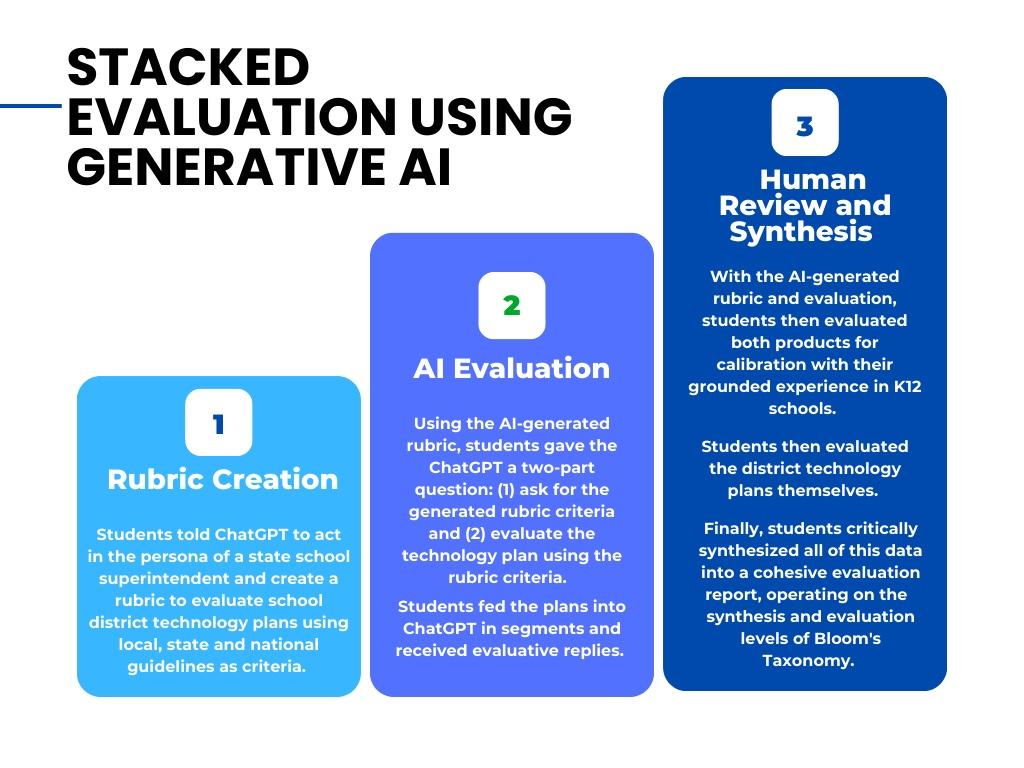

The school district technology plan evaluation assignment has been used in previous semesters. Prior to 2023, students used a rubric provided by the professor for the evaluation, and mastery was evidenced in the students’ final evaluation report, which consisted of only one layer: the students’ review. The assignment was revised in 2023 to incorporate generative AI, allowing students to complete a stacked evaluation of these public documents with the goal of increasing the level of evaluative thinking. The label “stacked evaluation” refers to a three-level evaluation. First, students prompted ChatGPT to act as a school district superintendent and create a rubric to evaluate district technology plans. Second, students pasted the technology plans and the generated rubric criteria into ChatGPT, prompting it for an evaluation. Finally, students assessed ChatGPT’s evaluation and then wrote their own, synthesizing all generated and original text into a final document. Students were given the prompts in Figure 1 as starter guidance. The stacked evaluation model is visualized in Figure 2, and the full assignment instructions are contained in Figure 3.

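Figure 1: ChatGPT Starter Assignment Prompts

| Prompt | Text |
| --- | --- |
| Assignment Prompt One | “You are the state superintendent of schools and you have a strong interest in the ways your schools use technology. Since every school district in your state publishes a technology plan, generate a rubric that you can use to evaluate these plans, considering the rural vs. urban location of your schools, broadband capabilities for students and families, socioeconomic status of students, technology training for teachers, the extent of technology integration, cost, feasibility, and technology rate of change.” |
| Assignment Prompt Two | “You are the state superintendent of schools. You would like to use a rubric to evaluate district technology plans that includes the following criteria: (list them). I will provide you with a portion of a district technology plan, and you will evaluate it using this rubric. Once you have evaluated the first portion, ask me to provide the next portion and then evaluate it using the rubric. Continue asking me for the next portion until I tell you that I’ve provided the entire plan.” |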

Figure 2: Stacked Evaluation using Generative AI

Figure 3: General Assignment Instructions (2023)

| General Assignment Instructions (2023 Assignment) |
| --- |
| This is a group assignment. To complete this assignment successfully, you must examine and evaluate a technology plan for a school, district or state and write a comprehensive final report. The primary goal is to gain an awareness and appreciation for technology plans, as well as spend time evaluating the validity, practicality and feasibility of a technology plan. You may use AI. |
| Step One: The first part of this assignment is to locate a technology plan of your choice. This can be from your school, district or state. This needs to be in writing. |
| Step Two: Evaluate the plan with reference to national standards. To begin, there are criteria that need to be considered: • How well does this plan align with the ISTE Essential Conditions and the Technology Literacy Standards from ITEEA? |
| Step Three: Use the provided technology plan’s layout as a framework for evaluating the various components of the technology plan. You may add as many elements to your final report as needed. |
| How to Use AI in this assignment: You may use AI to help generate some of your content, but I expect at least 75% of the writing to be original. The goal in using AI will be two-fold: (1) You may use AI to generate an evaluation rubric for the plan you are evaluating. Then, (2) you may feed sections of your plan into the AI chatbot along with the criteria and points of the AI-generated rubric and ask the AI to evaluate the technology plan for you. Then, once you have generated both a rubric and the text of the chatbot’s evaluation, you need to use your own cognitive power to judge the quality and accuracy of the chatbot’s evaluation, adding (in writing) your own human perspective as well. Begin with Prompt One: “You are the state superintendent of schools and you have a strong interest in the ways your schools use technology. Since every school district in your state publishes a technology plan, generate a rubric that you can use to evaluate these plans, considering the rural vs. urban location of your schools, broadband capabilities for students and families, socioeconomic status of students, technology training for teachers, the extent of technology integration, cost, feasibility, and technology rate of change.” Once the rubric is generated, take the criteria and points and ask the AI to evaluate portions of a technology plan. Here’s a sample prompt: The final document should include both your own writing and the chatbot’s generative writing, and also should include your own meta-evaluation of the chatbot’s work. Continue with Prompt Two (using the rubric criteria generated from Prompt One): “You are the state superintendent of schools. You would like to use a rubric to evaluate district technology plans that includes the following criteria: (list them). I will provide you with a portion of a district technology plan, and you will evaluate it using this rubric. Once you have evaluated the first portion, ask me to provide the next portion and then evaluate it using the rubric. Continue asking me for the next portion until I tell you that I’ve provided the entire plan.” |

Students used the instructor-provided prompt in ChatGPT to first establish a persona, then asked ChatGPT to create an evaluation rubric from the perspective of this persona. Because images prevented the entire plan document from being pasted into ChatGPT at once, student groups then fed segments of their selected school district technology plan into ChatGPT along with the rubric criteria, asking it to use the criteria it generated to evaluate the technology plan. Finally, the students assembled the rubric and ChatGPT’s evaluation of the plans into a cohesive document, and then performed their own evaluation of the technology plan and of ChatGPT’s assessment, evaluating the integrity of the AI’s assessment and synthesizing their human evaluation with that of the AI. Their final deliverable consisted of a stacked evaluation of these district technology plans, with the first level composed of the ChatGPT-generated rubric, the second of ChatGPT’s evaluation, and the third of the human evaluation of both the plans and the chatbot’s evaluation.
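The segment-by-segment workflow described above can be sketched in a few lines of Python. This is only an illustration, not the procedure the students followed: they worked by hand in the ChatGPT interface. The names `split_plan`, `build_prompt_two`, and `StackedEvaluation`, the character limit, and the `[AI-GENERATED]` and `[ORIGINAL]` labels are all assumptions introduced here. No chatbot API is called; responses are assumed to be pasted in manually.

```python
from dataclasses import dataclass, field
from typing import List

# Prompt Two from the assignment; {criteria} is filled with the rubric
# criteria that ChatGPT generated in response to Prompt One.
PROMPT_TWO = (
    "You are the state superintendent of schools. You would like to use a "
    "rubric to evaluate district technology plans that includes the following "
    "criteria: {criteria}. I will provide you with a portion of a district "
    "technology plan, and you will evaluate it using this rubric. Once you "
    "have evaluated the first portion, ask me to provide the next portion and "
    "then evaluate it using the rubric. Continue asking me for the next "
    "portion until I tell you that I've provided the entire plan."
)


def build_prompt_two(criteria: List[str]) -> str:
    """Insert the generated rubric criteria into Prompt Two."""
    return PROMPT_TWO.format(criteria="; ".join(criteria))


def split_plan(plan_text: str, max_chars: int = 3000) -> List[str]:
    """Pack whole paragraphs into segments of at most max_chars characters.

    max_chars is a hypothetical limit chosen for illustration. A single
    paragraph longer than max_chars is kept intact as its own segment.
    """
    paragraphs = [p.strip() for p in plan_text.split("\n\n") if p.strip()]
    segments: List[str] = []
    current = ""
    for paragraph in paragraphs:
        candidate = f"{current}\n\n{paragraph}" if current else paragraph
        if current and len(candidate) > max_chars:
            segments.append(current)  # close the full segment
            current = paragraph       # start a new one
        else:
            current = candidate
    if current:
        segments.append(current)
    return segments


@dataclass
class StackedEvaluation:
    """The three layers of the final deliverable."""
    rubric: str                                              # layer 1 (AI)
    ai_evaluations: List[str] = field(default_factory=list)  # layer 2 (AI)
    human_evaluation: str = ""                               # layer 3 (students)

    def assemble(self) -> str:
        """Join the layers, labeling AI text and original writing."""
        parts = ["[AI-GENERATED] Layer 1: Rubric", self.rubric]
        for number, text in enumerate(self.ai_evaluations, start=1):
            parts += [f"[AI-GENERATED] Layer 2: Evaluation of segment {number}", text]
        parts += ["[ORIGINAL] Layer 3: Human evaluation and meta-evaluation",
                  self.human_evaluation]
        return "\n\n".join(parts)


if __name__ == "__main__":
    plan = "Infrastructure overview.\n\nTeacher training goals.\n\nBudget."
    for segment in split_plan(plan, max_chars=50):
        print(repr(segment))
    print(build_prompt_two(["cost", "feasibility"]))
```

Splitting on paragraph boundaries keeps each pasted portion readable on its own, and the labels in `assemble` mirror the demarcation between AI-generated text and original writing that the assignment asked for.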

Findings and Analysis

Students reflected in an online discussion about their reactions to ChatGPT’s rubric creation and document evaluation. One student expressed surprise that ChatGPT returned different rubrics in response to the same prompt, listed in Figure 1. Students worked in groups in the classroom, and group leaders immediately reported differences: one rubric featured points-based criteria, another a qualitative range (excellent to poor), one featured only a way to match strengths and weaknesses, and one rubric did not contain any scorable methods at all, only criteria. The students combined their generated rubrics to choose the most applicable components and then refined their rubrics accordingly.

One student noted that their group had to carefully refine their chat prompts; while ChatGPT did what they asked it to do, it did not incorporate the nuances they professionally knew to be true as K-12 teachers. Another student mentioned ChatGPT’s repetitiveness and noted that their group had to “evaluate” its evaluation, while a different student described trying to write the final project to make sense to their colleagues (i.e., human readers), suggesting they operated at higher levels of thinking while doing so. A student labeled ChatGPT as a good writing partner for idea generation, but not as a scholarly tool. Idea generation is a form of scaffolding, consistent with how Lo (2023) positioned ChatGPT. Although one student said that ChatGPT saved time, a different student referenced time lost to human critique of ChatGPT’s output and minimized its performance, calling its evaluation “shiny and pretty but like a skeleton with no meat.” Multiple students affirmed that gathering information was quick, but evaluating the document with ChatGPT was more time-intensive than they expected. Finally, a student noted how slight changes in prompts resulted in different output and said this process has changed how she reflects upon what she is actually trying to ask or say in real time, which is also indicative of higher-order thought processes.

Student deliverables were longer than in previous semesters and included clear demarcation of AI-generated text and their own writing. Yet, there was an unexpected finding. Because students asked ChatGPT to act in the persona of a school superintendent, their own writing appeared to take on that perspective as well, indicating a carry-over effect from the AI persona to original student writing.

Discussion

Student reflections described higher-order thinking as they interpreted the assignment tasks, generated the ChatGPT evaluations, and then wrote their own human evaluations of the technology plans. Students affirmed the value of ChatGPT as a partner and a way to start the assignment but were divided on its ultimate usefulness beyond these two characteristics. Their reflections affirmed the observations of Halaweh (2023) and Lo (2023) that ChatGPT can be a good writing and scaffolding tool. The students also demonstrated in the classroom and on the final assignment deliverable that their own metacognition and self-regulation skills (Dai et al., 2023) were essential to allow them to critically assess and use ChatGPT’s output.

Additionally, the deliverables from 2023 differ in perspective from those of previous years, perhaps because the students assigned ChatGPT the persona of a school superintendent and this viewpoint persisted into their final document. Their writing indicates that they may have been influenced to write through the lens of a school superintendent rather than solely from their own perspectives, as suggested by the research about student writing when viewed by an external expert (MacArthur, Graham, & Fitzgerald, 2015). Once the students had empowered ChatGPT to think as a superintendent, their final deliverables reflected this perspective. For example, consider the change in student writing between an evaluation in 2021 (non-AI-assisted) and one in 2023 (AI-assisted) regarding adherence to standards by a district technology plan, shown in Figure 4.

Figure 4: Comparison of Student Writing 2021 and 2023

| 2021 Non-AI-Assisted Report | 2023 AI-Assisted Report |
| --- | --- |
| “ISTE standards were consulted for this plan. City Schools would do well to align their plan to the ISTE standards in order to show research-based strategies are being implemented.” | “The current plan does not explicitly connect to ISTE essential conditions, nor does it directly connect to the Technology Literacy standards from ITEAA. It does reference the goals of the National Education Technology Plan when providing equity and accessibility in its technology to enhance and assist in teaching and learning. The County plan also provides a robust, detailed account of the current infrastructure and sets some goals for the future. There is evidence that the technology can be effectively used for assessment and subsequent data analysis and visualization by teachers and leadership while also providing families and other stakeholders with such information in a user-friendly manner.” |

While arguments could be made that ChatGPT simply gave students more words to build from or made their writing more aspirational, the 2021 student writing appears to be grounded in the student’s school-based persona, while the 2023 student writing is more professional and authoritative and could be considered as portraying the persona of a school district superintendent. The response from 2021 was representative in length of other students’ responses in that class. While the response from 2023 is longer than the average response in 2021, it is also representative of the other responses from the 2023 semester. Marked differences between 2021 and 2023 are present, including the management-based language, sentence length, and most notably, the more specific statements regarding adherence to standards in 2023.

As an educator, I was surprised that these graduate students had not already created accounts with ChatGPT by the summer of 2023. While they were aware of developments in AI, they had not yet incorporated it into their academic or professional lives, making our class the first time they actively used it. Toward semester end, they almost uniformly expressed plans to use AI more frequently as a productivity job aid in their professional work, but they expressed concerns that they might inadvertently cheat if they used AI for their academic work. In addition, they articulated their worry that using ChatGPT would add time to work they could simply complete on their own.

A recent study based on the Technology Acceptance Model (TAM) surveyed students regarding their acceptance of AI and found that the perceived ease of use of AI significantly and positively influenced perceived usefulness, which in turn directly affected the students’ behavioral intentions (Kang, 2023). As described earlier, the graduate students who used AI to complete the school district technology plan evaluations described their hesitation toward AI and what they perceived as an excessive time commitment, which is in line with the study by Kang (2023) that established perceived ease of use and usefulness as directly impacting behavioral intentions.

Conclusion

Although it prompted some skepticism among the students, the use of ChatGPT in this 2023 technology plan evaluation assignment appears to have promoted higher levels of evaluative thinking. By assigning an administrative persona in the initial prompt, the students also appear to have elevated the perspective from which they wrote their evaluation papers. Based upon student reflections and the final deliverables, changes will be made in future years as AI continues to evolve. Students will be given more time for human analysis and evaluation of ChatGPT’s output. They will conduct extensive peer reviews of group work and interview a living expert in school district administration so that they can incorporate more of a human perspective into their evaluation to counterbalance the AI persona they assign. Students will continue to use prompt-based personas but will ask ChatGPT to speak from more than one professional role. Future research will compare these students’ initial reactions to ChatGPT with their later reactions as they progress in their graduate program, to determine whether their behavioral intentions toward AI use change as they perceive that AI tools become easier to use and more useful for their professional and academic needs. Overall, the purposeful and strategic use of ChatGPT in this assignment increased the quality and substantiveness of student work by pushing students to higher levels of thinking, which met the primary assignment goal.

Questions to Guide Reflection and Discussion

- Reflect on the effectiveness of using generative AI to create evaluation rubrics. How does this approach enhance or complicate the assessment process?

- Discuss the pedagogical implications of using AI-generated content to scaffold student learning and provoke higher-level thinking.

- How can educators ensure the accuracy and relevance of AI-generated evaluations in educational settings?

- Consider the ethical dimensions of employing AI in evaluating student work. What safeguards are necessary to maintain fairness and transparency?

- Explore the potential for generative AI to transform traditional educational assessment practices. What are the long-term implications for teaching and learning?

References

Overono, A. L., & Ditta, A. S. (2023). The rise of artificial intelligence: A clarion call for higher education to redefine learning and reimagine assessment. College Teaching. https://doi.org/10.1080/87567555.2023.2233653

Dai, Y., Liu, A., & Lim, C. P. (2023). Reconceptualizing ChatGPT and generative AI as a student-driven innovation in higher education. Procedia CIRP, 119, 84–90. https://doi.org/10.1016/j.procir.2023.05.002

Halaweh, M. (2023). ChatGPT in education: Strategies for responsible implementation. Contemporary Educational Technology, 15(2). https://doi.org/10.30935/cedtech/13036

International Society for Technology in Education. (2023). Standards. https://iste.org/standards

Kang, L. (2023). Determinants of college students’ actual use of AI-based systems: An extension of the technology acceptance model. Sustainability, 15(6), 5221. https://doi.org/10.3390/su15065221

Lo, C. K. (2023). What is the impact of ChatGPT on education? A rapid review of the literature. Education Sciences, 13(4), 410. https://doi.org/10.3390/educsci13040410

MacArthur, C. A., Graham, S., & Fitzgerald, J. (Eds.). (2015). Handbook of writing research. Guilford Publications.

National Education Technology Plan. (n.d.). https://tech.ed.gov/netp/

Sinha, R. K., Roy, A. D., Kumar, N., & Mondal, H. (2023). Applicability of ChatGPT in assisting to solve higher order problems in pathology. Cureus. https://doi.org/10.7759/cureus.35237