Student Stories of Online Learning

Carrie Lewis Miller, Ph.D. and Michael Manderfield

Abstract

Prior to the COVID-19 pandemic, instructional designers at a Midwest university piloted a survey, based on the Quality Matters General Standards, that asked students about their online course experience. Students were asked to indicate whether they had experienced specific course design elements and whether they considered each element important to their learning experience. Follow-up interviews were also conducted with some participants. Survey data indicated that students perceived their online course experience as good, based on the course design elements they encountered. Implications for additional faculty development programming based on the study's results are discussed.

Keywords: Quality Matters, online learning, course design, faculty development

Introduction

Even before the COVID-19 pandemic, online courses were becoming a more prevalent component of the university experience (Young & Duncan, 2014). Now, in a post-pandemic academic world, online course pedagogy is receiving more attention than ever (Ghosal, 2020; Muljana, 2021; Rapanta et al., 2021). In the 2019-2020 academic year, 51.8% of college students were enrolled in at least one online course, not including courses moved online as an emergency pandemic measure (Smalley, 2021). However, as online courses continue to become more prevalent, how effectively institutions gather student feedback on those courses, and what feedback they gather, remain challenges (Gómez-Rey et al., 2018).

Early in the delivery of fully online courses, institutions tended to give students a digital copy of the end-of-course survey used in face-to-face courses, reasoning, particularly for synchronous courses, that online courses are simply a digital version of face-to-face courses (Gómez-Rey et al., 2018). Online pedagogy researchers began to argue that the evaluation surveys used in face-to-face courses were not appropriate for online courses (Loveland & Loveland, 2003). According to Gómez-Rey et al. (2018), a reliable and valid instrument for gathering student evaluations of teaching (SET) has yet to be developed. Although many instruments for synchronous and asynchronous online courses are founded on instructional design models such as Chickering and Gamson’s (1987) seven principles of effective teaching, “most of the instruments in the literature do not take into account external items related to non-teaching variables (e.g., student characteristics, subject, course modality, etc.), yet there is evidence these variables can also affect teaching performance ratings” (Gómez-Rey et al., 2018, p. 1273). Student evaluations of teaching in an online environment, whether synchronous or asynchronous, need to incorporate feedback mechanisms on the design of the course and the technology used as well as the facilitation of the course (Bangert, 2004, 2008; Hammonds et al., 2017).

There are a variety of challenges in administering and interpreting SETs, particularly for online courses. The empirical data is conflicting: while students tend to rate online and face-to-face courses similarly, faculty ratings differ across disciplines, and confounding influences include characteristics of individual faculty members, such as physical appearance and tenure status, as well as whether the course is part of the student's major or an elective (McClain & Hays, 2018). Because SETs are often used as evidence for tenure and promotion, hiring, or contract renewal, faculty are justifiably skeptical of the reliability and validity of SETs and of how SET data are collected and used (Hammonds et al., 2017).

Brookfield’s (1995) Critical Incident Questionnaire (CIQ) has been one of the solutions implemented to gather a more thorough picture of how students perceive their course experience. Historically, the CIQ has been used for in-class activities and end-of-course evaluations, but more recently it has been used to determine the effectiveness of online courses (Ali et al., 2016; Keefer, 2009; Phelan, 2012). The CIQ can be used to capture the essential, or critical, moments in a course or learning event (Keefer, 2009). The five questions in the CIQ (e.g., “At what moment in class this week were you most distanced from what was happening?”) are designed to promote reflective practice for both student and instructor and should help the instructor make informed changes to pedagogy or course design as needed (Keefer, 2009).

At a medium-sized, public, comprehensive (in the Carnegie Classification) university in the Midwest, the Quality Matters Standards for online course design are supported and promoted as one potential pathway to online course excellence. Instructional designers provide professional development and consultation to faculty who teach online or want to improve their online course design. As part of a pilot study to provide evidence-based professional development for online teaching practice, instructional designers surveyed and interviewed students to gather their perceptions of and feedback on their online course experiences. Using a modification of Bangert’s (2004) Student Evaluation of Online Teaching Effectiveness mapped to the Quality Matters (QM) General Standards, participants were asked Likert-style questions about their perceptions of their online course experience. For each specific course design element, participants indicated whether they had experienced it and whether they considered it important in an online course. The goal of this study was to determine student perceptions of their online course experience rather than to determine the effectiveness of the Quality Matters framework; therefore, students were recruited randomly rather than from specific Quality Matters certified online courses or from online courses not following the QM framework. Follow-up interviews, using questions based on Brookfield’s (1995) CIQ, were offered as an option to provide clarification. Results from this pilot study were used to inform future faculty professional development offerings on online teaching practice.

Conceptual Framework

Student Evaluations of Teaching

Historically, student evaluations of teaching were used as a voluntary measure by instructors who wished to engage in formative practice and to inform improvements to their teaching methods through student feedback (Radchenko, 2020). Over the years, SETs have transformed into methods for “(a) improving teaching quality, (b) providing input for appraisal exercises (e.g., tenure/promotion decisions), and (c) providing evidence for institutional accountability” (Spooren et al., 2013, p. 599). Concerns about the validity and reliability of many of these SETs, often assembled by administrators rather than by experts in instrument design, abound among higher education faculty (Radchenko, 2020; Spooren et al., 2013; Spooren & Christiaens, 2017). In addition to concerns about the design of SETs, instructors also express concern over students’ lack of knowledge about what constitutes good teaching (Spooren et al., 2013).

Concerns about the SET process in general have led to a flood of research on the topic and on the biases that may affect SET results. Spooren and Christiaens (2017) indicate “well-known potentially biasing factors include class size, the teacher’s gender, and course grades” (p. 44). Studies have also shown a negative relationship between academic rigor and SET ratings: students may rate instructors of rigorous courses lower even when they had a positive learning experience (Clayson, 2009).

It is also challenging to get students to participate in the SET process (Neckermann et al., 2022; Radchenko, 2020; Spooren et al., 2013; Spooren & Christiaens, 2017). Nonresponse on SETs, particularly in the digital age, can be explained by a variety of factors, including survey fatigue, lack of interest in the process, and a belief that feedback will not result in any meaningful changes (Adams & Umbach, 2012; Neckermann et al., 2022). Treischl and Wolbring (2017) found that participation differs markedly depending on the survey's delivery mode (paper versus digital) and that sufficient in-class time should be dedicated to completing the survey to maximize participation. Even face-to-face courses evaluated using an online survey show a decline in participation (Stanny & Arruda, 2017), and few strategies have a noticeable impact on participation rates (Adams & Umbach, 2012).

Student Perceptions of Online Learning

Students choose online course modalities for a variety of reasons. Convenience and flexibility are the most cited reasons, but other justifications for online course enrollment may include a lack of face-to-face options, scheduling conflicts, or priorities of family or work life (Mather & Sarkans, 2018). In terms of SETs for online courses, students tend to rate online courses and online instructors lower than their face-to-face counterparts (Van Wart et al., 2019). Some theorized reasons for this disparity include a lack of social presence disconnecting students from teachers; a lack of a structured, organized course environment; and a lack of institutional support mechanisms for struggling students (Van Wart et al., 2019). Mather and Sarkans (2018) found that, when comparing face-to-face and online course experiences, students reported poor faculty communication, long waits for assessment feedback, and challenges in completing virtual group work.

Both students and instructors may perceive online courses and coursework as a passive experience in which students hurry through content just to complete the required activities for a given semester (Kaufmann & Vallade, 2020). When coursework requires more effort or is harder than expected, students feel more discontented and disconnected (Kaufmann & Vallade, 2020). Student engagement in online courses is one of the keys to a successful learning experience and to higher SET participation and ratings (Kaufmann & Vallade, 2020; Martin & Bolliger, 2018; Van Wart et al., 2019). Martin and Bolliger (2018) found “engagement can be enhanced both in the interactive design of online courses and in the facilitation of the online courses. Instructor facilitation is crucial; hence, instructors need to have strategies for time management and engaging discourse” (p. 218). Mather and Sarkans (2018) encourage instructors and institutions to move beyond online, quantitative surveys and to conduct more focus groups or longitudinal or comparative research to provide a more accurate and in-depth look at student perceptions of online learning, which could inform future curriculum decisions and faculty professional development offerings.

Online Course Design

Many factors contribute to a successful online learning environment. Course design, instructor presence, good instructor communication, and peer-to-peer interaction all affect students’ engagement with the course and with the material, as well as their perceptions of the value of the online course experience (Kaufmann & Vallade, 2020). Martin et al. (2019) suggest a systematic approach to course design is one of the key elements of a successful online course. Kaufmann and Vallade (2020) echo these findings and conclude “the course structure needs to be planned in advance; specifically, instructors need to consider how the course design and technology influence opportunities for and quality of interaction, communication, and collaboration with the course assignments and assessments” (p. 9). The design of the online course shapes students’ perception of the overall course climate (Kaufmann & Vallade, 2020). To promote learner engagement, Lewis (2021) suggests several best practices for online course design, including:

- providing a course overview

- designing clearly stated, appropriate, and measurable outcomes

- curating culturally inclusive materials, images, and other resources designed to support the learning needs of diverse learners

- providing accessible online courses

- providing multiple ways to engage learners

- designing inclusive instructions

- developing a consistent user interface experience (p. 68).

A variety of frameworks have been developed to assist with online course design, such as the Quality Matters Rubric (Quality Matters, 2021); the Online Learning Consortium Quality Scorecard (Online Learning Consortium, 2022); the CSU Quality Learning and Teaching Rubric (The California State University, 2022); the University of Illinois Quality Online Course Initiative (QOCI) Rubric (University of Illinois Springfield, 2022); and the Penn State Quality Assurance e-Learning Design Standards (The Pennsylvania State University, 2022). These course frameworks provide guidance for achieving online course design best practices which can, in turn, help engage students in the course. According to Tualaulelei et al. (2021), “online courses require purposeful design not just for cognitive and behavioural engagement, but also for social, collaborative and emotional engagement” (p. 12).

Quality Matters

The Quality Matters Course Design Standards are “a set of eight General Standards and 42 Specific Review Standards used to evaluate the design of online and blended courses” (Quality Matters, 2021, para. 1). The standards are intended to provide a long-term quality assurance mechanism for course design and to improve student engagement and outcomes. The QM standards provide consistency in course design independent of learning management system or academic discipline. It is important to note that the QM standards address only course design elements, not teaching practices. Implementing the QM process, including peer review and course certification, can result in improved student learning outcomes (Hollowell et al., 2017; Swan et al., 2012); student engagement (Sadaf et al., 2019); student motivation and self-efficacy (Simunich et al., 2015); and student retention (Al Naber, 2021).

Purpose of Study

Instructional designers at a medium-sized, public, comprehensive university in the Midwest piloted a survey of students’ online course experiences to provide a quantitative basis for designing evidence-informed faculty development programming. Qualitative data, collected through interviews, provided a more in-depth picture of the students’ perceptions. The following research questions guided this study:

- What are the overall perceptions and experiences of students who took or are taking online classes?

- What elements of online course design do students consider to be important?

- Based on the students’ experiences, what areas of online course design should be the focus of faculty professional development?

Method

Participants

At a medium-sized, public, comprehensive university in the Midwest, participants were randomly recruited in person in the University Student Union and received a giveaway (e.g., t-shirt, book, collectible) for participating. In the year before the COVID-19 pandemic, a total of 121 students participated in an anonymous online survey, and 11 participated in a follow-up interview. Seventeen participants were excluded from the analysis because they completed less than 15% of the questionnaire, completed it in less than 2 minutes, or gave the same response to every item (e.g., answering strongly agree to every item). Of the remaining 105 participants, 54.9% identified as female; 79.8% were between the ages of 18 and 24; 76.2% had taken more than one fully online class; 48.6% were enrolled in their most recent online course at the time of the survey; and 57.7% were in their sophomore or junior years. The racial make-up of the survey participants was representative of the campus population, with 68.3% of the participants identifying as White, 14.4% as Black, 11.5% as Asian, and the remaining 4.8% selecting other or declining to self-identify. Demographic information was not collected from the interview participants.
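The three exclusion rules above (under 15% completion, under 2 minutes, identical answers throughout) can be expressed as a simple screening filter. The following Python sketch is illustrative only: the `Response` record, its field names, and the choice to check straightlining over whatever answer list is passed in (for example, only the opinion items, which share one scale) are hypothetical assumptions, since the paper does not describe how the survey export was structured or screened.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Response:
    """Hypothetical survey record; the study's actual export layout is not described."""
    respondent_id: str
    answers: List[Optional[str]]  # one entry per Likert item; None marks a skipped item
    duration_seconds: float       # time spent on the questionnaire


def completion_rate(r: Response) -> float:
    """Share of items the respondent answered, from 0.0 to 1.0."""
    if not r.answers:
        return 0.0
    return sum(a is not None for a in r.answers) / len(r.answers)


def is_straightlined(r: Response) -> bool:
    """True when every answered item received the identical response."""
    answered = [a for a in r.answers if a is not None]
    return len(answered) > 1 and len(set(answered)) == 1


def keep(r: Response, min_completion: float = 0.15, min_seconds: float = 120.0) -> bool:
    """Retain a respondent only if none of the three exclusion rules applies."""
    return (
        completion_rate(r) >= min_completion
        and r.duration_seconds >= min_seconds
        and not is_straightlined(r)
    )


sample = [
    Response("r1", ["Agree", "Disagree", "Strongly Agree"], 300.0),  # retained
    Response("r2", ["Agree", "Agree", "Agree"], 300.0),              # same answer throughout
    Response("r3", ["Agree", "Disagree"], 90.0),                     # under 2 minutes
]
retained = [r.respondent_id for r in sample if keep(r)]
```

Keeping each rule in its own small function makes it easy to report how many respondents each criterion removed, which is useful when reconciling the number of excluded cases with the number retained.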

Measures

Survey

An anonymous online survey was designed for the purposes of this study. The survey comprised demographic questions, eight blocks of Likert-style questions about various aspects of participants’ online course experience, and a final question asking whether they would be willing to participate in a follow-up interview. The question blocks were mapped to the Quality Matters General Standards categories:

- Course Overview and Introduction

- Learning Objectives (Competencies)

- Assessment and Measurement

- Instructional Materials

- Learning Activities and Learner Interaction

- Course Technology

- Learner Support

- Accessibility and Usability (Quality Matters, 2021, para. 3)

For the purposes of this pilot study, the categories Learner Support and Accessibility were combined, and Usability was broken out into its own category. The questions asked participants to indicate their level of agreement that they had experienced a specific component of the standard in their online course (Strongly Agree, Agree, Disagree, Strongly Disagree) and to rate how important they considered that component (Important, Somewhat Important, Not Important) to their overall learning experience and success in the online environment.

Instructors at the institution where the research was conducted are not obligated to administer the end-of-semester Student Rating of Instruction (SRI) evaluations. In addition, the existing university SRIs are not built on any course design framework such as Quality Matters, and the SRIs administered in online courses address neither online course design best practices nor student perceptions of the importance of those practices. Because the institution is a subscribing Quality Matters institution and its current institutional SRI practice does not address student perceptions of specific online course design elements, the researchers developed a survey that addressed those needs. Other institutions may have more detailed SRIs, and in general, the value of SRIs to understanding students’ perceptions of the teaching and learning process should not be underestimated.

The survey was reviewed by multiple experts on the Quality Matters rubric, including a Master Course Reviewer, who judged it to be a valid measure representing the QM General Standards. Internal consistency for the items and subitems on the survey was assessed using Cronbach’s alpha. Although the internal consistency of the importance items in two categories, Assessments/Measurement (α = 0.634) and Learner Support/Accessibility (α = 0.699), lies in the questionable range (0.6 ≤ α < 0.7), the combined Cronbach’s alpha for the Opinion and Importance items in every category is at least in the acceptable range (0.7 ≤ α < 0.8). Table 1 shows the Cronbach’s alpha values for all items.

| Construct | Opinion/Importance | Cronbach’s alpha | N of Items |
|---|---|---|---|
| Course Overview / Introduction | Opinion | 0.899 | 9 |
| | Importance | 0.78 | 9 |
| | Both | 0.848 | 18 |
| Learning Objectives | Opinion | 0.859 | 4 |
| | Importance | 0.798 | 4 |
| | Both | 0.742 | 8 |
| Assessments/Measurement | Opinion | 0.801 | 5 |
| | Importance | 0.634 | 5 |
| | Both | 0.733 | 10 |
| Instructional Materials | Opinion | 0.847 | 6 |
| | Importance | 0.71 | 6 |
| | Both | 0.794 | 12 |
| Learning Activities | Opinion | 0.879 | 7 |
| | Importance | 0.735 | 7 |
| | Both | 0.82 | 14 |
| Learner Interaction | Opinion | 0.892 | 7 |
| | Importance | 0.832 | 7 |
| | Both | 0.849 | 14 |
| Feedback | Opinion | 0.93 | 14 |
| | Importance | 0.887 | 14 |
| | Both | 0.906 | 28 |
| Course Technology | Opinion | 0.875 | 6 |
| | Importance | 0.852 | 6 |
| | Both | 0.876 | 12 |
| Learner Support/Accessibility | Opinion | 0.837 | 3 |
| | Importance | 0.699 | 3 |
| | Both | 0.703 | 6 |
| Usability | Opinion | 0.899 | 7 |
| | Importance | 0.845 | 7 |
| | Both | 0.855 | 14 |
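Cronbach’s alpha for a block of k items is k/(k − 1) × (1 − sum of item variances / variance of the summed scale). The reliability values in Table 1 were computed in SPSS; the Python sketch below only illustrates the formula. It assumes complete cases (no skipped items) and numeric item codes. The labeling function uses widely cited rule-of-thumb bands, of which the paper itself names only the questionable (0.6 to 0.7) and acceptable (0.7 to 0.8) ranges, so the other labels are an assumption.

```python
from statistics import variance
from typing import Sequence


def cronbach_alpha(scores: Sequence[Sequence[float]]) -> float:
    """Cronbach's alpha for a respondents-by-items matrix of numeric scores.

    alpha = k / (k - 1) * (1 - sum(item variances) / variance(total scores))
    Sample variances are used throughout; population variances give the
    same ratio because both numerator and denominator scale identically.
    """
    k = len(scores[0])
    if k < 2 or len(scores) < 2:
        raise ValueError("need at least two items and two respondents")
    item_vars = [variance([row[i] for row in scores]) for i in range(k)]
    total_var = variance([sum(row) for row in scores])
    return k / (k - 1) * (1 - sum(item_vars) / total_var)


def alpha_band(alpha: float) -> str:
    """Rule-of-thumb reliability labels (assumed; the paper names only two bands)."""
    if alpha >= 0.9:
        return "excellent"
    if alpha >= 0.8:
        return "good"
    if alpha >= 0.7:
        return "acceptable"
    if alpha >= 0.6:
        return "questionable"
    if alpha >= 0.5:
        return "poor"
    return "unacceptable"


# Two perfectly parallel items give alpha = 1.0.
parallel = cronbach_alpha([[1, 1], [2, 2], [3, 3]])

# Labels for three Table 1 values discussed in the text.
labels = [alpha_band(a) for a in (0.634, 0.699, 0.703)]
```

Applied to Table 1, the two importance scales at 0.634 and 0.699 fall in the questionable band, while the weakest combined scale (0.703) sits just inside the acceptable band.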

Interview

To develop the interview questions, Brookfield’s (1995) Critical Incident Questionnaire was modified to align with elements of online courses (e.g., “At what moment in your last online course did you feel most engaged with what was happening?”). Interview participants were selected from survey respondents who answered “yes” to the follow-up interview question. The interview questions were taken directly from the modified CIQ previously used with participants in Quality Matters professional development. The open-ended questions were:

- Q1: At what moment in your last online course did you feel most engaged with what was happening?

- Q2: At what moment in your last online course did you feel most distanced from what was happening?

- Q3: What action that anyone (whether instructor or fellow student) took in your last online course did you find most affirming and helpful?

- Q4: What action that anyone (whether instructor or fellow student) took in your last online course did you find most puzzling or confusing?

- Q5: What about your last online course surprised you the most? (This could be something about your own reactions to what went on, or something that someone did, or anything else that occurs to you.)

Analysis

The survey data were analyzed using SPSS 27 to calculate frequencies, descriptive statistics, and internal reliability for the survey items. The correlation between the reported experience of each element and the importance placed upon it was also calculated. Responses to the eight Likert sections were categorized by favorability (high or low) and importance (high or low). Favorability scores are the percentage of participants who selected either strongly agree or agree on the ‘opinion’ questions. Importance scores are the percentage of participants who selected either important or somewhat important on the ‘importance’ questions.
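The favorability and importance scores are simple percentages, and each section’s experience-importance relationship is reported as a correlation coefficient r. The Python sketch below illustrates both calculations outside SPSS. Several details are assumptions rather than anything the paper states. The numeric codes run from Strongly Disagree = 1 to Strongly Agree = 4 and from Not Important = 1 to Important = 3. Skipped items are dropped from the denominator. The correlation is Pearson’s product-moment r, computed across participants’ section means.

```python
from math import sqrt
from statistics import mean
from typing import Dict, Optional, Sequence, Set

FAVORABLE = {"Strongly Agree", "Agree"}
HIGH_IMPORTANCE = {"Important", "Somewhat Important"}

# Hypothetical numeric codes used only to build section means for the correlation.
OPINION_CODES = {"Strongly Disagree": 1, "Disagree": 2, "Agree": 3, "Strongly Agree": 4}
IMPORTANCE_CODES = {"Not Important": 1, "Somewhat Important": 2, "Important": 3}


def percent_selecting(answers: Sequence[Optional[str]], categories: Set[str]) -> float:
    """Percentage of answered responses that fall in the given categories.

    Skipped items (None) are excluded from the denominator, which is an assumption.
    """
    answered = [a for a in answers if a is not None]
    if not answered:
        raise ValueError("no answers to score")
    return 100.0 * sum(a in categories for a in answered) / len(answered)


def section_mean(answers: Sequence[Optional[str]], codes: Dict[str, int]) -> float:
    """One participant's mean numeric code over the answered items of a section."""
    return mean(codes[a] for a in answers if a is not None)


def pearson_r(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson product-moment correlation of two equal-length sequences."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two equal-length sequences with at least two values")
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)


# Two of three answered opinion responses are favorable: about 66.7%.
favorability = percent_selecting(["Agree", "Disagree", "Strongly Agree", None], FAVORABLE)
```

In practice, `section_mean` would be applied to each participant’s opinion answers and importance answers for one section. `pearson_r` would then be run on the two resulting lists.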

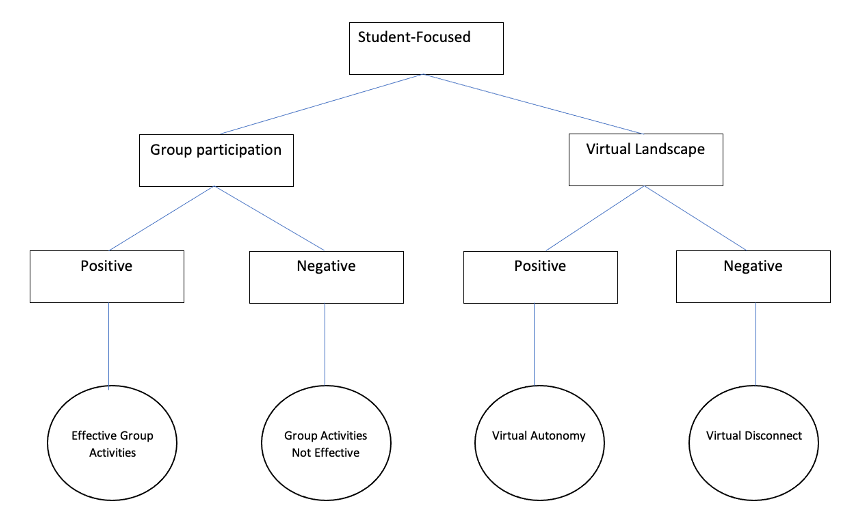

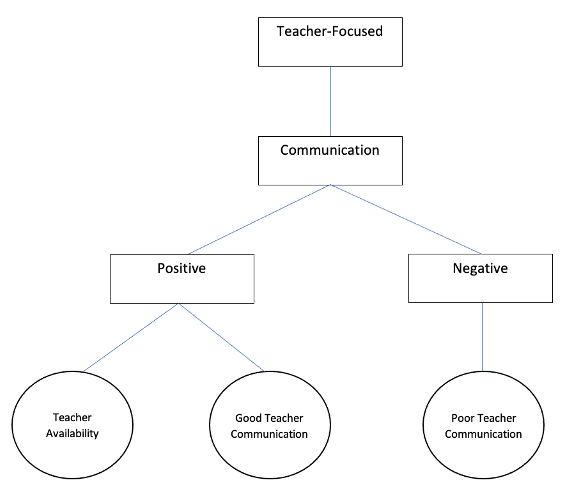

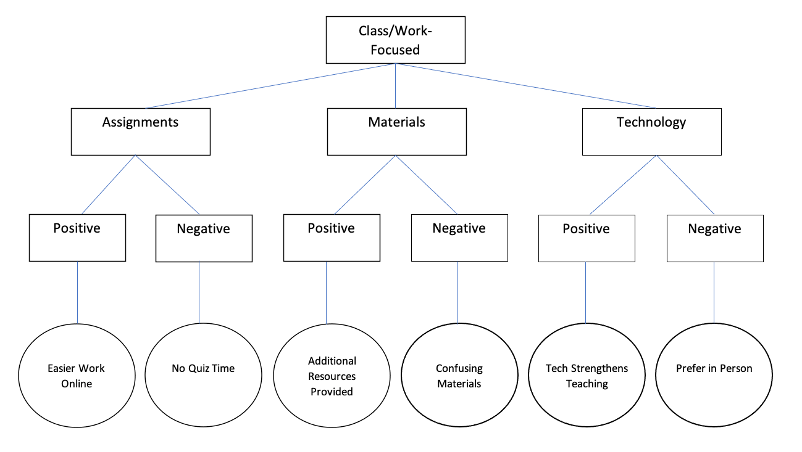

The qualitative interview data (N=11) was coded using a hierarchical coding frame, “where individual codes emerge from the data but then are used to generate insight into more general concepts and thematic statements” (Wasserman et al., 2009, p. 356). The results from this analysis were divided into three categories: student-focused responses, class-focused responses, and teacher-focused responses. Each of those three categories was broken down into two or three subcategories (e.g., Student-focused > Group Participation and Virtual Landscape; Teacher-focused > Poor/Good Teacher Communication). The data coded into those subcategories were then divided into positive and negative groups. The positive and negative comments were then coded into one of 13 themes, shown in Figures 1-3.

Results

Survey

Course Overview and Introduction

There was a moderate positive relationship between student experience and importance (r = .31, p = .005) in the course overview and introduction section. The more participants reported experience with the course overview and introduction items, the more likely they were to indicate the items were important to online course design. The frequency results from this section can be seen in Table 2.

| Item | Experience | Importance |
|---|---|---|
| Q7.8: The instructor introduction was appropriate. | 88.0% | 88.4% |
| Q7.4: The course introduction made me aware of the course and institutional policies. | 81.4% | 92.0% |
| Q7.6: The course content clearly stated the prerequisites and required competency that I would need in order to complete the course successfully. | 83.3% | 94.7% |
| Q7.2: I understood the purpose of course resources. | 82.4% | 98.7% |
| Q7.5: It was clear what technologies I needed to complete the course and how to obtain these. | 81.0% | 98.7% |
| Q7.1: It was easy to get started and find information in the course. | 79.4% | 100.0% |
| Q7.7: The content clearly stated the technical skills that I needed in order to complete the course successfully. | 79.5% | 98.7% |
| Q7.3: I understood what behavior was expected of me in the online classroom. | 77.2% | 92.1% |
| Q7.9: I was prompted to introduce myself to my classmates at the beginning of the course. | 54.5% | 68.0% |

Learning Objectives (Competencies)

There was no significant relationship between the experience ratings and importance ratings by participants on the learning objectives items (r = .08, p = .51). The results from this section can be seen in Table 3.

| Item | Experience | Importance |
|---|---|---|
| Q8.2: I understood what the learning objectives/purpose was for all of the modules in the course. | 85.0% | 96.3% |
| Q8.1: The learning objectives for the course clearly stated what I would do during the course. | 84.2% | 98.8% |
| Q8.3: The activities during the course helped me reach the learning objectives for each module and for the course. | 79.2% | 97.5% |
| Q8.4: The objectives of the course were appropriate for my level. | 78.2% | 98.7% |

Assessment and Measurement

There was a small positive relationship between student experience and importance (r = .28, p = .01) in the assessment and measurement section. The more participants reported experience with the assessment, feedback, and grading items, the more they indicated the items were important to online course design. The results from this section can be seen in Table 4 below.

| Item | Experience | Importance |
|---|---|---|
| Q9.2: The course grading policy was clear and easy to access. | 92.8% | 98.8% |
| Q9.3: The course documentation clearly described course grading/feedback system. | 82.6% | 97.4% |
| Q9.1: The assessments during the course accurately measured my progress towards the learning objectives. | 77.8% | 100.0% |
| Q9.5: Up-to-date grades were available throughout the course. | 74.7% | 97.5% |
| Q9.4: There were a variety of types of assessment throughout the course (papers, exams, projects, etc.). | 74.8% | 94.9% |

Instructional Materials

There was a small positive relationship between student experience and importance (r = .26, p = .02) in the instructional materials section. The more participants reported experience with the course resources and materials items, the more likely they were to indicate the items were important to online course design. The results from this section can be seen in Table 5 below.

| Item | Experience | Importance |
|---|---|---|
| Q10.2: The materials were relevant to the activities and assessments in the course. | 93.0% | 97.5% |
| Q10.4: The materials in the course were up-to-date and relevant. | 88.0% | 100.0% |
| Q10.1: The resources in the course provided appropriate information to help me reach the learning objectives. | 85.9% | 97.6% |
| Q10.5: There were a variety of materials and resources included in the course. | 80.0% | 97.5% |
| Q10.3: The instructor cited all of the resources that they included in the course. | 79.8% | 93.8% |
| Q10.6: It was easy to tell the difference between required and optional information. | 63.0% | 97.5% |
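One simple, descriptive way to read the instructional materials table is to subtract each item’s experience percentage from its importance percentage. This shows where students’ experience falls furthest short of what they value, which bears on the third research question. The Python sketch below ranks the items by that gap. The percentages are copied from the table. The gap metric itself is an illustrative assumption, not an analysis the study reports.

```python
from typing import Dict, List, Tuple

# Instructional Materials items: item -> (experience %, importance %)
TABLE_5: Dict[str, Tuple[float, float]] = {
    "Q10.2": (93.0, 97.5),
    "Q10.4": (88.0, 100.0),
    "Q10.1": (85.9, 97.6),
    "Q10.5": (80.0, 97.5),
    "Q10.3": (79.8, 93.8),
    "Q10.6": (63.0, 97.5),
}


def rank_by_gap(table: Dict[str, Tuple[float, float]]) -> List[Tuple[str, float]]:
    """Items sorted by (importance % - experience %), largest gap first."""
    gaps = [(item, round(importance - experience, 1))
            for item, (experience, importance) in table.items()]
    return sorted(gaps, key=lambda pair: pair[1], reverse=True)


for item, gap in rank_by_gap(TABLE_5):
    print(f"{item}: {gap:.1f} percentage points")
```

Under this reading, Q10.6 (telling required from optional information apart) stands out with a 34.5-point gap. The next largest is Q10.5 at 17.5 points.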

Learning Activities and Learner Interaction

There was no significant relationship between the experience ratings and importance ratings by participants on the learning activities and learner interaction items (r = .19, p = .07). The results for this section can be seen in Table 6 below.

| Item | Experience | Importance |
|---|---|---|
| Q11.11: The course allowed me to take responsibility for my own learning. | 92.6% | 98.7% |
| Q11.6: The course documentation described the expectations for my performance in the online classroom. | 87.5% | 97.6% |
| Q11.1: The activities in the course helped me reach the learning objectives. | 83.7% | 98.8% |
| Q11.10: Instructor effectively communicated any changes/clarifications regarding course requirements. | 80.4% | 97.5% |
| Q11.9: The amount of contact with the instructor was satisfactory (email, discussions, face-to-face meetings, etc.) | 79.3% | 95.0% |
| Q11.8: The instructor was accessible to me outside of the course (both online and in-person). | 76.0% | 93.9% |
| Q11.4: Feedback was delivered in a timely fashion and within the limits described in the course documentations. | 75.2% | 96.4% |
| Q11.5: Feedback was informative, supportive, and articulate. | 73.2% | 97.5% |
| Q11.3: The activities encouraged me to engage with learning. | 72.1% | 98.8% |
| Q11.13: I felt comfortable interacting with the instructor and other students. | 72.1% | 97.5% |
| Q11.7: The instructor was enthusiastic about online teaching. | 70.1% | 95.1% |
| Q11.14: This course included activities and assignments that provided me with opportunities to interact with other students. | 69.4% | 88.8% |
| Q11.2: The course used realistic assignments that motivated me to do my best work. | 67.0% | 96.3% |
| Q11.12: The course was structured so that I could discuss assignments with other students. | 59.6% | 93.7% |

Course Technology

There was a moderate positive relationship between student experience and importance (r = .39, p < .001) in the course technology section. The more participants reported experience with the technology use in course items, the more they indicated the items were important to online course design. The results for this section can be found in Table 7 below.

| Item | Experience | Importance |
|---|---|---|
| Q12.4: The technology tools for the course were easy to obtain. | 89.4% | 94.9% |
| Q12.3: Technological requirements were clearly stated, with links or documentation to support and any necessary software. | 87.4% | 97.5% |
| Q12.1: Technological tools were used appropriately for the course content. | 87.4% | 95.0% |
| Q12.5: The technologies and links in the course were up-to-date and functioned correctly. | 81.7% | 96.3% |
| Q12.6: The documentation provided information and/or links to the policy statements of technology tools in the course. | 81.1% | 95.1% |
| Q12.2: Technological tools helped me reach the learning objectives and enhanced the learning experience. | 79.0% | 93.8% |

Learner Support and Accessibility

There was no significant relationship between the experience ratings and importance ratings by participants on the learner support and accessibility items (r = .07, p = .51). The results for this section can be seen in Table 8 below.

| Item | Experience | Importance |
|---|---|---|
| Q13.2: Accessibility policies and resources were available through the course information. | 85.7% | 95.2% |
| Q13.3: There was information in the course for academic and student services that could help me succeed. | 77.6% | 98.8% |
| Q13.1: The course provided information on technical support. | 63.9% | 95.2% |

Usability

There was no significant relationship between the experience ratings and importance ratings by participants on the usability items (r = .15, p = .18). The results for this section can be seen in Table 9 below.

| Item | Experience | Importance |
|---|---|---|
| Q14.6: The course is organized in a logical manner that facilitates information retrieval. | 83.5% | 97.4% |
| Q14.2: The sequence of online course activities was effectively organized and easy to follow. | 81.4% | 98.7% |
| Q14.7: The multimedia in the course was easy to use. | 81.4% | 97.5% |
| Q14.1: The course was easy to navigate. It was easy to find information throughout the course. | 79.4% | 97.5% |
| Q14.3: The course contained information about the accessibility of the technologies in the course. | 77.6% | 98.7% |
| Q14.5: The course provided an efficient learning environment. | 76.3% | 98.7% |
| Q14.4: There were multiple formats for course materials (audio, written, video, etc.). | 65.9% | 93.9% |
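Each survey subsection above describes its experience-importance correlation as small or moderate, or as not significant. One common way to attach such magnitude labels is Cohen’s conventional benchmarks for r (about .10 small, .30 medium, .50 large). The paper does not name its benchmarks, so the Python sketch below assumes Cohen’s and applies them to the r values reported in this section. Magnitude and significance are separate questions. For example, r = .19 and r = .15 fall in the small band under these benchmarks even though neither correlation was statistically significant here.

```python
from typing import Dict


def effect_size_label(r: float) -> str:
    """Cohen's conventional benchmarks for |r| (assumed; not stated in the paper)."""
    magnitude = abs(r)
    if magnitude >= 0.5:
        return "large"
    if magnitude >= 0.3:
        return "moderate"
    if magnitude >= 0.1:
        return "small"
    return "negligible"


# Experience-importance correlations reported in the survey results above.
REPORTED_R: Dict[str, float] = {
    "Course Overview and Introduction": 0.31,
    "Learning Objectives": 0.08,
    "Assessment and Measurement": 0.28,
    "Instructional Materials": 0.26,
    "Learning Activities and Learner Interaction": 0.19,
    "Course Technology": 0.39,
    "Learner Support and Accessibility": 0.07,
    "Usability": 0.15,
}

for section, r in REPORTED_R.items():
    print(f"{section}: r = {r:.2f} -> {effect_size_label(r)}")
```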

Interview

Analysis of the qualitative data indicated that all participants (N=11) mentioned the importance of good teacher communication and had experienced poor teacher communication in their online courses at some point. Other areas of concern included confusing course materials and a sense of virtual disconnect from the teacher and other classmates. Teacher availability was another common theme, as was the use of technology to strengthen teaching. Table 10 lists the 13 coding themes and the number of responses in each coded category. Example responses and their coding designations are listed below.

- Participant 1: “I think the online courses, they should have this, this interface for students to communicate with each other in a way which would, be like an in-class experience, perhaps using an in-class video” (virtual disconnect, tech strengthens teaching).

- Participant 10: “There was one time I emailed him [the teacher] asking to help me with something and I got a response that did not help me at all. It was just kind of a half-ass response, I was like cool, I’m completely lost now, this is great” (poor teacher communication).

- Participant 5: “He [the teacher] always puts a reminder on what to do and stuff like that cause I work and other things. So the last minutes, the reminder, he got down on me like, Oh, I have to do this. So a reminder actually helped me to keep up” (good teacher communication, teacher availability).

- Participant 2: “So my professor was very good at giving feedback. So with, we had to do a couple of papers for the course. And I felt like I just improved in my writing skills just from a lot of feedback you got, which was very freeing for me. Because I know there are some professors who don’t give great feedback. So I think that was very helpful” (good teacher communication).

- Participant 4: “I just I appreciated my instructor because he was really willing to open his office hours for me even though it technically is an online class, but he was very supportive and I understood what I was doing after I went to his office hours” (teacher availability).

| Code | Number of Responses |
|---|---|
| Teacher Availability | 9 |
| Good Teacher Communication | 11 |
| Poor Teacher Communication | 11 |
| Confusing Materials | 11 |
| Virtual Disconnect | 9 |
| Tech Strengthens Teaching | 8 |
| Virtual Autonomy | 7 |
| Effective Group Activities | 6 |
| No Quiz Time | 5 |
| Easier Work Online | 5 |
| Group Activities Not Effective | 4 |
| Additional Resources Provided | 3 |
| Prefer in Person | 2 |

Discussion

What Are the Overall Perceptions and Experiences of Students Who Took or Are Taking Online Classes?

Overall, participants in this study indicated that their online course experience, from a design perspective, was relatively good. Some areas for improvement remain, such as including meaningful opportunities for students to introduce themselves at the beginning of class; keeping grades up to date and providing a variety of assessments; clearly differentiating between required and optional information; providing information about student success and technical assistance options; designing an efficient, easy-to-navigate learning environment; and providing information in multiple formats. The largest area in need of improvement, according to the survey participants, is Learning Activities and Learner Interaction. Participants identified several areas for improvement in their online learning experience, including the availability of the instructor, whether online or in person; timely, high-quality feedback; peer-to-peer interaction in selected activities and assessments; realistic assignments; and the instructor’s enthusiasm for online teaching. In this category, a primary concern is the lack of design elements that allow students to discuss assignments and activities with their peers. Concerns about confusing course materials were also reported; these can be addressed specifically by Quality Matters standards (Standards 4.1 and 4.2) or by similar guidelines in any other online course design framework.

These findings echo the recommendations of Lewis (2021) and Martin and Bolliger (2018) about the importance of course design for student engagement, as well as the need for teacher-student and peer-to-peer interaction and communication. Instructors who want a framework to guide their course design toward greater student engagement and interaction could implement the Quality Matters Rubric or any of the online course design frameworks their institution recommends. Based on the results of this study, instructors are encouraged to engage in the following practices to support their course design efforts and help them meet the Quality Matters Rubric Standards:

- Consider each activity (weekly assignment, project, etc.) and ensure it maps back to the course- and module-level learning goals/objectives or competencies.

- Vary the activities in the course when possible.

- Encourage learners to engage with one another in sensemaking and “muddiest point” discussions.

- Structure assignments or activities to include opportunities for opportunistic failure and “leveling up”. Ensure these interactions have a clear purpose.

- Include a clear statement in the syllabus and in each assignment about the timeframe within which feedback will be provided.

- Include sections in the syllabus and/or getting started guide articulating expectations for interaction and group work.

Thoughtful implementation of course design elements as presented in any online course design framework can favorably impact students’ perception of the course (Kaufmann & Vallade, 2020).

Beyond course design elements, communication between instructor and student can have a substantial impact on students’ overall experience in the course. The qualitative data from this study indicate that poor teacher communication and limited availability for questions or assistance negatively affect students’ perceptions of the course. Students who were able to get help from the teacher had a more positive perception of their online course experience than those who received no communication or unhelpful communication. This is supported by Kaufmann and Vallade (2020), who recommend good teacher communication as an essential element in engaging students and encouraging an overall positive perception of the course.

What Elements of Online Course Design Do the Students Consider to Be Important?

The frequency of “agree” and “strongly agree” responses was calculated for both experience and importance for all items of the survey. Of all the course design elements participants were asked about, only course introductions received a comparatively low importance rating (67.2% agree or strongly agree). Participants rated all other areas of online course design as highly important, and three areas (It was easy to get started and find information in the course; The assessments during the course accurately measured my progress towards the learning objectives; The materials in the course were up-to-date and relevant) were unanimously rated as important to an online course.
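
The agreement frequencies used throughout the results (for example, the 67.2% importance rating for course introductions) are percentages of respondents choosing “agree” or “strongly agree.” The paper does not describe its exact scale labels or how missing answers were handled, so the following Python sketch assumes text labels and excludes blank answers from the denominator. The function name `percent_agree` and the sample responses are hypothetical, not the study’s data.

```python
from typing import Iterable, Optional

# Assumed coding: the two top points of the agreement scale count as "agreement".
AGREE_LEVELS = {"agree", "strongly agree"}


def percent_agree(responses: Iterable[Optional[str]]) -> float:
    """Percentage of answered items coded 'agree' or 'strongly agree', rounded to 0.1."""
    # Assumption: blank or missing answers are excluded from the denominator.
    answered = [r.strip().lower() for r in responses if r and r.strip()]
    if not answered:
        raise ValueError("no answered responses")
    agreed = sum(1 for r in answered if r in AGREE_LEVELS)
    return round(100 * agreed / len(answered), 1)


# Hypothetical responses to one item's experience and importance questions.
experienced = ["Agree", "Strongly agree", "Disagree", "Neutral", None]
important = ["Strongly agree", "Agree", "Agree", "Neutral"]
print(percent_agree(experienced), percent_agree(important))  # prints: 50.0 75.0
```

Counting only answered items keeps each percentage comparable across items with different amounts of missing data; if the study instead divided by all respondents, the denominator line would change accordingly.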

Although participants indicated low agreement about the importance of course introductions in an online course environment, including a discussion area where learners can introduce themselves and get to know their classmates is a standard instructional design recommendation and a Quality Matters Rubric Standard (Murillo & Jones, 2020). It is also a recognized practice for building social presence and establishing a collaborative environment (Chunta et al., 2021; Clark et al., 2021). In this study, 53% of respondents indicated they did not experience course introductions in their online class, and only 67% rated this design element as important, the lowest importance rating of any element in the survey. Given the amount of research indicating that introductions are an important design element for student engagement and social presence, it may be that participants’ past experiences with course introductions have not been meaningful (Chunta et al., 2021; Clark et al., 2021; Kaufmann & Vallade, 2020; Lewis, 2021; Martin & Bolliger, 2018). Simply asking students to introduce themselves, without encouraging interaction or providing meaningful guidance, can defeat the purpose of these activities. Chunta et al. (2021) recommend instructors ask students to “introduce themselves to the class by sharing something fun about themselves or including pictures of their favorite foods, pets, or vacation sites” (p. 90). Kirby (2020) recommends a variety of icebreaker activities to promote engagement and community in an online course. Kay (2022) suggests asking students to answer three or four questions and modeling the desired response to build community and social presence. Technology can also play a role in online course introductions, for example by using video responses rather than text or by inviting students to create their own introductions in the format that works best for them (Chunta et al., 2021; Kay, 2022).

Based on the Students’ Experiences, What Areas of Online Course Design Should Be the Focus of Faculty Professional Development?

The results of this study suggest that professional development on online course design should go beyond a course design framework such as Quality Matters and emphasize student engagement techniques, the importance of good communication, the benefits of timely feedback, and the impact of instructor presence, all of which can improve students’ perceptions of their online courses. The sheer number of standards in a framework such as Quality Matters could overwhelm an instructor new to online teaching and learning (Bulger, 2016; Burtis & Stommel, 2021). While the systematic design of a course, online or otherwise, can provide a good foundation for learning, it is the human element that matters most (Burtis & Stommel, 2021). Future faculty development offerings should provide an easy-to-use framework to help instructors with the structural design of their courses while heavily emphasizing the need for personal interactions and connections, particularly in an asynchronous online environment (Burtis & Stommel, 2021).

Implications

Instructional designers or faculty developers who provide resources or instruction to faculty and instructors building online courses may want to conduct their own formal or informal research into the student online course experience at their institution. What is done well at one institution may be an opportunity for improvement at another. Using an online course design framework as a baseline or point of discussion is a tangible way to enter into conversations about the importance of communication, timely feedback, and accessibility. It is important to remember that even quality assurance processes and peer reviews do not consider what happens in the course in terms of teaching and interaction, which, as this study suggests, affects the overall student experience.

Limitations

While this study provides insights into the online course experience of students at a medium-sized, public, comprehensive university in the Midwest, it does have some limitations. This study was based on the Quality Matters Rubric Standards and may not be generalizable to other online course design frameworks. These data were also collected before the COVID-19 pandemic, and data collected after the pandemic emergency measures may differ substantially. In response to the pandemic switch to online learning, institutions may have implemented policies or adopted frameworks for online courses that affect the applicability of the results presented here.

The researchers did not ask students to specify which online courses they referred to in their survey responses, nor did they ask students to identify departments, colleges, or specific instructors. In addition, the online course on which participants based their responses may have been taken at a different institution, because the survey did not specify that they should consider only classes taken at the institution where the research was conducted. We also did not distinguish between asynchronous and synchronous online courses, which can involve slightly different design considerations. Therefore, while we can develop a professional development strategy applicable to all faculty, it may miss the specific instructors who would benefit most from additional online course design training.

Finally, the study relied on participants’ ability to interpret and understand course design elements such as learning objectives, assessment, and accessibility. While instructors largely understand these terms, it is hard to determine whether students’ possible lack of understanding affected their responses to survey items such as “I understood what the learning objectives/purpose was for all of the modules in the course.”

Conclusion

Research into students’ online course experiences can provide an evidence-based foundation for faculty professional development. A survey based on Quality Matters Rubric Standards allowed instructional designers to glimpse areas of excellence and opportunities for improvement as discussion points for future faculty professional development offerings. Student interviews provided more clarity on patterns seen in the survey data, showing instructor communication, timely feedback, and availability for questions were all elements potentially impacting students’ perceptions about the course.

Future Research

Opportunities for future research in this area include a post-pandemic survey and comparison of its results with the data presented here. Determining how emergency measures affected instructors’ course design strategies and students’ responses to those strategies would be useful in informing ongoing professional development programming. Conducting focus group interviews with students would provide a more in-depth look at the impact of online course design elements. A similar study could be conducted with a different online course design framework to determine whether comparable results are obtained.

Acknowledgments

The authors would like to thank Dan Houlihan and the Center for Excellence in Scholarship and Research staff for their assistance in editing and review of this manuscript as well as with data analysis. We would also like to thank the graduate assistants who provided support with data collection: Keith Hauck, Katelyn Smith, Steven Rencher, Firdavs Khaydarov, Haley Peterson, and Greta Kos.

References

Adams, M. J. D., & Umbach, P. D. (2012). Nonresponse and Online Student Evaluations of Teaching: Understanding the Influence of Salience, Fatigue, and Academic Environments. Research in Higher Education, 53, 576–591. https://doi.org/10.1007/s11162-011-9240-5

Ali, M. A., Zengaro, S., & Zengaro, F. (2016). Students’ Responses to the Critical Incident Technique: A Qualitative Perspective. Journal of Instructional Research, 5, 70-78.

Al Naber, N. (2021). The Effect of Quality Matters Certified Courses on Online Student Retention at a Public Community College in Illinois (Doctoral dissertation, University of St. Francis).

Bangert, A. W. (2004). The seven principles of good practice: A framework for evaluating online teaching. The Internet and Higher Education, 7(3), 217-232. https://doi.org/10.1016/j.iheduc.2004.06.003

Bangert, A. W. (2008). The development and validation of the student evaluation of online teaching effectiveness. Computers in the Schools, 25(1-2), 25-47. https://doi.org/10.1080/07380560802157717

Brookfield, S. D. (1995). Becoming a critically reflective teacher (1st ed.). Jossey-Bass.

Bulger, K. (2016, March 23). Quality matters: Is my course ready for QM? FIU Online Insider. Retrieved March 29, 2022, from https://insider.fiu.edu/course-quality-matters-ready/

Burtis, M., & Stommel, J. (2021, August 10). The Cult of Quality Matters. Hybrid Pedagogy. Retrieved March 29, 2022, from https://hybridpedagogy.org/the-cult-of-quality-matters/

Chickering, A., & Gamson, Z. (1987). Seven principles of good practice in undergraduate education. AAHE Bulletin, 30(7), 3–7.

Chunta, K., Shellenbarger, T., & Chicca, J. (2021). Generation Z students in the online environment: strategies for nurse educators. Nurse Educator, 46(2), 87-91. https://doi.org/10.1097/nne.0000000000000872

Clark, M. C., Merrick, L. C., Styron, J. L., & Dolowitz, A. R. (2021). Orientation principles for online team‐based learning courses. New Directions for Teaching and Learning, 2021(165), 11-23. https://doi.org/10.1002/tl.20433

Clayson, D. E. (2009). Student Evaluations of Teaching: Are They Related to What Students Learn?: A Meta-Analysis and Review of the Literature. Journal of Marketing Education, 31(1), 16–30. https://doi.org/10.1177/0273475308324086

Ghosal, A. (2020). Making Sense of “Online Pedagogy” after COVID-19 Outbreak. CEA Critic, 82(3), 246.

Gómez-Rey, P., Fernández-Navarro, F., Barbera, E., & Carbonero-Ruz, M. (2018). Understanding student evaluations of teaching in online learning. Assessment & Evaluation in Higher Education, 43(8), 1272-1285. https://doi.org/10.1080/02602938.2018.1451483

Hammonds, F., Mariano, G. J., Ammons, G., & Chambers, S. (2017). Student evaluations of teaching: improving teaching quality in higher education. Perspectives: Policy and Practice in Higher Education, 21(1), 26-33. https://doi.org/10.1080/13603108.2016.1227388

Hollowell, G. P., Brooks, R. M., & Anderson, Y. B. (2017). Course design, quality matters training, and student outcomes. American Journal of Distance Education, 31(3), 207-216. https://doi.org/10.1080/08923647.2017.1301144

Kaufmann, R., & Vallade, J. I. (2020). Exploring connections in the online learning environment: student perceptions of rapport, climate, and loneliness. Interactive Learning Environments, 1-15. https://doi.org/10.1080/10494820.2020.1749670

Kay, R. H. (2022). Ready, Set, Go–Your First Week Online. Thriving Online: A Guide for Busy Educators. https://ecampusontario.pressbooks.pub/aguideforbusyeducators/chapter/ready-set-go-your-first-week-online/

Keefer, J. M. (2009, May). The critical incident questionnaire (CIQ): From research to practice and back again. In Proceedings of the 50th Annual Adult Education Research Conference (pp. 177-180).

Kirby, C. S. (2020). Using share‐out grids in the online classroom: From icebreakers to amplifiers. Biochemistry and Molecular Biology Education, 48(5), 538–541. https://doi.org/10.1002/bmb.21451

Lewis, E. (2021). Best practices for improving the quality of the online course design and learners experience. The Journal of Continuing Higher Education, 69(1), 61-70.

Loveland, K. A., & Loveland, J. P. (2003). Student Evaluations of Online Classes Versus On-Campus Classes. Journal of Business & Economics Research, 1(4). https://doi.org/10.19030/jber.v1i4.2993

Martin, F., & Bolliger, D. U. (2018). Engagement matters: Student perceptions on the importance of engagement strategies in the online learning environment. Online Learning, 22(1), 205-222.

Martin, F., Ritzhaupt, A., Kumar, S., & Budhrani, K. (2019). Award-winning faculty online teaching practices: Course design, assessment and evaluation, and facilitation. The Internet and Higher Education, 42, 34–43. https://doi.org/10.1016/j.iheduc.2019.04.001

Mather, M., & Sarkans, A. (2018). Student perceptions of online and face-to-face learning. International Journal of Curriculum and Instruction, 10(2), 61-76.

McClain, G. A., & Hays, D. (2018). Honesty on student evaluations of teaching: effectiveness, purpose, and timing matter. Assessment and Evaluation in Higher Education, 43(3), 369–385. https://doi.org/10.1080/02602938.2017.1350828

Muljana, P. S. (2021). Course-Designing during the Pandemic and Post-Pandemic by Adopting the Layers-of-Necessity Model. TechTrends, 65(3), 253–255. https://doi.org/10.1007/s11528-021-00611-x

Murillo, A. P., & Jones, K. M. (2020). A “just-in-time” pragmatic approach to creating Quality Matters-informed online courses. Information and Learning Sciences, 121(5/6), 365-380. https://doi.org/10.1108/ILS-04-2020-0087

Neckermann, S., Turmunkh, U., van Dolder, D., & Wang, T. V. (2022). Nudging student participation in online evaluations of teaching: Evidence from a field experiment. European Economic Review, 141, 104001. https://doi.org/10.1016/j.euroecorev.2021.104001

Online Learning Consortium. (2022). OLC Quality Scorecard Suite. https://onlinelearningconsortium.org/consult/olc-quality-scorecard-suite/

Phelan, L. (2012). Interrogating students’ perceptions of their online learning experiences with Brookfield’s critical incident questionnaire. Distance Education, 33(1), 31-44. https://doi.org/10.1080/01587919.2012.667958

Quality Matters. (2021). Course Design Rubric Standards. https://www.qualitymatters.org/qa-resources/rubric-standards/higher-ed-rubric

Radchenko. (2020). Student evaluations of teaching: unidimensionality, subjectivity, and biases. Education Economics, 28(6), 549–566. https://doi.org/10.1080/09645292.2020.1814997

Rapanta, C., Botturi, L., Goodyear, P., Guàrdia, L., & Koole, M. (2021). Balancing technology, pedagogy and the new normal: Post-pandemic challenges for higher education. Postdigital Science and Education, 3(3), 715-742. https://doi.org/10.1007/s42438-021-00249-1

Sadaf, A., Martin, F., & Ahlgrim-Delzell, L. (2019). Student Perceptions of the Impact of Quality Matters-Certified Online Courses on Their Learning and Engagement. Online Learning, 23(4), 214-233. https://doi.org/10.24059/olj.v23i4.2009

Simunich, B., Robins, D. B., & Kelly, V. (2015). The impact of findability on student motivation, self-efficacy, and perceptions of online course quality. American Journal of Distance Education, 29(3), 174-185. https://doi.org/10.1080/08923647.2015.1058604

Smalley, S. (2021, October 13). Half of All College Students Take Online Courses. Inside Higher Ed. https://www.insidehighered.com/news/2021/10/13/new-us-data-show-jump-college-students-learning-online#.Yjh3l41mQ9o.link

Spooren, P., Brockx, B., & Mortelmans, D. (2013). On the Validity of Student Evaluation of Teaching: The State of the Art. Review of Educational Research, 83(4), 598–642. https://doi.org/10.3102/0034654313496870

Spooren, P., & Christiaens, W. (2017). I liked your course because I believe in (the power of) student evaluations of teaching (SET). Students’ perceptions of a teaching evaluation process and their relationships with SET scores. Studies in Educational Evaluation, 54, 43–49. https://doi.org/10.1016/j.stueduc.2016.12.003

Stanny, C. J., & Arruda, J. E. (2017). A Comparison of Student Evaluations of Teaching with Online and Paper-Based Administration. Scholarship of Teaching and Learning in Psychology, 3(3), 198–207. https://doi.org/10.1037/stl0000087

Swan, K., Matthews, D., Bogle, L., Boles, E., & Day, S. (2012). Linking online course design and implementation to learning outcomes: A design experiment. The Internet and Higher Education, 15(2), 81–88. https://doi.org/10.1016/j.iheduc.2011.07.002

The Pennsylvania State University. (2022). Penn State quality assurance e-learning design standards. https://weblearning.psu.edu/resources/penn-state-online-resources/penn-state-quality-assurance-e-learning-design-standards/

The California State University. (2022). Online and hybrid course certifications & professional development. https://ocs.calstate.edu/

Treischl, E., & Wolbring, T. (2017). The causal effect of survey mode on students’ evaluations of teaching: empirical evidence from three field experiments. Research in Higher Education, 58(8), 904–921. https://doi.org/10.1007/s11162-017-9452-4

Tualaulelei, E., Burke, K., Fanshawe, M., & Cameron, C. (2021). Mapping pedagogical touchpoints: Exploring online student engagement and course design. Active Learning in Higher Education, 1-15. https://doi.org/10.1177/1469787421990847

University of Illinois Springfield. (2022). Quality online course initiative (QOCI) rubric. https://www.uis.edu/ion/resources/qoci/

Van Wart, M., Ni, A., Rose, L., McWeeney, T., & Worrell, R. (2019). A literature review and model of online teaching effectiveness integrating concerns for learning achievement, student satisfaction, faculty satisfaction, and institutional results. Pan-Pacific Journal of Business Research, 10(1), 1-22.

Wasserman, J. A., Clair, J. M., & Wilson, K. L. (2009). Problematics of grounded theory: innovations for developing an increasingly rigorous qualitative method. Qualitative Research, 9(3), 355-381. https://doi.org/10.1177/1468794109106605

Young, S., & Duncan, H. E. (2014). Online and face-to-face teaching: How do student ratings differ? MERLOT Journal of Online Learning and Teaching, 10(1), 70-79.