The Relative Influence of Instructor Training on Student Perceptions of Online Courses and Instruction

Mary Bowne, Ed.D.; Melissa Wuellner, Ph.D.; Lisa Madsen, M.S.; Jessica Meendering, Ph.D.; and John Howard, Ph.D.

Abstract

Online learning opportunities have increased greatly in recent years. Various studies have examined online courses and instructor practices, but they have not examined students’ perceptions of their online courses and instructors in relation to whether those instructors completed a voluntary online certification program. Students who took online courses at a Midwestern university completed a survey about their perceptions of their individual online course and instructor. Results showed that certified instructors received higher ratings than non-certified instructors. The certification program uses a “faculty as student” model, in which faculty take courses from a student learner perspective to gain experiential learning about the pedagogy needed for successful online learning and effective teaching.

Introduction

Online learning opportunities have increased greatly throughout the United States (Online Learning Consortium, 2016). The expansion of online education has notable benefits, such as greater flexibility and convenience for students compared to traditional face-to-face course delivery (Sher, 2008). However, online learning environments differ from traditional face-to-face ones, particularly because students must self-regulate much of their own learning (Boyd, 2004) and because interactions among students, and between students and instructors, differ in nature (Smith et al., 2001).

A growing number of studies have examined aspects of online course design and instructor practices that enhance student learning and satisfaction (Kuo et al., 2013; Yukselturk & Yildirim, 2008; Sessums et al., 2006; Jiang & Ting, 1998; among others), yet many of these studies provide unclear or contradictory information. For example, some studies have found that strategies promoting online “connectedness” between students are critical for learner success (So & Brush, 2008; So & Kim, 2005). Other studies suggest that the major predictor of success and satisfaction is the student’s “skill at learning to learn,” followed by student-faculty contact, program factors such as relevance and integration, and opportunities to learn outside the traditional framework (Neumann & Neumann, 2016). Taken together, this research has yet to yield a consistent set of findings and recommendations for student satisfaction and success in distance education.

In response to the increased demand for online education, some institutions have expanded online enrollment opportunities without necessarily attending to the qualitative aspects of online teaching and learning. Others have offered varied professional development opportunities to support both the quantitative and qualitative aspects of online success.

The nature of professional development opportunities for online teaching is also varied and changing. In 2016, 94% of 2- and 4-year institutions developed their own distance education courses (IES NCES, 2016). Eighty percent of all institutions offered faculty training for online teaching, while 20% did not (Herman, 2012). In addition, a comprehensive survey found that the most common types of faculty development programs, each offered by 75% or more of higher education institutions, were websites or LMSs with resources, technical services (without content or pedagogy), printed and multimedia materials, consultations and informal exchanges, internal workshops (under 4 hours), conference attendance, and critical review of courses. Finally, 54% of institutions offered online synchronous training (Herman, 2012).

The survey also revealed that 70% of faculty described their institutional support of online instruction as average or below average, while one third described online course development and teaching as requiring more time than traditional courses (Herman, 2012). Previous studies did not control for faculty members’ prior training in online teaching, and this omission may partly explain their conflicting results.

Based on this research, we, a group of certified online faculty members at a Midwestern university, wanted to learn more about the university’s online students, specifically their perceptions of their current online courses and instructors. Because we were certified through the university’s voluntary Online Instructor Certification Program (OICP), we wanted to find out whether students would report greater online satisfaction and success with faculty who had participated in the OICP than with faculty who had not. The primary objective of this study was to compare the perceptions of students who took a course from a certified instructor with those of students who took a course from a non-certified instructor.

Overview

Online Instructor Certification Program (OICP)

The Online Instructor Certification Program (OICP) offered at the Midwestern university where this study was conducted was designed, and is currently used, to teach the skills, knowledge, and best practices required for quality online/hybrid instruction. The voluntary program allows online/hybrid instructors to become certified at one of three levels: Basic, Advanced, and Master. To better understand online pedagogy, faculty in the program are treated as online students as they complete the levels through the university’s LMS, under the direction of the campus Instructional Design Services. The program’s content includes the course review process, measurable course objectives and learning outcomes, types of assessment, communication strategies, collaboration, social networking, cloud services and applications, copyright, multimedia, and alignment of goals, content, and assessment. Before starting the training, faculty seeking the Basic Level must have taught online for at least one semester, while faculty seeking the Master Level must have taught online for four semesters. As faculty move through the levels of the OICP, the content becomes more in-depth and the activities become larger and more collaborative.

Methods

Survey Planning

We first sought a survey that focused primarily on students’ perceptions of various online components, with a particular emphasis on satisfaction with online courses and online faculty. We used portions of the Distance Education Course Evaluation Instrument, developed by an academic working group at the University of Florida (Sessums, Irani, Telg, & Roberts, 2006). The survey includes sections on instructor preparedness, student preparedness, technology, and course design. We adapted it by adding questions to identify relevant student demographics (see Appendix A). Broadly, the survey was used to evaluate online students’ perceptions of their respective online instructor and course. The survey was administered electronically via QuestionPro®. The research project was approved by the university’s Institutional Review Board (IRB-16020170-EXM). The survey was piloted with 35 students in three online courses before full implementation.

Recruitment of Respondents

All online instructors (both certified and uncertified) were informed of the survey through an email sent by the research group as well as through a weekly email newsletter from the university’s president. Instructors were also informed that, because class results would be returned to them individually, the university would allow them to use the survey results as a teaching-effectiveness evaluation tool for their required annual individual staff evaluations. Instructors were asked to inform their students of the survey by sending an email we drafted to all students in the selected courses. To increase response rates, we offered each student who completed the survey one entry into a drawing for four $100 gift cards to the SDSU Bookstore. Both instructor and student participation in the survey was voluntary.

Student responses were categorized into one of two groups: Certified Instructor or Non-Certified Instructor. Instructors who were certified had completed 1-3 levels of certification within the OICP. Non-certified instructors were faculty who had not obtained any level of certification within the OICP.

Factors Measured

Students answered multiple-choice and Likert-scale questions about their demographics and their perceptions of their online instructor and online class. Students were asked to select one response for each item.

Specific demographic questions included the following: age, overall GPA, whether the course was required for the student’s degree program, how many credits the student was enrolled in, how many hours per week the student worked outside of schoolwork, how many hours per week the student spent on family obligations, how many online courses the student had taken before the current one, and which devices the student used to access the online course.

The factors compared between the two groups of faculty for the overall rating of online course quality included the following: relationship between exams and learning activities, appropriateness of assigned materials to the nature and subject of the course, reliability of the technology used to deliver the course, coordination of the learning activities with the technology, technical support’s ability to resolve technical difficulties, availability of necessary library resources, and convenience of registration procedures.

The factors compared between the two groups of faculty for the overall rating of online instructor quality included the following: description of course objectives and assignments, communication of ideas and information, expression of expectations for performance in the class, timeliness in responding to students, timeliness in returning assignments, respect and concern for students, interaction opportunities with other students, stimulation of interest in the course, coordination of the learning activities with the technology, enthusiasm for the subject, and encouragement of independent, creative, and critical thinking (see Appendix A).

Data Analysis

The research team used SPSS version 23 (Statistical Package for the Social Sciences) for statistical analysis. Students’ demographics were summarized with descriptive statistics and reported as the percentage of respondents in each category. We then divided the respondents by instructor category: 1) students who took an online course from a Certified Instructor; and 2) students who took an online course from a Non-Certified Instructor. Students’ perceptions of their online course and instructor were summarized for these two groups, and potential differences in perceptions were tested using multinomial regression. Statistical significance was set at α = 0.05.
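For readers unfamiliar with the model class named above, the following is a minimal sketch of a multinomial logit for a single rating item. The text does not specify the model structure, so this assumes that instructor certification status was the only predictor and that one rating level served as the reference category; the symbols are introduced here for illustration only.

```latex
% Y_i : rating given by student i on one survey item (categories j = 1, ..., J)
% x_i : 1 if student i's instructor was certified, 0 otherwise
% Category J is the reference (baseline) category
\begin{aligned}
\ln\frac{P(Y_i = j \mid x_i)}{P(Y_i = J \mid x_i)}
  &= \beta_{0j} + \beta_{1j}\,x_i,
  \qquad j = 1, \dots, J-1, \\
P(Y_i = j \mid x_i)
  &= \frac{\exp(\beta_{0j} + \beta_{1j}\,x_i)}
          {1 + \sum_{k=1}^{J-1} \exp(\beta_{0k} + \beta_{1k}\,x_i)},
  \qquad j = 1, \dots, J-1.
\end{aligned}
```

Under this sketch, exp(β₁ⱼ) is the ratio of the odds of choosing category j rather than the reference category for students of certified versus non-certified instructors, and testing β₁ⱼ = 0 for all j asks whether the rating distributions differ between the two groups.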

Findings

Thirty-one faculty members who taught 45 sections of online courses sent the online survey to their students via email. Of the 31 faculty members who volunteered, 14 had enrolled in the OICP, offered on campus through the state regents’ online learning management system. Of these 14 faculty members, seven had completed and maintained the Master Certification Level, the highest level obtainable through the OICP; six had obtained the Advanced Certification Level; and one had obtained the Basic Certification Level.

The electronic survey was administered to at least 505 undergraduate students, and 322 students completed the survey in its entirety (a response rate of approximately 64% or less). Of the 322 respondents, 152 were enrolled in a course taught by a faculty member who had completed an OICP course, whereas 170 were enrolled in a course taught by a faculty member who had not completed any portion of the OICP. The number of students completing the survey per course ranged from 0 to 28. Students took 6 minutes on average to complete the survey. Most students (95%) completed the survey on a desktop or laptop computer; the rest used a smartphone.
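The sample-size percentages in this section can be checked with a few lines of arithmetic. The sketch below uses only the counts stated in the text; because the survey reached “at least” 505 students, the computed response rate is an upper bound. The helper name `pct` is illustrative, not part of the study’s analysis.

```python
def pct(part: int, whole: int) -> float:
    """Return part/whole as a percentage rounded to one decimal place."""
    return round(100 * part / whole, 1)


# Counts as reported in the Findings section.
invited = 505            # students who received the survey ("at least 505")
completed = 322          # students who completed the survey in its entirety
certified_group = 152    # respondents taught by an OICP-trained instructor
noncertified_group = 170 # respondents taught by an instructor with no OICP training

faculty_total = 31       # faculty who sent the survey to their students
faculty_certified = 14   # faculty with at least one OICP level
faculty_master = 7       # faculty at the Master Certification Level

# The two respondent groups should account for every completed survey.
assert certified_group + noncertified_group == completed

print("Response rate (upper bound):", pct(completed, invited))
print("Certified-instructor share:", pct(certified_group, completed))
print("Non-certified share:", pct(noncertified_group, completed))
print("Certified faculty share:", pct(faculty_certified, faculty_total))
print("Master level among certified:", pct(faculty_master, faculty_certified))
```

With these counts the response rate works out to about 63.8%, the two respondent groups to about 47.2% and 52.8%, and the certified share of faculty to about 45.2%.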

Results showed similar demographics between students who took an online course from a Certified Instructor and students who took an online course from a Non-Certified Instructor. Notably, nearly 70% of students in both groups indicated they had taken three or more online courses before the online course selected for this study. Other majority responses included the following:

- being between 19-22 years of age

- having an overall GPA of 2.8-4.0

- having an A or B grade expectation for the enrolled course

- taking 12-17 credits per semester

- devoting similar amounts of time to work and to family members.
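The descriptive step behind these majority responses (the percentage of respondents in each answer category, computed separately for each instructor group) can be sketched as follows. This is an illustration only: the function name and the toy records are hypothetical, they are not the study’s data, and the study itself used SPSS rather than this code.

```python
from collections import Counter, defaultdict


def percent_by_group(records):
    """Tabulate (group, category) pairs as {group: {category: percent}}.

    Each record is one respondent's answer to a single multiple-choice item,
    paired with the instructor group that respondent belongs to.
    Percentages are computed within each group and rounded to one decimal.
    """
    counts = defaultdict(Counter)
    for group, category in records:
        counts[group][category] += 1

    table = {}
    for group, counter in counts.items():
        total = sum(counter.values())
        table[group] = {
            category: round(100 * n / total, 1) for category, n in counter.items()
        }
    return table


# Hypothetical toy responses (NOT study data) to the item
# "How many online courses have you taken prior to this one?"
toy_records = [
    ("Certified", "3 or more"),
    ("Certified", "3 or more"),
    ("Certified", "1"),
    ("Non-Certified", "3 or more"),
    ("Non-Certified", "2"),
]

for group, shares in percent_by_group(toy_records).items():
    print(group, shares)
```

Computing percentages within each group, rather than over all respondents, is what allows the two groups to be compared even though they contain different numbers of students.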

Results also indicated that students tended to respond “Completely true” to self-assessment items about their comfort with technology and their ability to learn independently. Specifically, students indicated that they do not give up easily when confronted with technology-related obstacles (93%), are good at completing tasks independently (98%), achieve the goals they set for themselves (99%), and regulate their behavior to complete course requirements (99%).

The same four variables showed significant, positive differences in students’ perceptions of both quality online courses and quality online instructors: relationship between exams and learning activities, appropriateness of assigned materials to the nature and subject of the course, timeliness in delivering required materials, and technical support’s ability to resolve technical difficulties.

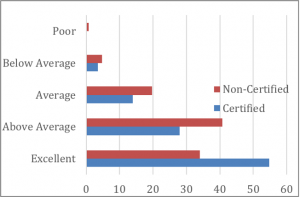

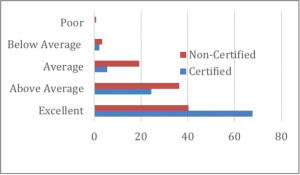

Multinomial regressions indicated that faculty who had participated in the OICP received higher “Excellent” ratings on all questions related to both quality online courses (pseudo-R² = .76; p < .05; see Figure 1) and quality online instruction (pseudo-R² = .91; p < .05; see Figure 2). Specifically, Certified Instructors received higher ratings than Non-Certified Instructors for both overall course quality and overall instructor quality. Forty-five percent of the participating instructors (14 of 31) had achieved at least one level of certification in the OICP; of these, half (7 of 14) were certified as “Master Online Instructors.”
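The pseudo-R² values reported in this paragraph do not name the specific statistic. SPSS’s multinomial logistic regression output lists three pseudo-R² measures (Cox and Snell, Nagelkerke, and McFadden); since the text does not say which one is reported, their standard definitions are given here for reference, where L_M is the maximized likelihood of the fitted model, L_0 that of the intercept-only model, and n the number of respondents.

```latex
\begin{aligned}
R^2_{\text{McFadden}}   &= 1 - \frac{\ln L_M}{\ln L_0}, \\
R^2_{\text{Cox--Snell}} &= 1 - \left(\frac{L_0}{L_M}\right)^{2/n}, \\
R^2_{\text{Nagelkerke}} &= \frac{R^2_{\text{Cox--Snell}}}{1 - L_0^{2/n}}.
\end{aligned}
```

Because these measures are on different scales (the Cox and Snell value cannot reach 1, which the Nagelkerke rescaling corrects), values such as .76 and .91 are best interpreted with the specific statistic in mind.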

Discussion and Future Directions

Overall, results from our study showed that Certified Instructors received higher ratings than Non-Certified Instructors for both overall course quality and overall instructor quality. This suggests the value of ongoing professional development for online instructors, particularly classes and programs that are easily accessible either on campus or online. It also suggests the value of creating professional development opportunities in which instructors act as student learners, so that they understand student perceptions and viewpoints and the reasoning and purpose behind various online pedagogical tools. The OICP offers these opportunities to faculty at the university where this study was conducted.

It is also important to note that the demographics of students enrolled in courses taught by instructors who had obtained any level of OICP certification were similar to those of students enrolled in courses taught by instructors who had not. The results also showed significant, positive relationships among several course design aspects, instructor practices, and students’ perceptions of their online course.

Research has noted that students often select online courses because they fit better into their daily schedules (Willging & Johnson, 2009). The majority of students in this study were working more than 10 hours per week and/or taking full-time credit loads (≥ 12 credit hours), and thus may have been drawn to the flexibility of online courses. Sanford et al. (2014) noted that some students may perceive an online course as “successful” if it is convenient for them, regardless of their personal preference for learning online or face to face. Thus, other factors not identified in this study may be contributing to the overall positive perceptions noted by students.

Motivation to take an online course may have played a role in these results as well. Two motivating factors may have influenced this study: 1) the online course was a requirement for the student’s major; and 2) online courses provide convenience in the student’s schedule. A majority of students may have taken the online course as part of their degree program. These students may have been more motivated to engage with their online course, spending more time on it to achieve deeper learning (Wuellner, 2015) and therefore being more satisfied with it. Additionally, students who take an online course within their major or program may more readily recognize the course’s relevance to their future careers and view the course as meaningful or useful (Summers et al., 2005). Thus, students may be more satisfied with online courses within their degree programs than with online courses that fulfill general education requirements.

Students reported very high levels of comfort with technology. At face value, these findings may not be surprising, given that commentators have described Millennials, who made up most of this study’s sample, as “digital natives” (Meyer, 2015). However, other research has shown that many Millennials have low skills in solving problems with technology (Schaffhauser, 2015). These results raise the question of whether students overestimate their own technology skills or truly possess the specific skills needed to succeed in online courses. Students who struggle with technology may perform less well in, and be less satisfied with, online courses (Rodriguez et al., 2008). A growing number of students nationwide are taking online classes because of the offerings of particular degree programs or personal time constraints (Allen & Seaman, 2014), yet some may lack the technology skills needed to succeed in or enjoy the experience. Further research is needed to examine which specific technology skills students need in order to navigate and learn online successfully, and whether Millennial students possess those skills.

Course design, defined broadly, greatly affects retention and completion. A key component of course design assessment is student perception, and students tend to judge a distance education course by the level of instructor interaction and the quality of the course, or the lack thereof. In addition, an expanding view of effective distance education design includes requirements for the instructor, such as past experience learning online as a student, a stronger technology skill set (including safety and implementation), and the ability to use data analytics and other assessment findings to modify courses.

Professional development opportunities in which faculty have practical experience as student learners are often identified as among the most effective means of learning about online teaching. Additionally, because instructors work at a variety of locations, online training opportunities reach more faculty than on-campus offerings. For these reasons, professional development should be offered online, and it should be a continuous process of improvement supported by online mentoring and monitoring (Southern Regional Education Board, 2009). These trainings must also focus on online pedagogy, specifically by having faculty act as students within an online certification program such as the OICP used at the university where this study was conducted. Taking a student learner’s viewpoint, rather than an instructor’s, helps faculty understand the pedagogy needed for successful online teaching and learning. Training programs for online instructors in both course design and student interaction should also consider focusing on the variables identified in this study.

Conclusion

The changing faces and goals of today’s college students, together with the barriers to broad and effective professional development for faculty, point to a need for significant reforms in distance education. Such reform must start with a better understanding of students and their perceptions of online learning and teaching, along with quality professional development opportunities for faculty who teach online. Professional development opportunities are necessary for faculty to build on current online pedagogical strategies. Offering concentrated training modules and programs on course design and instructor practices in which faculty view the course from a student learner perspective, such as the OICP, gives faculty continuous opportunities to improve their teaching and better support student learning.

References

Allen, I. E., & Seaman, J. (2014). Grade change: Tracking online education in the United States. Sloan Consortium. Retrieved from http://onlinelearningconsortium.

Boyd, D. (2004). The characteristics of successful online learners. New Horizons in Adult Education, 18, 31–39.

Herman, J. (2012). Faculty development programs: The frequency and variety of professional development programs available to online instructors. Journal of Asynchronous Learning Networks, 16(5), 87–106.

IES NCES. (2016). Distance education at postsecondary institutions. Retrieved from https://nces.ed.gov/programs/coe/pdf/coe_sta.pdf

Jiang, M., & Ting, E. (1998). Course design, instruction, and students’ online behaviors: A study of instructional variables and student perceptions of online learning. Paper presented at the Annual Meeting of the American Educational Research Association, April 13-17, 1998, San Diego, CA.

Kuo, Y.-C., Walker, A. E., Belland, B. R., & Schroder, K. E. E. (2013). A predictive study of student satisfaction in online education programs. The International Review of Research in Open and Distance Learning, 14(1), 16-39.

Meyer, K. (2015, January 3). Millennials as digital natives: Myths and realities. Nielsen Norman Group. Retrieved from https://www.nngroup.com/articles/millennials-digital-natives/.

Neumann, Y., & Neumann, E. (2016, May 3). What we’ve learned after several decades of online learning. Inside Higher Ed. Retrieved from http://www.insidehighered.com

Online Learning Consortium. (2016). Babson study: Distance education enrollment growth continues. Retrieved from https://onlinelearningconsortium.org/news_item/babson-study-distance-education-enrollment-growth-continues-2/

Rodriguez, M. C., Ooms, A., & Montañez, M. (2008). Students’ perceptions of online-learning quality given comfort, motivation, satisfaction, and experience. Journal of Interactive Online Learning, 7(2), 105-125.

Sanford, Jr., D. M., Ross, D. N., Rosenbloom, A., Singer, D., & Luchsinger, V. (2014). The role of the business major in student perceptions of learning and satisfaction with course format. MERLOT Journal of Online Learning and Teaching, 10(4). Retrieved from http://jolt.merlot.org/vol10no4/Ross_1214.pdf.

Schaffhauser, D. (2015, June 11). Report: 6 of 10 Millennials have ‘low’ technology skills. The Journal. Retrieved from https://thejournal.com/articles/2015/06/11/report-6-of-10-millennials-have-low-technology-skills.aspx.

Sessums, C., Irani, T., Telg, R., & Roberts, T. G. (2006). Case study: Developing a university-wide distance education evaluation program at the University of Florida. In Williams, D., Howell, R., & Hricko, M. (Eds.), Online assessment, measurement and evaluation: Emerging practices (pp. 76–91). Hershey, PA: Information Science Publishing.

Sher, A. (2008). Assessing and comparing interaction dynamics, student learning, and satisfaction within web-based online learning programs. MERLOT Journal of Online Learning and Teaching, 4(4). Retrieved from http://jolt.merlot.org/vol4no4/sher_1208.htm.

Smith, G. G., Ferguson, D., & Caris, M. (2001). Online vs. face-to-face. Technological Horizons in Education, 28(9). Retrieved from https://www.questia.com/library/journal/1G1-74511367/online-vs-face-to-face.

So, H. J., & Brush, T. A. (2008). Student perceptions of collaborative learning, social presence and satisfaction in a blended learning environment: Relationships and critical factors. Computers & Education, 51(1), 318–336.

So, H. J., & Kim, B. (2005). Instructional methods for computer supported collaborative learning (CSCL): A review of case studies. Paper presented at the 10th CSCL Conference, Taipei, Taiwan.

Summers, J. J., Waigandt, A., & Whittaker, T. A. (2005). A comparison of student achievement and satisfaction in an online versus a traditional face-to-face statistics class. Innovative Higher Education, 29, 233–250. doi:10.1007/s10755-005-1938-x.

Willging, P. A., & Johnson, S. D. (2009). Factors that influence students’ decision to drop out of online courses. Journal of Asynchronous Learning Networks, 13(3), 115–127.

Wuellner, M. R. (2015). Student success factors in two online introductory-level natural resource courses. Natural Sciences Education, 44, 1-9.

Yukselturk, E., & Yildirim, Z. (2008). Investigation of interaction, online support, course structure and flexibility as the contributing factors to students’ satisfaction in an online certificate program. Educational Technology & Society, 11(4), 51–65.

Zimmerman, B. J. (2001). Theories of self-regulated learning and academic achievement: An overview and analysis. In B. J. Zimmerman & D. H. Schunk (Eds.), Self-regulated learning and academic achievement: Theoretical perspectives (pp. 1-37). Mahwah, NJ, US: Lawrence Erlbaum Associates Publishers.

Appendix A: Survey Questions

|  | Excellent | Above Average | Average | Below Average | Poor |
|---|---|---|---|---|---|
| Description of course objectives and assignments | ❏ | ❏ | ❏ | ❏ | ❏ |
| Communication of ideas and information | ❏ | ❏ | ❏ | ❏ | ❏ |
| Expression of expectations for performance in this class | ❏ | ❏ | ❏ | ❏ | ❏ |
| Timeliness in responding to students | ❏ | ❏ | ❏ | ❏ | ❏ |
| Timeliness in returning assignments | ❏ | ❏ | ❏ | ❏ | ❏ |
| Respect and concern for students | ❏ | ❏ | ❏ | ❏ | ❏ |
| Interaction opportunities with other students | ❏ | ❏ | ❏ | ❏ | ❏ |
| Stimulation of interest in course | ❏ | ❏ | ❏ | ❏ | ❏ |
| Coordination of the learning activities with the technology | ❏ | ❏ | ❏ | ❏ | ❏ |
| Enthusiasm for the subject | ❏ | ❏ | ❏ | ❏ | ❏ |
| Encouragement of independent, creative, and critical thinking | ❏ | ❏ | ❏ | ❏ | ❏ |
| Overall rating of instructor | ❏ | ❏ | ❏ | ❏ | ❏ |

|  | Excellent | Above Average | Average | Below Average | Poor | Not sure |
|---|---|---|---|---|---|---|
| Relationship between examinations and learning activities | ❏ | ❏ | ❏ | ❏ | ❏ | ❏ |
| Appropriateness of assigned materials (readings, video, etc.) to the nature and subject of the course | ❏ | ❏ | ❏ | ❏ | ❏ | ❏ |
| Timeliness in delivering required materials | ❏ | ❏ | ❏ | ❏ | ❏ | ❏ |
| Reliability of the technology(ies) used to deliver this course | ❏ | ❏ | ❏ | ❏ | ❏ | ❏ |
| Technical support’s ability to resolve technical difficulties | ❏ | ❏ | ❏ | ❏ | ❏ | ❏ |
| Availability of necessary library resources | ❏ | ❏ | ❏ | ❏ | ❏ | ❏ |
| Convenience of registration procedures | ❏ | ❏ | ❏ | ❏ | ❏ | ❏ |

|  | Strongly Agree | Somewhat Agree | Neutral | Somewhat Disagree | Strongly Disagree | Not sure |
|---|---|---|---|---|---|---|
| The course is well organized and easy to navigate. | ❏ | ❏ | ❏ | ❏ | ❏ | ❏ |
| An easy to follow schedule is posted with expected due dates. | ❏ | ❏ | ❏ | ❏ | ❏ | ❏ |
| The instructor provides timely announcements and reminders. | ❏ | ❏ | ❏ | ❏ | ❏ | ❏ |
| The instructor provides constructive feedback on assignments. | ❏ | ❏ | ❏ | ❏ | ❏ | ❏ |
| The instructor promotes a supportive online learning environment. | ❏ | ❏ | ❏ | ❏ | ❏ | ❏ |
| The instructor effectively uses various media and active learning strategies throughout the course. | ❏ | ❏ | ❏ | ❏ | ❏ | ❏ |
| The instructor effectively uses various assessment tools throughout the course. | ❏ | ❏ | ❏ | ❏ | ❏ | ❏ |

Please rate the overall quality of your online course(s) this semester.

- Excellent

- Above Average

- Average

- Below Average

- Poor

|  | Strongly Agree | Somewhat Agree | Neutral | Somewhat Disagree | Strongly Disagree | Not sure |
|---|---|---|---|---|---|---|
| I can troubleshoot my own issues when I cannot connect to the internet. | ❏ | ❏ | ❏ | ❏ | ❏ | ❏ |
| I know who to contact in the event that I have a computer issue that I cannot solve. | ❏ | ❏ | ❏ | ❏ | ❏ | ❏ |
| I can properly format a document in Microsoft Word. | ❏ | ❏ | ❏ | ❏ | ❏ | ❏ |
| I can identify file extensions for standard applications such as .doc, .xls, .pdf, .ppt, .jpg, .wav, and .mp3. | ❏ | ❏ | ❏ | ❏ | ❏ | ❏ |
| I can send e-mail with little to no issues. | ❏ | ❏ | ❏ | ❏ | ❏ | ❏ |
| I can properly attach files to e-mail messages I send. | ❏ | ❏ | ❏ | ❏ | ❏ | ❏ |
| I can find reliable sources of information on the internet. | ❏ | ❏ | ❏ | ❏ | ❏ | ❏ |
| I can efficiently search the internet for my own personal needs. | ❏ | ❏ | ❏ | ❏ | ❏ | ❏ |
| I can use social media effectively to create a positive online presence. | ❏ | ❏ | ❏ | ❏ | ❏ | ❏ |

|  | Completely true | More true than false | More false than true | Completely false | Not sure |
|---|---|---|---|---|---|
| I believe online courses are less rigorous than their face-to-face counterparts. | ❏ | ❏ | ❏ | ❏ | ❏ |
| I believe I am responsible for my own education; what I learn is ultimately my responsibility. | ❏ | ❏ | ❏ | ❏ | ❏ |
| I do not give up easily when confronted with technology-related obstacles (e.g., internet connection issues, inability to contact the instructor immediately, etc.). | ❏ | ❏ | ❏ | ❏ | ❏ |
| I am comfortable working in alternative learning environments outside of the traditional classroom (e.g., online, the library, at home). | ❏ | ❏ | ❏ | ❏ | ❏ |
| I work well in a group. For example, I am an active participant and do at least my fair share of the work. | ❏ | ❏ | ❏ | ❏ | ❏ |
| I am good at completing tasks independently. | ❏ | ❏ | ❏ | ❏ | ❏ |
| I organize my time to complete course requirements in a timely manner. | ❏ | ❏ | ❏ | ❏ | ❏ |
| I regulate and adjust my behavior to complete course requirements. | ❏ | ❏ | ❏ | ❏ | ❏ |
| I understand the main ideas and important issues of readings without guidance from my instructor. | ❏ | ❏ | ❏ | ❏ | ❏ |
| I achieve goals that I set for myself. | ❏ | ❏ | ❏ | ❏ | ❏ |

Was this course required for your degree program?

- Yes

- No

What is your overall GPA?

- 1.9 or less

- 2.0 – 2.2

- 2.3 – 2.7

- 2.8 – 3.3

- 3.4 – 4.0

What grade do you expect to earn in this course at the end of the semester?

- A

- B

- C

- D

- F

- Not sure

How many credits are you taking this semester?

- Less than 12

- 12 – 14

- 15 – 17

- 18 or more

How many hours per week on average are you working this semester?

- 0

- 1 – 10

- 11 – 20

- 21 – 30

- More than 30

How many hours per week on average are spent attending to family obligations/needs this semester?

- 0

- 1 – 10

- 11 – 20

- 21 – 30

- More than 30

What is your age?

- 18 or younger

- 19 – 20

- 21 – 22

- 23 or older

How many online courses have you taken prior to this one?

- 0

- 1

- 2

- 3 or more

Which devices do you use to access your online course? (Select ALL that apply.)

- Laptop

- Smartphone

- Tablet

- Smartwatch

- Other