6 The creation of a Teaching Excellence Framework: Defining, measuring, and facilitating teaching excellence

James Agutter; T. Adam Halstrom; and Anne Cook

Introduction

Defining what constitutes teaching excellence is a central task for faculty development, because a shared definition helps us create deep learning opportunities in our classrooms and support our students' success. However, teaching excellence is difficult to capture in a single definition, and harder still to standardize and quantify. We recognize outstanding teaching when we see and experience it: we all know the faculty members who spark curiosity, foster deep learning, and create memorable experiences for their students. Yet how do we assess something so complex, nuanced, and profoundly influential? What counts as excellent teaching varies with the institution, the field, the context, the content, and the demographics of the students in a course, and this variability significantly complicates the assessment of teaching quality.

Many colleges and universities have used Student Evaluations of Teaching (SETs) for decades to assess teaching quality. SETs were developed to gather students' insights into their learning experiences and to give instructors feedback for improving their teaching. Over time, these evaluations have taken on a much larger role, becoming central to institutional decisions about tenure and promotion and to efforts to enhance teaching practices (Carlucci et al., 2019; Ray et al., 2018). Although SET results offer some insight into what students experience in the classroom, they are limited as a measure of teaching quality.

Research has shown that SET results are often influenced by factors outside an instructor's control, such as class size, course difficulty, course content, and the instructor's gender, appearance, or accent. This raises the question of whether SETs capture meaningful feedback on the quality of teaching or mainly reflect overall student satisfaction (Abrami et al., 2007).

Given these challenges, it is important to approach the assessment and evaluation of teaching quality thoughtfully, optimizing existing measures and integrating other types of information. To capture what excellent teaching entails, we need methods that reflect its many dimensions and bring together qualitative and quantitative data from stakeholders with expertise in assessing teaching quality. SETs are a starting point, but they do not tell the whole story. For this reason, many institutions are exploring broader teaching quality frameworks and tools to enhance our understanding and assessment of teaching quality. This chapter reviews the origins and limitations of SETs, discusses examples of frameworks that institutions have developed to address these limitations and broaden the methods for assessing teaching quality, and then describes the University of Utah's specific approach.

Background

Course evaluations originated in the late 19th century, as American universities began applying scientific approaches to measuring teaching quality. These early assessments were performed by outside groups and associations and measured general teaching performance rather than gathering student feedback. Student rating scales were introduced in universities in the 1920s (Berk, 2005), and in the 1950s Purdue University was at the forefront of research on student ratings.

The Elementary and Secondary Education Act of 1965 institutionalized program evaluation, including assessments of teaching quality, which helped make SETs increasingly prevalent in higher education during the 1970s (Stufflebeam, 2001). This expansion created demand for evaluation expertise and more sophisticated methods, which in turn helped make routine program evaluation a standard way to determine whether learning objectives were being met. Interest in researching student rating instruments grew accordingly, with scholars such as Kenneth Feldman examining their reliability and validity. Feldman's research identified potential biases in student ratings: factors outside the faculty member's control, such as class size, course level, and subject matter, could affect the ratings (Feldman, 1976).

During the early 2000s, as digital systems emerged, course evaluations shifted significantly from paper to online formats (Guder & Malliaris, 2010). Online evaluations were easier to distribute and analyze, although they were potentially less reliable and even more prone to bias. While the utility of traditional SETs is well documented, they remain susceptible to gender bias and to the effects of course load and instructor appearance (Royal, 2017). Furthermore, one of the significant challenges of moving to online evaluations is their lower response rates compared with paper evaluations (Ahmad, 2018). A low response rate suggests that the target student population is underrepresented and jeopardizes the validity of the assessment (He & Freeman, 2020).

Institutional Frameworks for Teaching Evaluation

In recent years, there has been growing recognition of the limitations of traditional SETs (Uttl et al., 2017). Building on Feldman's work, subsequent research confirms that SETs are influenced by various factors unrelated to teaching quality, such as gender bias, the instructor's accent, course difficulty, and even the instructor's physical attractiveness (Benton & Cashin, 2014). Many higher education institutions are working to address these known biases by developing more comprehensive and holistic methods for evaluating teaching, and various institutions and organizations have created frameworks for assessing teaching effectiveness. Table 1 summarizes several examples, each of which is discussed in the paragraphs that follow.

Table 1.

Example Frameworks for Defining and Evaluating Teaching Excellence in Institutions of Higher Education

| Institution/Organization | Framework Name | Brief Description | URL |
|---|---|---|---|
| Association of American Colleges and Universities (AAC&U) | Teaching-Learning-Assessment (TLA) Framework | A practical, web-based tool to help campuses build capacity and lead institutional transformation to ensure students are learning. It guides campuses through a five-phase process to examine, implement, and enhance teaching, learning, and assessment practices. | |
| United Kingdom Office for Students | Teaching Excellence Framework (TEF) | The UK Teaching Excellence Framework (TEF) assesses and rewards excellence in teaching at universities and colleges, focusing on student satisfaction, retention rates, and graduate outcomes. | |
| Colorado State University | Teaching Effectiveness Framework (TEF) | Comprises seven essential, interrelated domains of effective teaching practices for face-to-face and online instruction. Includes rubrics, goal-setting processes, and teaching practices to improve student learning. | |
| University of Iowa | | Establishes that assessment of teaching should be based on at least two sources of evidence and a teaching statement. Identifies six possible forms of evidence for demonstrating teaching effectiveness. | |
| University of Kansas (TEval Project) | Benchmarks for Teaching Effectiveness | A rubric-based framework for documenting, reviewing, and evaluating university teaching. Focuses on seven dimensions of teaching and includes resources for professional development. | |
| University of Colorado Boulder (TEval Project) | | Facilitates departmental and campus-wide efforts to provide a richer evaluation of teaching. Defines teaching as a scholarly activity and focuses on seven core components of scholarly teaching. | |
| University of Massachusetts Amherst (TEval Project) | | Based on the University of Kansas Benchmark Teaching Effectiveness framework and rubric, UMass Amherst has developed their own template for evaluating teaching. | |

The Association of American Colleges and Universities (AAC&U) has developed the Teaching-Learning-Assessment (TLA) Framework, a web-based tool that helps campuses build capacity and drive institutional transformation. The TLA Framework is intended to guide campuses through a thoughtful, five-phase process focused on examining, improving, and enhancing teaching, learning, and assessment practices. Although the TLA does not specifically address measuring teaching excellence, it provides a broad framework for identifying and assessing student learning outcomes and for adopting approaches that support and enhance them. The TLA was initially piloted at 20 community colleges serving approximately 150,000 students. At Salt Lake Community College (SLCC), for example, it has been used to identify gaps in learning outcomes and to implement meaningful improvements in some programs.

The UK Teaching Excellence Framework (UKTEF) was initially developed in 2016 as part of the UK government's comprehensive overhaul of the higher education system. The framework assesses and recognizes excellence in university and college teaching. Its goals include improving teaching quality, supporting student decision-making, acknowledging institutional accomplishments, and raising the profile of teaching in higher education. Data such as student satisfaction, retention rates, and graduate employment outcomes, among others, are analyzed to form an overall judgment of teaching excellence, which is then used within the UK higher education system to evaluate and reward universities (UK Office for Students, 2023).

Although the UKTEF has prompted greater focus on teaching quality and the student experience, it has been criticized for the appropriateness of its metrics and for potential unintended consequences. Critics argue that narrowly focusing on predefined metrics may divert institutions from pursuing genuine improvements in teaching quality (Ashwin, 2017). The framework's effect on institutional reputations and the significant resources required to prepare for assessment are further criticisms of its broad use (Tomlinson et al., 2020).

Colorado State University has developed a Teaching Effectiveness Framework (TEF), which consists of seven essential interrelated domains of effective teaching practices. These domains include Inclusive Pedagogy, Curriculum/Curricular Alignment, Pedagogical Content Knowledge, Student Motivation, Instructional Strategy, Feedback and Assessment, and Classroom Climate. Like other frameworks, the TEF emphasizes using multiple evidence sources, such as instructor self-reflection, peer review of teaching, and student feedback (Colorado State University, 2024).

The University of Iowa has implemented a framework for evaluating teaching developed by the Office of the Provost and the Teaching Effectiveness Task Force (University of Iowa, 2024). This framework requires at least two sources of evidence and a teaching statement demonstrating specific qualities of effective teaching. The approach seeks to provide a more comprehensive assessment of teaching practices by acknowledging the various dimensions of an instructor’s contributions to student learning.

Finally, the TEval project is funded by the National Science Foundation (Transforming Higher Education – Multidimensional Evaluation of Teaching, 2024). Its goal is to examine how a framework can be developed and deployed across multiple campuses, namely the University of Kansas, the University of Colorado Boulder, and the University of Massachusetts Amherst. The TEval project created and assessed a framework at these institutions that gathers evidence such as self-reflection, peer evaluation, and student feedback. This triangulation, which other frameworks also use, attempts to minimize the biases associated with single-metric approaches. The resulting TEval frameworks incorporate components of effective teaching, such as clear learning objectives, a positive classroom climate, effective mentoring practices, and opportunities for reflective growth for educators and students.

TEval also features a goal-setting process in which teachers identify aspects of their teaching they wish to improve. Implementation differs across institutions. Tools such as additional rubrics and peer review protocols originated at the University of Massachusetts Amherst; the University of Kansas offers faculty development services through workshops and support systems; and the University of Colorado Boulder has adopted an incremental model built on voluntary department adoption and mentorship teams.

A review of current frameworks for evaluating teaching effectiveness reveals a growing consensus around the need for multi-dimensional, evidence-based approaches. While frameworks such as the UKTEF emphasize institutional metrics like student satisfaction and graduate outcomes, others, including Colorado State University’s TEF, the University of Iowa’s model, and the NSF-funded TEval project, prioritize a combination of self-reflection, peer review, and student input to capture teaching from multiple perspectives. The AAC&U’s TLA framework differs in focus, serving more as a planning tool to enhance institutional teaching and learning practices rather than directly measuring teaching excellence. Across these models, there is a shared movement toward recognizing the complexity of teaching and the importance of inclusive, reflective, and context-specific practices. However, differences remain in implementation: for example, while TEval emphasizes faculty goal-setting and adaptation across campuses, the UKTEF applies standardized metrics nationally, sometimes drawing criticism for its narrow scope and resource demands.

Developing the Utah Teaching Excellence Framework (UTEF)

As individuals who work in academic innovation and have administrative roles in the University of Utah’s Martha Bradley Evans Center for Teaching Excellence (MBECTE), we were tapped to lead the work on developing a teaching framework for the university. James Agutter is the Senior Associate Dean for Academic Innovation for Undergraduate Studies and an Associate Professor in the College of Architecture and Planning. Adam Halstrom is the Feedback and Innovation Systems Manager in MBECTE. Anne Cook is a Professor in Educational Psychology and the Director of MBECTE. Our center administers, manages, and analyzes SET data, and we are invested in the evaluation of teaching excellence on campus, whether for faculty development or evaluation purposes.

Like other higher education institutions, the University of Utah (U of U) has integrated teaching quality evaluation into its academic review process. These evaluations are used during Retention, Promotion, and Tenure (RPT) decisions and annual performance reviews, ensuring that teaching excellence remains a component of a broad evaluation.

The U of U's annual review process covers all categories of faculty, including tenured, tenure-track, career-line, and adjunct faculty, and these reviews consistently evaluate the instructional quality of faculty who teach. For tenure-line faculty, teaching, along with research, creative activities, and service, is a core component of RPT evaluations. Similarly, faculty who are not on the tenure line but hold single- or multi-year teaching contracts are assessed on their teaching as part of their standard review and promotion process. Each department must establish teaching standards in its evaluation criteria and guidelines for both tenure-line and teaching faculty, using various methods to capture the diverse nature of teaching. These methods include peer evaluations, self-assessments, classroom observations, evidence of students' achievement of learning outcomes, and examples of course materials curated by the faculty member. Student input is vital: course surveys and faculty responses to them are required for every review. While course evaluations provide a foundational measure, the university encourages a holistic view of teaching excellence and ongoing faculty development. Career-line, adjunct, and visiting faculty members receive regular reviews, with comprehensive evaluations at key milestones, such as the end of an initial appointment term or a promotion review. This ensures that all instructors, regardless of rank or type of appointment, participate in a continuous cycle of feedback and improvement.

In 2021, the Acting Provost established a cross-institutional Teaching Excellence Task Force to formulate best practices for assessing teaching excellence. This interdisciplinary Task Force sought to address the diverse needs of faculty, students, and administrators by creating a robust and flexible framework grounded in research, inclusive of various perspectives and disciplines. This work was informed by and connected to the work done by other institutions, as discussed in the previous section, including the development of a clear definition of teaching excellence and multiple inputs for assessing its quality. However, the U of U’s work expanded to include a specific set of digital assessment tools such as instructor self-reflection, student course feedback, and peer observation that aligned with the definition and could be integrated into a central repository for analysis by AI-assisted technologies. The Utah Teaching Excellence Framework (UTEF), developed by the task force in collaboration with the Martha Bradley Evans Center for Teaching Excellence (MBECTE) at the U of U, represents a collaborative and forward-thinking approach to defining and evaluating teaching excellence.

The first step in developing the UTEF was establishing a comprehensive definition of teaching excellence. The task force engaged in an iterative process that included the following:

1. Review of Existing Definitions: The Task Force explored definitions of teaching excellence from institutions nationwide, such as the University of Illinois Urbana-Champaign (UIUC, 2024) and the University of Southern California (USC, 2024), while reflecting on the strengths and limitations of existing studies assessing teaching effectiveness.

2. Faculty and Administrator Input: The Task Force iteratively discussed the definition with faculty and administrators from various disciplines to ensure it was adaptable to various teaching environments and pedagogical approaches. This was done through a series of meetings with colleges and academic entities, such as departments and programs on campus, and individual faculty discussions.

3. Student Perspectives: Students were surveyed using a standardized instrument to identify the teaching behaviors they associate with teaching excellence and to gather their reactions to drafts of the definition.

4. Internal Expertise: The Center for Teaching Excellence staff members provided critical feedback on the UTEF definition of teaching excellence.

Teaching Excellence Definition

The University of Utah defines teaching excellence across five thematic areas. These areas, with descriptions, inform a common framework for course-based instructional activities.

Foster Development

- Foster student development in discipline-specific language and approaches

- Model and develop mindful, ethical, inclusive, and responsible behavior in instructional environments

- Recognize power differentials between professors, instructors, graduate students, and students

- Foster students’ ability to assess learning and adjust their learning strategies

- Develop habits of professional responsibility

Promote Deep Engagement

- Create learning objectives and experiences that are challenging yet attainable

- Use rigorous content informed by theory, research, evidence, and context

- Provide materials, cases, or applications that include diverse experiences, perspectives, or populations

Incorporate Promising Teaching Practices

- Create an environment conducive to intellectual risk-taking

- Utilize relevant strategies and tools to provide students access to course materials, grades, and other feedback

- Apply multiple techniques and strategies to reach all students in an inclusive, accessible, and culturally responsive way

- Manage teaching and learning effectively: plan activities, manage time and student participation

- Use active learning strategies to promote the development of content mastery

- Foster the translation of learning and problem-solving skills to different and changing contexts

- Follow university policies and procedures regarding instructional practices and maintain course policies that are applied uniformly and fairly

Utilize Assessment Practices

- Use assessments at timely intervals throughout the course

- Provide specific, regular, and timely feedback tied to performance criteria

- Use transparent assessment processes with clear standards tied to learning objectives

- Demonstrate the effectiveness of instruction through measures of student mastery of learning objectives

Pursue Ongoing Instructional Improvement

- Utilize feedback from a variety of sources to inform teaching practices

- Reflect on practices and experiences, and integrate new knowledge

- Seek out pedagogical approaches to improve teaching practices

The definition of teaching excellence serves as a clear, shareable foundation that guided the subsequent development work, ensuring that each revised assessment tool, whether peer evaluations, instructor reflections, or student feedback, connected to and incorporated the components of the definition. In our initial analysis, we found that data were collected from the various stakeholders in different ways across campus. This variation accommodated differing teaching and pedagogical contexts but produced inconsistencies among departments and colleges in what was collected and in what was considered teaching excellence. One of UTEF's goals was to address these inconsistencies by streamlining data collection and making collection, analysis, and interpretation more straightforward and consistent.

To ease the burden on faculty and administrators of analyzing and using this rich data from multiple stakeholders, we developed an AI-powered tool, TEF-Talk, that analyzes and reports on the multi-dimensional data we gather. This secure, web-based analysis portal summarizes both qualitative and quantitative data at every level, from individual faculty members to entire departments or colleges. These summaries are structured to highlight strengths and areas for improvement in teaching across the different feedback instruments.

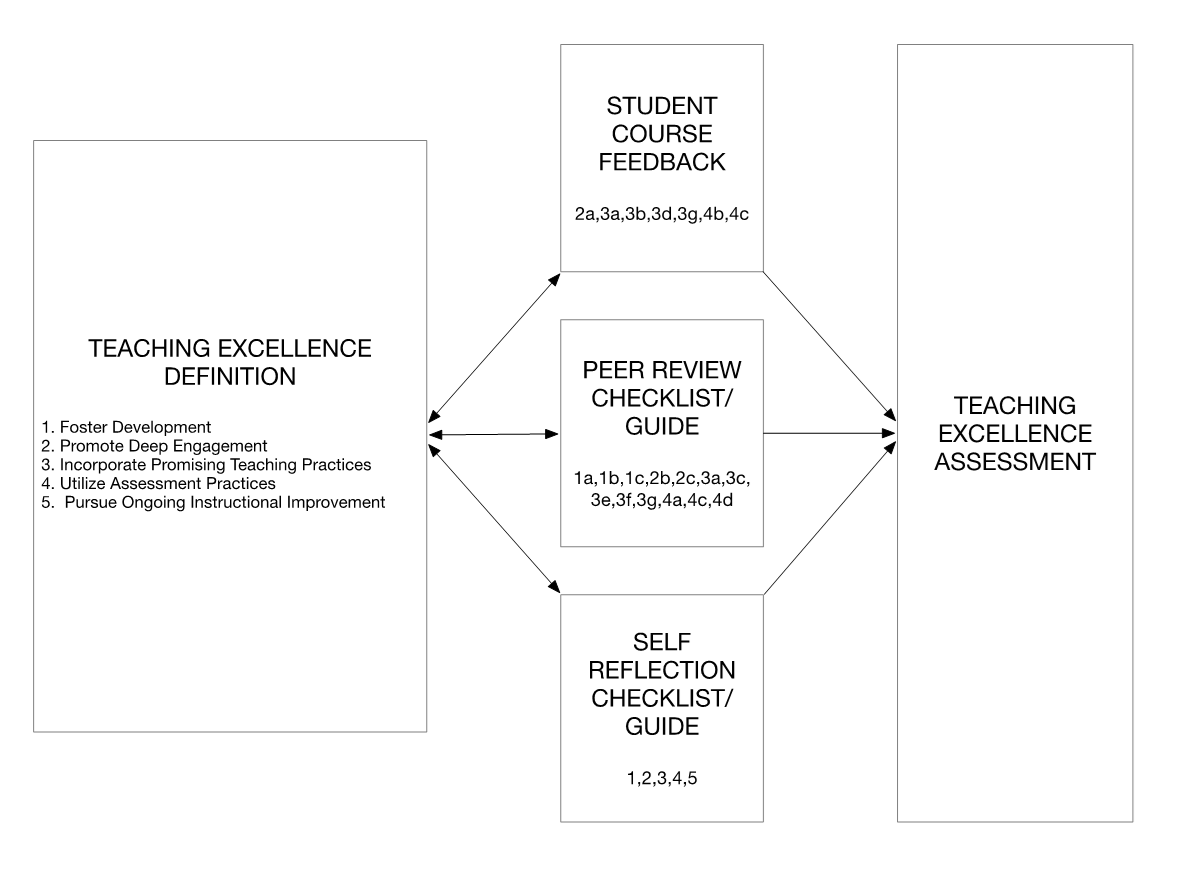

Figure 1.

The overall approach for a comprehensive teaching excellence initiative is to build on the foundation of teaching excellence with instruments, artifacts, data flows, and reports.

Figure 1 shows the overall approach of the Utah Teaching Excellence Framework (UTEF), a structured, multi-level system for addressing and assessing teaching excellence. It indicates how colleges and departments can adapt essential teaching dimensions from the definition, ensuring flexibility and relevance. It also shows how the instruments (student feedback, instructor reflections, and peer reviews) work in conjunction with artifacts such as syllabi and assignments, how data flow through the system, and how the AI-enabled reporting system produces reports for students, instructors, departments, and the university. This interconnected system offers a comprehensive and nuanced evaluation of teaching practices while permitting customization at institutional and departmental levels.

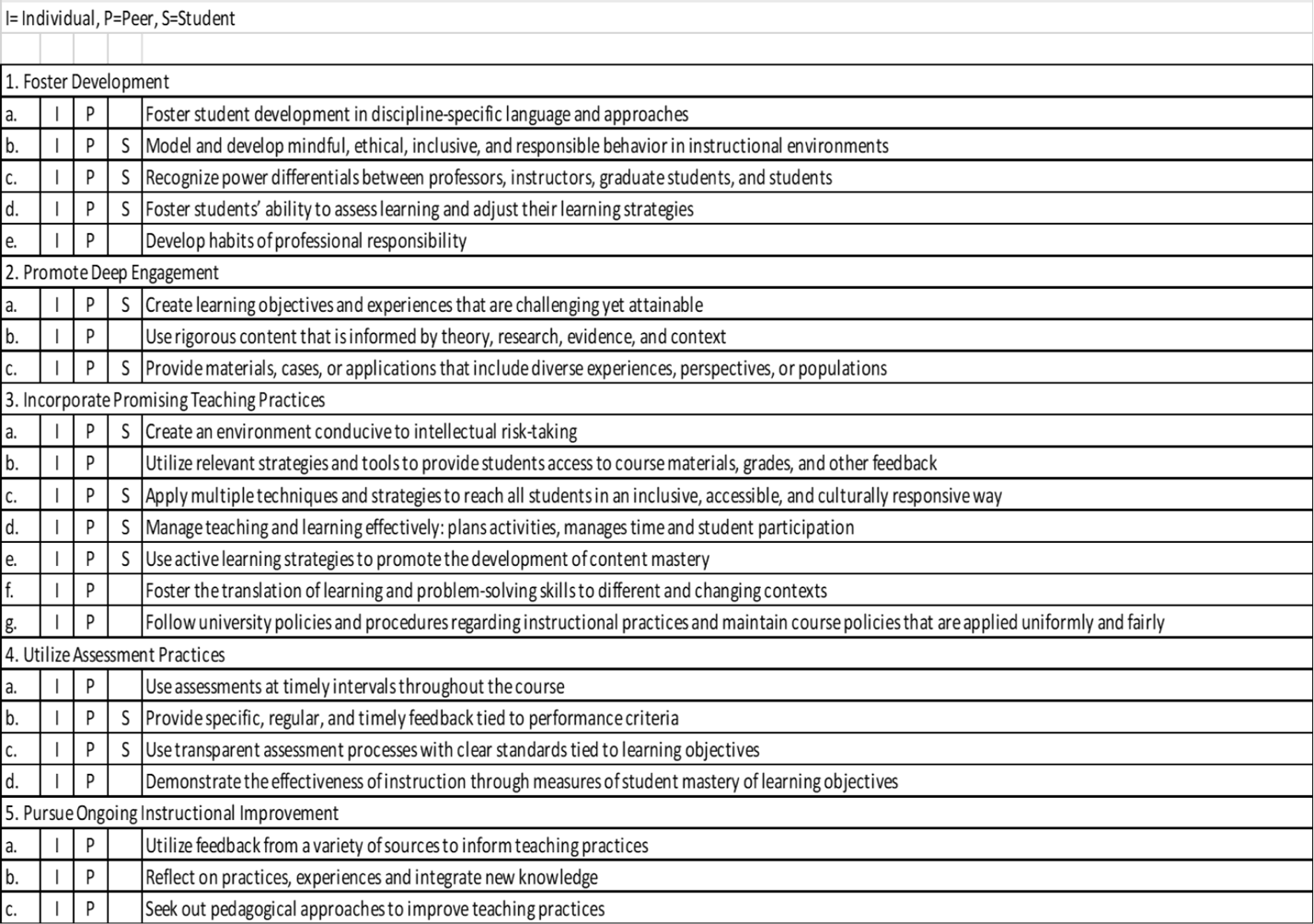

When defining teaching excellence, we recognized that not all stakeholders could evaluate each dimension. Consequently, we developed a structure to determine which stakeholder group was best equipped to assess each dimension. Table 2 outlines the dimensions of the definition by stakeholders: instructors (I), peers (P), and students (S). The alignment of various stakeholders with the dimensions of the teaching excellence definition informed the subsequent refinement of the assessment instruments for evaluating teaching excellence.

Student Course Feedback. Redesigned feedback instruments align with UTEF's definition of teaching excellence and ask only questions that students are in a position to answer. They provide student insight into teaching practices in the classroom.

Peer Reviews. Standardized templates for peer reviews were created to provide consistent and meaningful teaching assessments across disciplines from peers who are experts in domain-specific best teaching practices.

Instructor Reflections. Standardized templates were developed for faculty and instructors to document and reflect on their teaching practices, establish improvement goals, and demonstrate alignment with UTEF’s definition of excellence.

Table 2.

Stakeholder Mapping to Teaching Excellence Definition

By triangulating data from these sources, UTEF aims to provide a multidimensional perspective on teaching performance based on a broad, vetted definition and to reduce the limitations of depending on a single measure. (See Figure 2.)

Figure 2

UTEF Assessment Instruments with Definition Dimensions and Stakeholders Mapped
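The stakeholder mapping and triangulation described above can be sketched as a simple lookup structure. The Python sketch below is illustrative only: the five dimension names come from the UTEF definition, but the assignment of instructors (I), peers (P), and students (S) to each dimension is hypothetical and does not reproduce Table 2, and both the evidence format and the two-source threshold are assumptions chosen for the example.

```python
from collections import defaultdict

# Stakeholder codes as used in Table 2: instructors (I), peers (P), students (S).
STAKEHOLDERS = {
    "I": "instructor reflection",
    "P": "peer review",
    "S": "student course feedback",
}

# HYPOTHETICAL mapping for illustration only; Table 2 holds the actual assignments.
# Each UTEF dimension lists the stakeholder groups assumed able to assess it.
DIMENSION_MAP = {
    "Foster Development": {"I", "P", "S"},
    "Promote Deep Engagement": {"I", "P", "S"},
    "Incorporate Promising Teaching Practices": {"I", "P", "S"},
    "Utilize Assessment Practices": {"I", "P", "S"},
    "Pursue Ongoing Instructional Improvement": {"I", "P"},
}


def instruments_for(dimension):
    """Return the instruments expected to provide evidence on a dimension."""
    return sorted(STAKEHOLDERS[code] for code in DIMENSION_MAP[dimension])


def triangulation_gaps(evidence, minimum_sources=2):
    """Return dimensions backed by fewer than `minimum_sources` distinct sources.

    `evidence` is an iterable of (dimension, stakeholder_code) pairs, for example
    one pair per rating or comment tagged to a dimension (an assumed format).
    Evidence from a stakeholder not mapped to that dimension is ignored, mirroring
    the rule that each group is asked only about what it can judge.
    """
    sources = defaultdict(set)
    for dimension, code in evidence:
        if code in DIMENSION_MAP.get(dimension, set()):
            sources[dimension].add(code)
    # Preserve the order in which dimensions appear in the definition.
    return [d for d in DIMENSION_MAP if len(sources[d]) < minimum_sources]
```

For example, `triangulation_gaps([("Foster Development", "S"), ("Foster Development", "P")])` would flag every dimension except Foster Development, because only that dimension has evidence from two mapped sources.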

Piloting the Teaching Excellence Framework at the U of U (UTEF)

To implement our framework, we developed and piloted questions for Student Course Feedback (SCF), instructor reflection forms, and peer review tools to align them with the framework’s core principles and the different dimensions of the definition. Throughout the academic year, the SCF questions were tested in two large colleges: the College of Education and the College of Health. The instructor reflection forms and peer review tools were also piloted with faculty from these two colleges and other key stakeholders across the campus, ensuring broad representation and diverse perspectives.

Student Feedback

Assuming that students view teaching excellence as instructional behaviors likely to lead to their success, we asked 96 undergraduates to read the student-focused dimensions of UTEF's definition of teaching excellence (see Table 2) and rate each in terms of its importance to their success as a student. On a 5-point Likert scale, where 5 represented high importance and 1 represented low importance, the mean ratings for our dimensions of teaching excellence ranged from 4.8 (SD = 1.3) to 5.54 (SD = 1.02).

We also wanted to assess whether the SCF items appropriately reflected the five key areas of our definition of teaching excellence. We conducted a confirmatory factor analysis on pilot data gathered from students in all courses in the College of Health and the College of Education over three semesters to determine how well the revised items mapped onto the five UTEF components. The initial analyses indicated acceptable model fit (CFI = 0.92, TLI = 0.90), but some items loaded on multiple factors, suggesting that the SCF items need further revision and analysis. Taken together, these analyses provide preliminary support for the construct validity of the initial student instrument.
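For readers less familiar with the fit indices reported above: the CFI and TLI both compare the chi-square of the hypothesized model (here, items loading on the five UTEF components) with that of a baseline model in which the items are uncorrelated. The minimal Python sketch below implements the standard formulas; the chi-square values in the usage note are made-up numbers for illustration, not results from the UTEF pilot. Commonly cited rules of thumb treat values around 0.90 as acceptable and 0.95 or higher as good fit.

```python
def cfi(chi2_model, df_model, chi2_baseline, df_baseline):
    """Comparative Fit Index.

    CFI = 1 - max(chi2_M - df_M, 0) / max(chi2_M - df_M, chi2_B - df_B, 0)
    """
    misfit_model = max(chi2_model - df_model, 0.0)
    # max(chi2_B - df_B, misfit_model) equals the three-way max in the formula,
    # because misfit_model is already >= 0.
    misfit_baseline = max(chi2_baseline - df_baseline, misfit_model)
    if misfit_baseline == 0:
        return 1.0  # neither model shows misfit beyond its degrees of freedom
    return 1.0 - misfit_model / misfit_baseline


def tli(chi2_model, df_model, chi2_baseline, df_baseline):
    """Tucker-Lewis Index (also called the non-normed fit index, NNFI).

    TLI = (chi2_B/df_B - chi2_M/df_M) / (chi2_B/df_B - 1)
    Undefined when chi2_B / df_B == 1.
    """
    ratio_baseline = chi2_baseline / df_baseline
    ratio_model = chi2_model / df_model
    return (ratio_baseline - ratio_model) / (ratio_baseline - 1.0)
```

With hypothetical values of chi-square = 180 on 80 df for the model and chi-square = 1100 on 100 df for the baseline, `cfi(180, 80, 1100, 100)` returns 0.9 and `tli(180, 80, 1100, 100)` returns 0.875. Unlike the CFI, the TLI is not bounded to the range 0 to 1, so it can exceed 1 for models whose chi-square is smaller than their degrees of freedom.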

Instructor and Peer Review Tools

Early feedback on the instructor reflection and peer review tools has been collected to evaluate their effectiveness, and this process is ongoing to identify areas for improvement. The iterative process is intended to ensure that the instruments are robust, reliable, aligned with institutional goals, and effective in supporting teaching evaluation and professional development across the university.

Initial feedback from faculty indicates strong support for the standardized approach to conducting peer evaluations and writing instructor statements based on the framework. Faculty members appreciate its clarity and consistency, which streamline the evaluation process and support more equitable assessment across disciplines. They particularly value that the framework identifies and recognizes aspects of teaching often overlooked in SCF, such as contributions to curriculum development, engagement in teaching-related professional development, and the creation of innovative teaching tools and resources. By incorporating these elements, the framework provides a more comprehensive view of teaching excellence, which can help align evaluation practices more closely with the multifaceted nature of teaching.

TEF-Talk Platform

One of UTEF's most significant challenges, and contributions, has been its approach to analyzing the qualitative and quantitative data produced by the various instruments at scale across the institution. Most reporting tools provide static dashboards and individual reports showing only one course in one semester. Additionally, rich qualitative feedback is presented as raw quotes, requiring a faculty member or reviewer to read comments one by one and then synthesize them to find patterns and themes. The task is further complicated when data are stored in different repositories and formats.

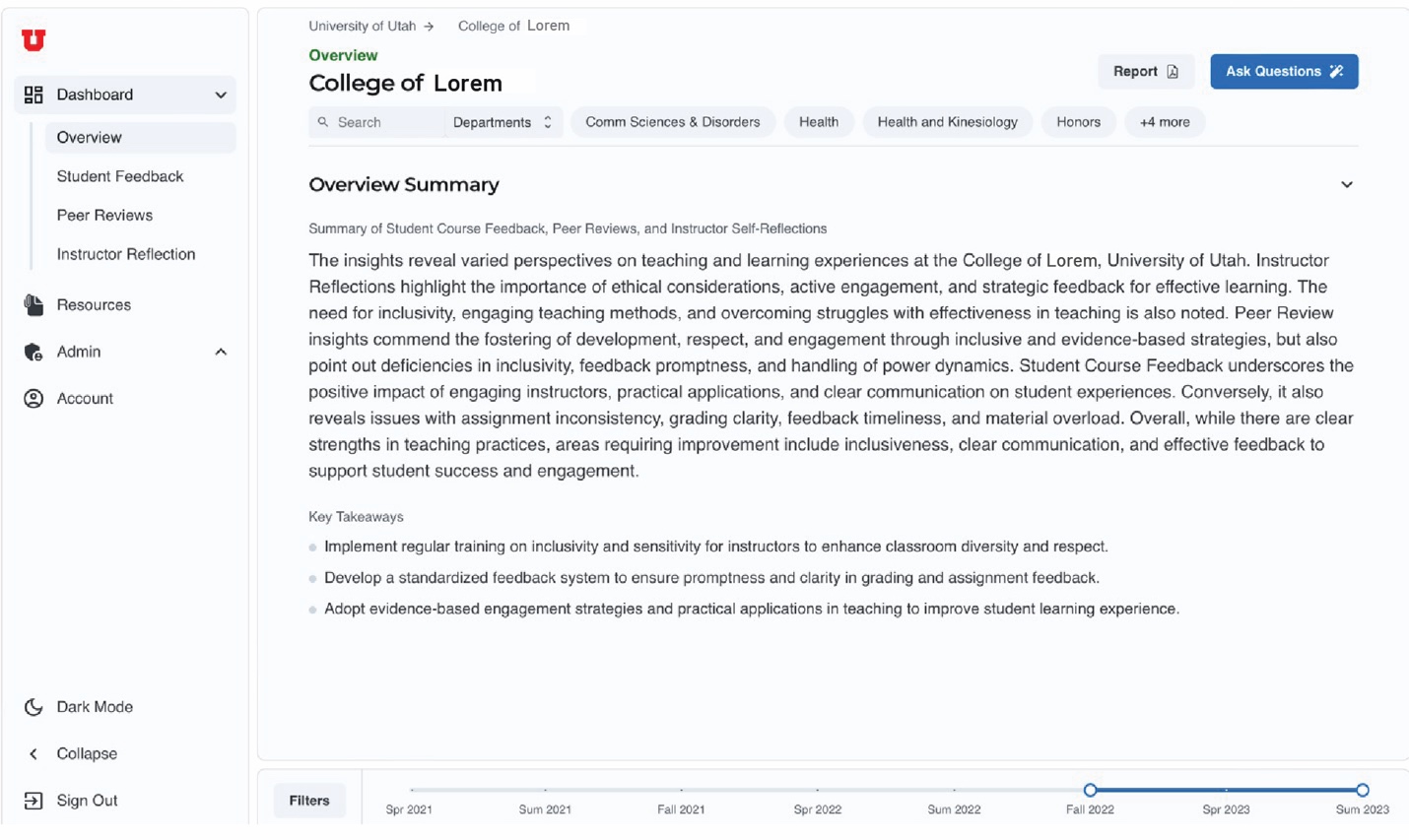

To address these weaknesses, we developed the TEF-Talk platform, which draws on the refined digital instruments described above to gather insights from students, peer reviewers, and faculty self-reflection. The platform provides a scalable, systematic, and unified approach to assessing teaching, promotes consistency across feedback sources, and improves clarity in reporting. TEF-Talk is powered by a secure generative AI engine that facilitates qualitative data analysis across the datasets produced by the different instruments. (See Figure 3.)

Figure 3

Example of overview summary across SCF, peer review, and instructor self-reflection

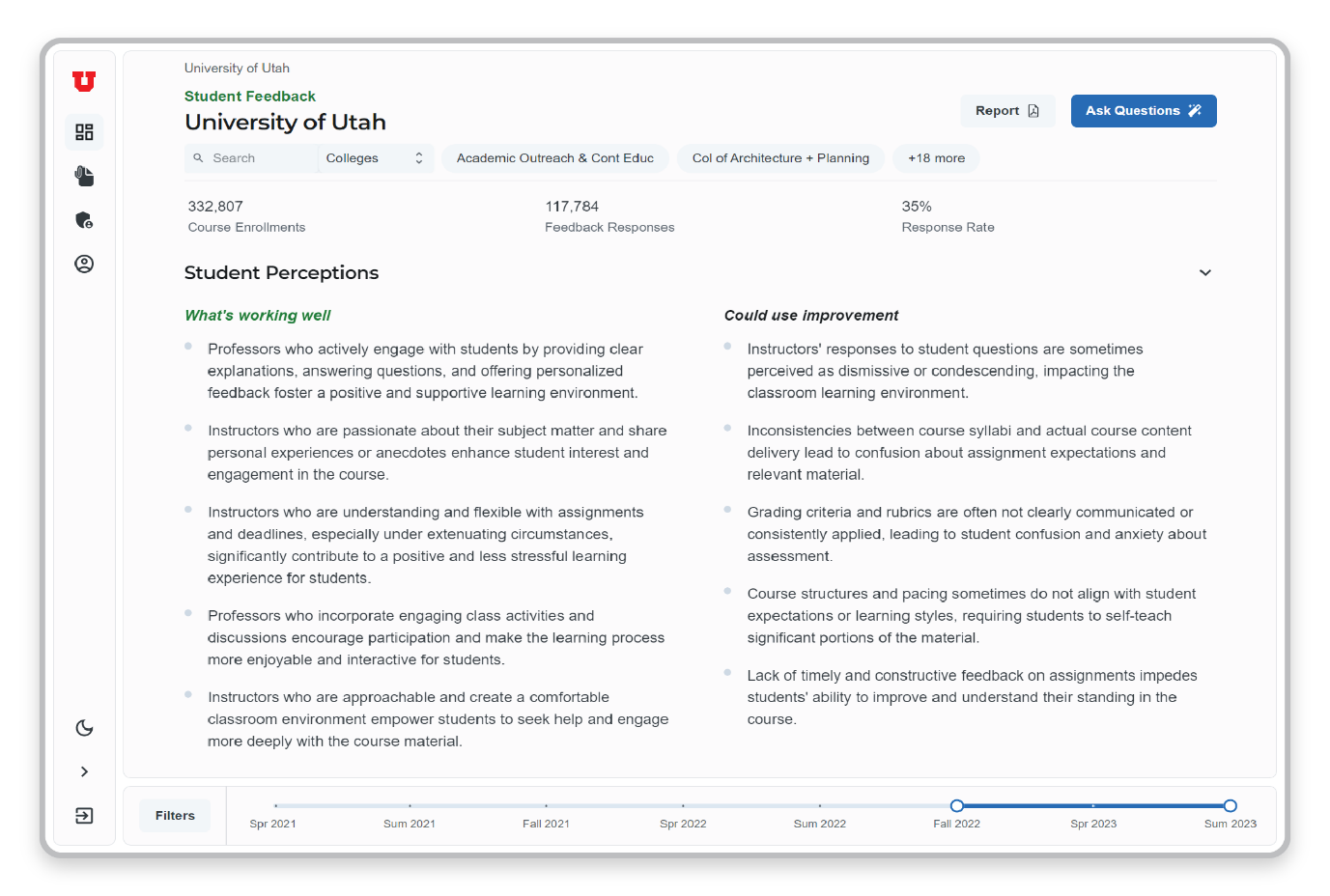

The University of Utah has collected SCF from students in all classes every semester since 2002, first on paper and later online. However, finding themes and patterns across this vast volume of open-ended responses has proven challenging, often requiring manual review of each comment. Generating insight for individual faculty in individual classes is difficult, and discerning macro trends across campus over time has been next to impossible. TEF-Talk can analyze qualitative and quantitative data at scale and over time. It integrates three distinct qualitative datasets: self-reflection, peer review, and SCF. The platform uses a spatial-reduction method for AI analysis that can synthesize, summarize, and generate insights from this large volume of data. This method enables preprocessing of the qualitative data, normalization of insights across diverse datasets, and tracing of insights back to the original comments. The most powerful feature of TEF-Talk is its flexible hierarchical navigation and data-contexting system. Its custom back-end data architecture and front-end navigation let users search and aggregate data dynamically across multiple levels of the organizational hierarchy and over time, so that effectiveness can be assessed at every level of the university, from the institution as a whole to individual faculty members. (See Figure 4.)

Figure 4

Example of TEF-Talk SCF summarization across the institution

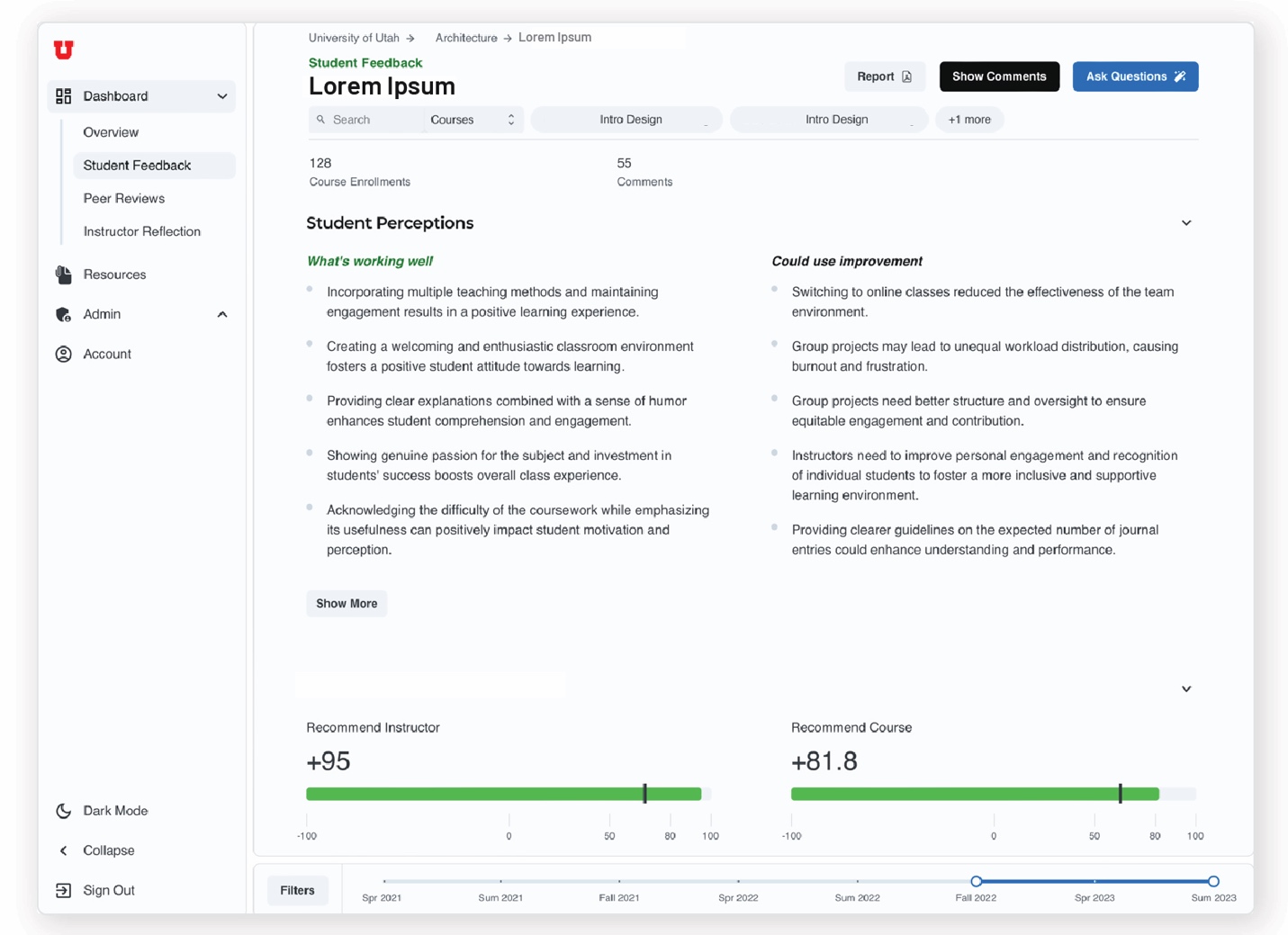

The TEF-Talk platform allows users to filter the input by time, course, faculty member, department, and/or college. Once the filters are applied, the platform presents a complex analysis in a simple, accessible form. Faculty members can view summaries of student feedback categorized into “what is working well” and “what could use improvement.” (See Figure 5.)
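The filtering step and the two-way split into “what is working well” and “what could use improvement” can be sketched in Python as follows. This is a hypothetical illustration, not TEF-Talk’s code: the `Feedback` record, its field names, the category labels, and the `apply_filters` and `split_by_category` functions are assumptions, and the sketch takes for granted that an upstream AI step has already labeled each comment. Time is modeled here as a set of academic terms.

```python
from dataclasses import dataclass

# Display headings for the two summary categories (labels are hypothetical).
HEADINGS = {
    "working_well": "What is working well",
    "needs_improvement": "What could use improvement",
}
FILTERABLE = ("college", "department", "course", "instructor")


@dataclass(frozen=True)
class Feedback:
    """A single student comment with hypothetical metadata fields."""
    term: str
    college: str
    department: str
    course: str
    instructor: str
    category: str   # "working_well" or "needs_improvement", assumed labeled upstream
    text: str


def apply_filters(records, terms=None, **fields):
    """Keep records from any of `terms` (if given) that match every field filter.

    Field filters are exact matches on college, department, course, or instructor;
    omitting a field, or passing None, means "any value".
    """
    unknown = set(fields) - set(FILTERABLE)
    if unknown:
        raise ValueError(f"unsupported filter(s): {sorted(unknown)}")
    active = {name: value for name, value in fields.items() if value is not None}
    return [
        r for r in records
        if (terms is None or r.term in terms)
        and all(getattr(r, name) == value for name, value in active.items())
    ]


def split_by_category(records):
    """Group comment text under the two summary headings, ignoring unknown labels."""
    summary = {heading: [] for heading in HEADINGS.values()}
    for r in records:
        heading = HEADINGS.get(r.category)
        if heading is not None:
            summary[heading].append(r.text)
    return summary


if __name__ == "__main__":
    # Made-up records for illustration only.
    feedback = [
        Feedback("2024-Fall", "College A", "Dept 1", "Course X", "Instructor P",
                 "working_well", "Clear lectures."),
        Feedback("2024-Fall", "College A", "Dept 1", "Course X", "Instructor P",
                 "needs_improvement", "Post slides earlier."),
        Feedback("2023-Fall", "College A", "Dept 2", "Course Y", "Instructor Q",
                 "working_well", "Helpful office hours."),
    ]
    selected = apply_filters(feedback, terms={"2024-Fall"}, instructor="Instructor P")
    for heading, comments in split_by_category(selected).items():
        print(heading, comments)
```

Keeping every filter optional mirrors the “and/or” combinations described in the text: any subset of time, course, faculty member, department, and college can be applied, and an empty filter set returns all records.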

Figure 5

Example of individual faculty summary of SCF

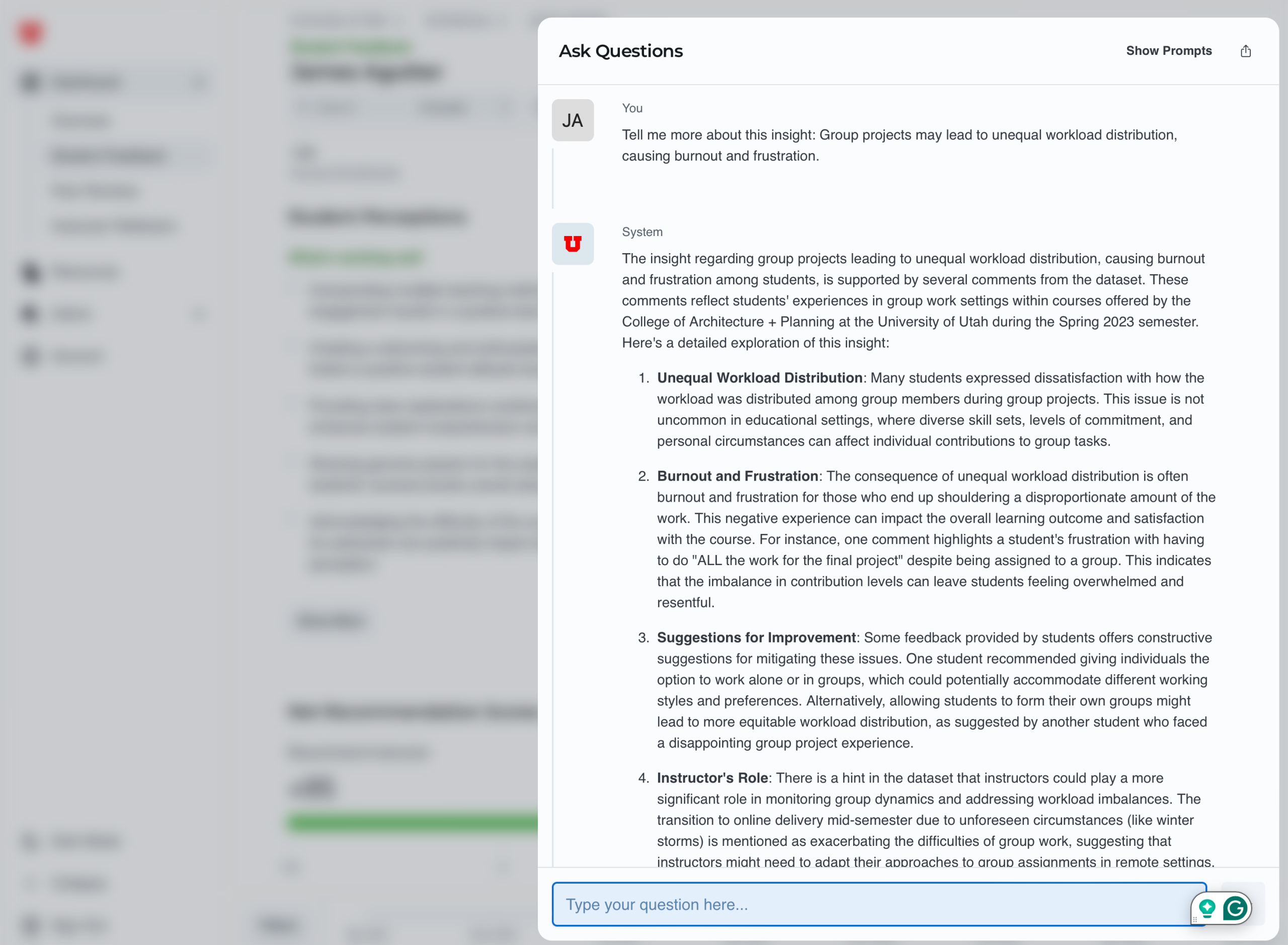

TEF-Talk is interactive: users can query the system for deeper insight, filter results, generate reports, and investigate important emergent themes. Its interactive features allow instructors to:

- Query their teaching feedback data for specific insights (e.g., “What areas do students consistently praise?” or “What could be improved?”)

- Access curated resources and best practices for targeted improvement, based on themes identified in student or peer feedback.

- Receive feedback summaries written to be actionable.

This platform represents a significant advancement in analyzing and supporting teaching practice because it draws on insights from multiple assessment instruments. By integrating data-driven insights with interactive feedback, TEF-Talk enables users to explore specific insights, view pertinent quotes, ask questions through an AI-powered chat feature, and access curated resources that address areas for improvement. (See Figure 6.) TEF-Talk also provides aggregate views that reveal broader patterns across classes and among faculty members over time, enabling deans, department heads, and administrators to identify trends in teaching effectiveness and performance among departments and colleges. At the university level, leadership can observe macro trends across all hierarchical levels.

Figure 6

Example of the question-asking portal

The patterns and trends in the data highlight strengths and areas for improvement, indicating specifically what to address. Faculty and instructors benefit from focused feedback that can guide their professional development, while administrators gain actionable insights that inform resource allocation, promotion, and assessment decisions.

We are piloting the TEF-Talk platform with administrators from diverse academic units, including the College of Science, the College of Fine Arts, and the College of Law, alongside consultants from our Center for Teaching Excellence. Feedback from these participants will help us understand their use cases and inform iterative improvements to the application. We also continue to pilot the platform with individual faculty to better understand their needs and how they use the data to enhance their teaching practices. MBECTE curriculum consultants use the tool to gain insight into large-enrollment gateway courses with high failure rates and then provide targeted support and identify areas for improvement that address issues that may affect student success.

In the long term, the U of U’s implementation of TEF-Talk aims to transform how data for assessing teaching excellence are collected, integrated, and reported. With secure and reliable AI-generated summaries, themes, and patterns, this tool can advance our broader TEF plan and make teaching excellence more prevalent at the U.

Summary and Next Steps

Building on the efforts of others who have implemented teaching frameworks, we have pursued a collaborative, multi-year process to refine, evaluate, analyze, and support teaching excellence at the University of Utah. Through the Utah Teaching Excellence Framework (UTEF), we have crafted a holistic, strategic, thoughtful, and inclusive approach that reflects the multifaceted nature of teaching. As its foundation, we developed a clear and concise definition of teaching excellence that has guided and grounded our work.

Using this definition, we refined and integrated diverse sources of evidence (student feedback, peer evaluations, and instructor reflections) into a multidimensional dataset. Central to this initiative is the TEF-Talk platform, a tool designed to streamline the analysis and reporting of qualitative and quantitative data. Leveraging AI, TEF-Talk surfaces meaningful insights at scale and over time, helping faculty, instructors, and administrators identify and respond to actionable themes that would otherwise remain buried in the data collected by the various instruments. The platform has already demonstrated its potential to bridge the gap between rich qualitative feedback and actionable outcomes, enhancing the evaluation process for everyone involved. The Martha Bradley Evans Center for Teaching Excellence uses the analyses generated by TEF-Talk to provide personalized support for individual faculty or courses while scaling up to offer broader development opportunities within departments and colleges or across the university.

As we move forward, we will concentrate on refining and expanding these tools and processes. We will continue to gather feedback from pilot participants to enhance TEF-Talk’s functionality and ensure it meets the varied needs of faculty and administrators. Additionally, we plan to increase the implementation of UTEF across more departments and colleges, using our insights to make the framework even more effective. We will also conduct a systematic analysis and performance evaluation of the tool.

The UTEF initiative illustrates our strong commitment to fostering a culture of continuous improvement in teaching. By providing clear definitions, meaningful assessments, and supportive tools, we are creating a system that values the full range of effective teaching, allowing both faculty and students to excel.

References

Abrami, P. C., d’Apollonia, S., & Rosenfield, S. (2007). The dimensionality of student ratings of instruction: What we know and what we do not. In R. P. Perry & J. C. Smart (Eds.), The scholarship of teaching and learning in higher education: An evidence-based perspective (pp. 385–456). New York: Springer.

Ahmad, T. (2018). Teaching evaluation and student response rate. PSU Research Review, 2(3), 206–211. https://doi.org/10.1108/PRR-03-2018-0008

American Association of Colleges and Universities. (2024). https://www.aacu.org/initiatives/tla-framework/analyze-revise?t

Ashwin, P. (2017). Making sense of the Teaching Excellence Framework (TEF) results. Times Higher Education. https://www.timeshighereducation.com/news/teaching-excellence-framework-tef-results-2017

Benton, S. L., & Cashin, W. E. (2014). Student ratings of instruction in college and university courses. In Higher education: Handbook of theory and research: Volume 29 (pp. 279–326). Dordrecht: Springer Netherlands.

Berk, R. A. (2005). Survey of 12 strategies to measure teaching effectiveness. International Journal of Teaching and Learning in Higher Education, 17(1), 48–62.

Carlucci, D., Renna, P., Izzo, C., & Schiuma, G. (2019). Assessing teaching performance in higher education: A framework for continuous improvement. Management Decision, 57(2), 461.

Colorado State University. (2024). https://tilt.colostate.edu/prodev/teaching-effectiveness/tef/

University of Iowa. (2024). https://provost.uiowa.edu/assessment-teaching

Feldman, K. A. (1976). The superior college teacher from the student’s view. Research in Higher Education, 5(3), 243–288.

Feldman, K. A. (2007). Identifying exemplary teachers and teaching: Evidence from student ratings. In R. P. Perry & J. C. Smart (Eds.), The scholarship of teaching and learning in higher education: An evidence-based perspective (pp. 93–143). Springer.

Guder, F., & Malliaris, M. (2010). Online and paper course evaluations. American Journal of Business Education, 3(2), 131–138.

He, J., & Freeman, L. A. (2020). Can we trust teaching evaluations when response rates are not high? Implications from a Monte Carlo simulation. Studies in Higher Education, 46(9), 1934–1948. https://doi.org/10.1080/03075079.2019.1711046

Office for Students. (2023). https://www.officeforstudents.org.uk/for-providers/quality-and-standards/aboutthetef/#:~:text=The%20Teaching%20Excellence%20Framework%20(TEF)%20is%20a%20national%20scheme%20run,positive%20outcomes%20from%20their%20studies.

Ray, B., Babb, J., & Wooten, C. A. (2018). Rethinking SETs: Returning student evaluations of teaching for a student agency. Composition Studies, (1), 34.

Royal, K. (2017). A guide for making valid interpretations of student evaluation of teaching (SET) results. Journal of Veterinary Medical Education, 44(2), 316–322.

Tomlinson, M., Enders, J., & Naidoo, R. (2020). The Teaching Excellence Framework: Symbolic violence and the measured market in higher education. Critical Studies in Education, 61(5), 627–642. https://doi.org/10.1080/17508487.2018.1553793

University of Illinois Urbana-Champaign. (2024). https://teachingeval.illinois.edu/teaching-excellence-defined/

University of Southern California – Center for Excellence in Teaching. (2024). https://cet.usc.edu/usc-excellence-in-teaching-initiative/

Transforming Higher Education – Multidimensional Evaluation of Teaching. (2024). https://teval.net

Stufflebeam, D. L. (2001). Evaluation models. New Directions for Evaluation, 89, 7–98.

Uttl, B., White, C. A., & Gonzalez, D. W. (2017). Meta-analysis of faculty’s teaching effectiveness: Student evaluation of teaching ratings and student learning are not related. Studies in Educational Evaluation, 54, 22–42.