Chapter 2: Lessons from Environmental History

We study history for a variety of reasons: for its stories of heroism, suffering, and intrigue; to find out what really happened; to see how the world that we experience in the present came into being; or to learn its lessons because, as the saying goes, those who do not know history are doomed to repeat it. Amazingly, environmental history is considered to be a new field, though it merges with older scholarly traditions in anthropology, archaeology, geography, and what was once called natural history. Environmental history is the chronicle of how human societies have been shaped by the climate, ecosystems, geography, and natural resources available to them and, in turn, have modified those environments.

Here we take a brief look at environmental history to distill from it four lessons that are essential in our study of natural resource sustainability: 1) Homo sapiens is a species capable of expanding its ecological niche, but 2) some humans have expanded their niche more than others. 3) Undermining or overshooting your ecological niche is bad news, but 4) such disasters can be avoided.

Lesson 1: Homo sapiens Is a Species Capable of Expanding Its Ecological Niche

If a biologist from another planet had taken a tour of Earth, say 40,000 years ago, she would have been awed by the splendor and diversity of life she witnessed both in the sea and on land. Enormous glaciers covered as much as 30 percent of the Earth’s land surface, including much of Europe, northern Asia, and North America, but to their south, the Earth was teeming with life. Moving her gaze beyond the stupendous flocks of birds of several thousand varieties to the largest mammals, she would have witnessed elephants, giraffes, zebras, and various antelopes roaming the African savannas while trying to avoid the lions and hyenas. Over a dozen species of whales were plying the oceans in considerable numbers. Unlike today, however, she would have seen abundant wild cattle, goats, and sheep grazing in the rich grasslands of southwest Asia and wild pigs foraging there and in the lush forests of eastern Asia. She would have noticed humpless camels and 20-foot-tall ground sloths lumbering through the diverse forests and grasslands of South America, and mammoths, mastodons, horses, and saber-toothed cats roaming right up to the edge of the glaciers in the grasslands and tundra of North America. If she were particularly thorough, she would have noticed the flightless birds of South America, of the Pacific Islands from Hawaii to New Zealand, and of Indian Ocean islands such as Mauritius, home of the dodo. She would also have noticed two species of upright ape, one a little shorter and stockier, the other taller and lighter of build, exchanging complex utterances as she approached.

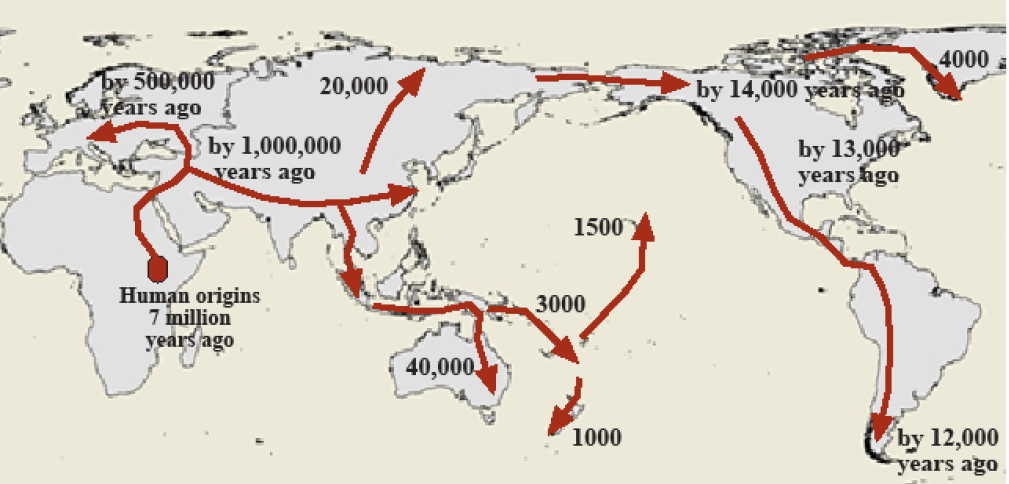

These largest of primates other than the African gorilla could be found only in small numbers, often dwelling in caves and roaming in hunting, gathering, and fishing clans of a dozen to a few hundred from East Africa across southern Eurasia and even into Australia-New Guinea. With sea level as much as 400 feet lower than it is today, she would have found this medium-sized continent separated from the large, lush peninsula of Southeast Asia by only a few narrow channels of warm seawater. Australia-New Guinea was then populated by large marsupials like the kangaroo rather than by placental mammals. These were rapidly diminishing or disappearing altogether, however, wherever she found the taller apes, which had apparently arrived recently from Asia on some kind of raft or outrigger canoe. The lower sea level also revealed the British Isles as an ice-covered peninsula of Eurasia, and North America and Asia connected by a land bridge about 1,000 miles wide called Beringia.

If her descendant were to return 30,000 years later (10,000 years ago), he would find Earth as splendid as his predecessor had reported, but he would also find that a lot had changed. Two-thirds of the glaciers had disappeared, retreating toward Antarctica, Greenland, and high mountain valleys. The associated meltwater had raised sea level by hundreds of feet, bringing the coastlines close to the world map we now know.

What would have most surprised him was that the short, stocky upright apes could no longer be found, while the taller, thinner ones had migrated wherever the ice had retreated, throughout Eurasia and even to the Americas. There, numerous bands resembling those in Siberia were working their way south through South America, feasting on large, unwary prey. Farther north, in North America, however, populations were smaller, and most of the large mammals his predecessor had observed, even the horse, were gone.

Even more surprisingly, in southwest Asia (what is now called the Middle East) and a few river valleys in eastern Asia, the tall upright apes had become truly numerous. They were constructing houses to form settlements of thousands, surrounded by arduously tended fields of wheat, barley, millet, and chickpeas, as well as herds of goats and sheep, expertly controlled in partnership with what appeared to be, based on his predecessor’s report, mutated wolves. Of all the wondrous animals—large, small, and medium-sized, four-legged and winged, herbivorous and carnivorous—that his predecessor had admired on her exploratory adventure 30,000 years before, he found it incredible that this one species of rather large, upright omnivorous ape was coming out on top in the competition for life on Earth. Humans were expanding their ecological niche.

Every living thing has an ecological niche within which it can survive and outcompete other species (and other members of its own species) for critical ecological resources. For plants, these include sunlight, water, nutrients, and a favorable temperature range. For animals, they include food, a fertile mate, and a place to hide from predators and shelter from severe weather. For example, various species of oak trees (white, red, black, pin) usually outgrow other species of plants in the central hardwood forests extending from Pennsylvania to eastern Texas, wherever: (a) rainfall is fairly plentiful year-round, (b) frost-free growing seasons are 6–10 months long, (c) soils are well-drained, (d) abundant sunlight can pass through the forest canopy to the leaves of oak saplings, (e) farmers have not removed the forest and plowed the fields or turned it into cattle pasture, and (f) squirrels are forgetful of their stashes of buried acorns.

The feisty smallmouth bass I like to catch (and release) usually outcompete other predatory fish where (a) water temperatures between 50–75°F can be found for all but the coldest months of the year, (b) waters are well-oxygenated and relatively clear to aid in spying prey, (c) gravelly stream, river, or lake bottoms provide for fertile spawning beds, and (d) small fish, amphibians, and especially crawfish are available as prey.

Malaria, caused by a microscopic parasite, is one of the most dreaded diseases in human history, with hundreds of millions of cases and more than half a million deaths each year. The parasite thrives where people and Anopheles mosquitoes live in close proximity, freezing temperatures are rare, stagnant water is common, and pesticides are not used to protect people from mosquito bites. We could similarly describe the ecological niche of other familiar species of plants, animals, and microorganisms. But what is the ecological niche of humans? How has it changed and expanded over time?

There are many ways in which humans have risen, over time, above other animals. We have spoken and written language and can therefore interact and coordinate with other individuals in ways far more complex than a wolf pack, a flock of Canada geese, or an ant colony. We can think abstractly, make plans, and, through determination and a sense of purpose, see those plans through (like writing this book!). With little effort, you can think of many more examples, but superior brains do not exempt humans from having an ecological niche.

It has been well demonstrated that our species evolved over several million years, from an ancestor we have in common with the chimpanzee, in small hunting, gathering, and fishing bands in the highland savannas of East Africa. In this ancestral homeland, temperatures are usually in the 50–90°F range; the sun shines brightly about 12 hours per day year-round; fresh water can usually be found (though with difficulty in the dry season); fruits, grass seeds (i.e., grain), and meat (dead or alive) are fairly abundant; and large predatory cats, hyenas, and venomous snakes are a very real threat, especially to children.

To this day, humans feel most comfortable in temperatures not too far from 70°F, in landscapes that combine trees, grasslands, and water, and where a menu founded on grains and liberally augmented by fruits, vegetables, and meat or fish is available. Like Tom Hanks in Cast Away, we crave the company of others and want to share this favorable environment with a household of a favored few close relatives, within a community of dozens of people we know personally and with whom we share a common language, hopes, and fears. Have you found your village?

Also held over from ancestral times in East Africa: small children are afraid of monsters that want to eat them, many people get a bit depressed in northern winters with short, cloudy days and limited outdoor activity, and nearly all of us, let’s admit it, are startled by snakes that are not safely behind glass. Most of us also enjoy gathering together around a warm fire, especially if there’s some good food to be had (or beer, though that wasn’t invented until long after our ancestors left East Africa, perhaps when someone in the ancient Middle East let the barley bag get wet and sit around for a while to ferment).

Of course, humans moved beyond the East African homeland a long time ago. Homo erectus, with a considerably smaller brain than ours, did so first, nearly two million years ago, populating the entire southern tier of Asia, which offered grasslands full of edible seeds and grazing animals and riverine and coastal fisheries that far exceeded the limited carrying capacity of the East African highlands. Much later, they slipped back west to populate southern Europe (Figure 2.1). Eurasia was likely not an easy place to live for an African species. In its subtropical (warm, wet summer; mild winter) and Mediterranean (warm, dry summer; cool, wet winter) climates, clothing made from the skins of hunted animals or from plant fibers had to be invented for use in winter, a season that doesn’t occur in equatorial East Africa. But even despite the tigers, they made it, if never in large numbers. That is, until Homo sapiens came along.

Having evolved in the original homeland of East Africa at least 200,000 years (roughly 10,000 generations) ago, this large-brained, anatomically modern hominid, essentially identical to us, found its way out of Africa across the narrow isthmus at Sinai (now cut by the Suez Canal) or the narrow strait of Bab el Mandeb at the southern end of the Red Sea about 50,000 years ago. Eurasia lay before them. As language-speaking, tool-using, observant fruit and seed gatherers, net-throwing and hook-baiting fishers, spear-wielding pack hunters, and clothes-sewing masters of fire, Homo sapiens found the habitats of Eurasia even more to their liking. They likely drove Homo erectus into extinction by 30,000 years ago and interbred with Homo neanderthalensis as they expanded their population to probably several million: no larger than the population of many other large mammals or of a single metropolis today, but a base to build upon and a cushion against extinction.

While it is obviously true that humans’ ecological success is largely due to our superior brains, humans also have other advantages that overcome the disadvantages of an uncommonly long period of childhood dependence (whether upon parents or upon the village that it takes to raise a child). Humans are not grazers like cattle, which extract the meager nutritional value of ordinary plant cellulose with the help of a large digestive chamber called a rumen, and which spend most of their waking hours eating grass or chewing their cud. Nor are we strict carnivores like the cats and many fish, whose diets are packed with protein but who teeter at the narrow top of the food chain. (Any ecosystem contains, at most, 10 percent as much animal as plant matter, as we will explore further in Chapter 4.) Rather, we are omnivores who rely on the more nutrient-dense plant foods, especially grains (that is, the human ecological niche is founded on consuming grass seeds), augmented by fruit, vegetables, and meat or fish when we can get them. Our diets therefore fall somewhere between those of two similar-sized mammals we domesticated long ago: the pig, which eats our high-energy plant-based diet but less meat, and the dog, primarily a carnivore. This dietary strategy allows us to live on a wide variety of ecological resources (Michael Pollan has called this the “omnivore’s dilemma”) without confining us to the small ecological carrying capacity of carnivores or the endless chewing of grazers.

If you’ve ever watched the Olympics, you’re probably as amazed as I am at the athletic possibilities of an upright posture on two sturdy legs, with two arms connected to amazingly dexterous hands freed from service in locomotion. Young women stand on a balance beam, jump in the air, do a back flip with a full twist, only to land cleanly back on the beam. Young men do triple somersaults only to catch the high bar on the way back down. Athletes swim at a speed faster than a vigorous walk using four different strokes.

Not even the fastest of human runners at the Olympics can sprint as fast as a horse, and my dog delights in my inability to catch him, but with only two legs, Usain Bolt of Jamaica hit a top speed of nearly 28 mph on his way to winning gold medals in 2008, 2012, and 2016. Kenyan and Ethiopian male marathoners cover 26.2 miles at an average speed of over 12 mph, and women at about 11 mph. So humans are remarkably good distance runners, and we can easily outdistance any other primate on the ground, though of course not in the trees, the price we pay for an upright posture.

Sacrificing sprinting speed relative to our four-legged friends, and branch-swinging ability relative to other primates, frees the arms and hands to do things no other animal can achieve. Even if your pet dog, cat, horse, or rabbit were as smart as you are, I can’t imagine how any of them could drive a car or sew a new shirt, cook a nice dinner, or play the violin, guitar, or piano. Nor could they swish a jump shot, pitch a baseball or hit that pitch with a wooden bat, or shoot a bow and arrow or rifle accurately. Human sight is also distinctive in the animal world: our eyes are propped up about 5 feet off the ground when we stand, and we have excellent binocular vision and therefore depth perception. Moreover, like birds and many reptiles, but unlike most mammals other than primates, we have excellent color vision (that’s right, your pet cat, dog, horse, or rabbit is partly color-blind).

Of critical ecological importance, humans armed (note the anatomical reference) with a sharp bit of stone or bone lashed to a stiff wooden stick can throw or jab this weapon accurately and with great effectiveness in hunting (or battle), especially when working in teams. The human body plan is uniquely adapted to throwing, which is critical in successful hunting. It’s a scary thought: a dozen fit, determined humans, probably mostly young adult males, executing a coordinated plan to rush at you from various directions with skillfully wielded weapons that can kill you from a distance. That’s likely what happened to the unwary marsupials of Australia-New Guinea upon the arrival of humans around 40,000 years ago, to the large mammals of North America 13,000 years ago, and to the flightless birds of the Pacific in historical times. It may even be what happened to Homo erectus and Homo neanderthalensis. The point is not that humans are vicious, though sometimes they certainly are, but that we have a potent package for winning an ecological niche for ourselves on a planet where that process is inherently competitive. We must also keep in mind, however, that “our” ability to cooperate effectively gives us a competitive advantage over “them” (whoever “us” and “them” happen to be at the time).

Lesson 2: Some Humans Have Expanded Their Ecological Niche More than Others

Jared Diamond’s Pulitzer Prize-winning 1997 nonfiction book Guns, Germs, and Steel takes the human ecological story from the origins of agriculture about 10,000 years ago to the beginning of the modern age a few hundred years ago. In teaching from Diamond’s excellent but controversial book, I have found that the title should have been “Farms, Germs, and Steel,” since this better captures his thesis, but perhaps “Guns” is more eye-catching and makes for better marketing than “Farms” on a book cover.

Some scholars have critiqued Diamond’s widely read book (note that in academia, having your work criticized is far better than having it ignored) as environmental determinist, and others as social Darwinist. The first charge means that it puts nature in command of human history rather than human ideas, institutions, values, and free will. I find, however, that the book does nothing of the sort. Diamond is a possibilist rather than a determinist: nature does not dictate human outcomes, but it does limit what can happen and what lies beyond the range of possibility. In fact, until the relatively recent rise of environmental history, historians were seriously remiss in leaving nature almost completely out of the human story, except when geography was invoked to explain military outcomes, as if there were no ecological laws that apply to humans.

The second charge of social Darwinism means that human history is viewed as a struggle for the survival of the fittest societies, like the competition among and within species. Guns, Germs, and Steel certainly does have an element of social Darwinism, and yet it seems to lead Diamond to draw many correct conclusions about why “the fates of human societies” (the subtitle of the book) have come out the way they, in fact, have. For this reason, I find it to be the most important book in environmental history written so far, though Alfred Crosby’s Ecological Imperialism drew some of the same conclusions eleven years before Diamond.

According to Diamond, the evolution of hunting into herding and of gathering into cropping, that is, the development of agriculture in what archaeologists call the Neolithic Revolution, is the central event in human history. (Agriculture is also a key characteristic of Earth in the 21st century and lies at the heart of natural resource sustainability, as we will see in later chapters.) It is what has made humans the dominant animal and some humans dominant over others. Diamond states his thesis this way:

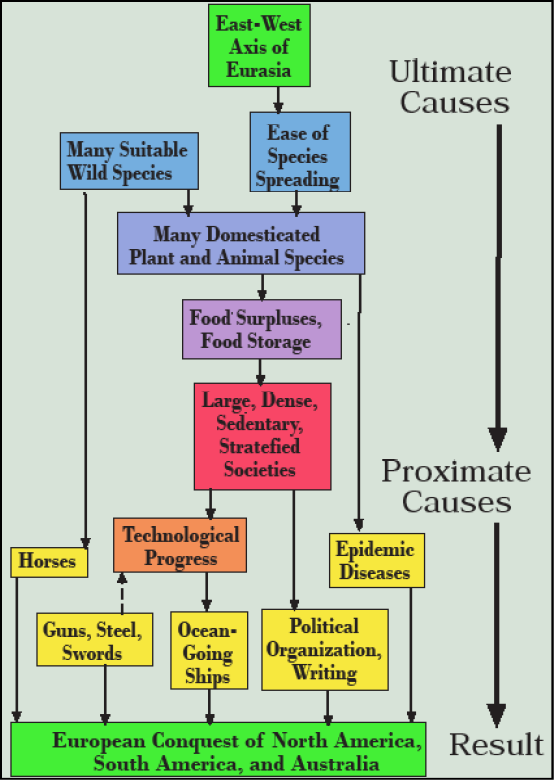

Thus, food production, and competition and diffusion between societies, led as ultimate causes, via chains of causation that differed in detail but that all involved large dense populations and sedentary living, to the proximate agents of conquest: germs, writing, technology, and centralized political organization. Because those ultimate causes developed differently on different continents, so did those agents of conquest (Diamond, 1997, p. 292).

Figure 2.2 captures Diamond’s overall argument and gives us a road map to follow through major themes of environmental history. It is important to note that earlier theories explaining the Europeanization of the Americas and Australia and the enslavement of Africans had invoked racism, that is, supposed genetic superiority. Following all available scientific evidence, Diamond rejects this notion. In fact, the African, East Asian, and Caucasian populations of humans separated less than 100,000 years ago, far too short a time to evolve significant differences that are more than skin deep. Instead, Diamond, as well as Crosby, argues convincingly that Europeans had a superior ecological inheritance to that of the Native Americans and Aboriginal Australians they conquered in recent centuries.

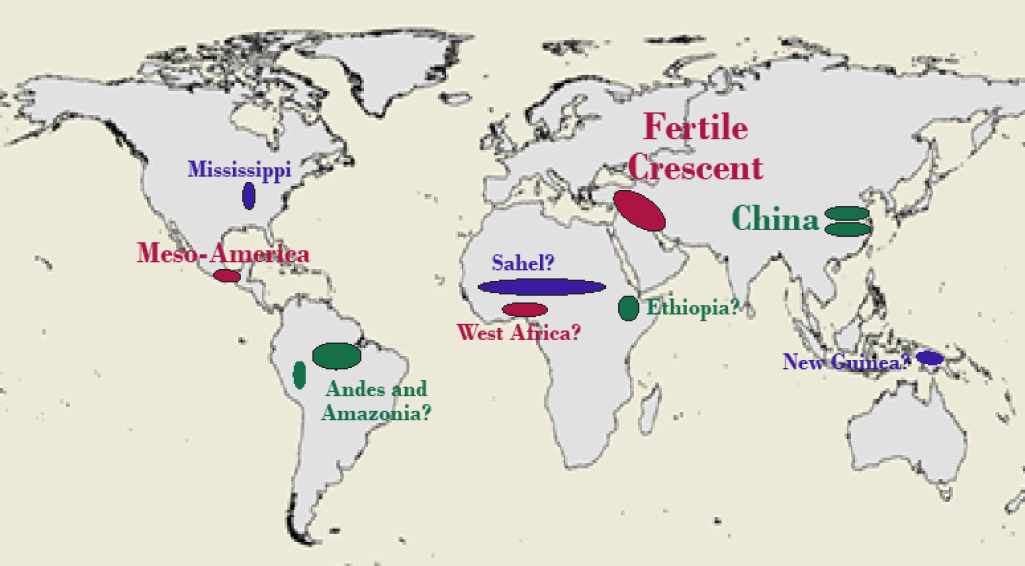

The Fertile Crescent is a wide arc of highlands east of the Mediterranean Sea in what is now Israel, Palestine, Lebanon, Jordan, Syria, and Turkey, extending into the highlands straddling Iraq and Iran. It surrounds the low-lying deserts of Syria, Jordan, and western Iraq through which flow the Tigris and Euphrates Rivers (Figure 2.3). The area spans latitudes of 32–38°N (the same span as from San Diego to San Francisco), and its physical geography and climate resemble those of California.

Hunters and gatherers there possessed an opportunity that humans in other regions lacked: a few key species of wild plants and animals that had the potential for domestication. Gatherers there discovered that two kinds of wild wheat, einkorn and emmer (emmer being the ancestor of the wheats we still use for bread and pasta), could be greatly encouraged by clearing an area of other plants and placing the seeds in the ground, especially in autumn at the beginning of the rainy season. Around the same time, hunters found that two tasty species of animals, goats and sheep, could be controlled, with the help of a dog and fertile pastures, to live according to human desires, producing milk, meat, wool, and fertilizer for the crop fields. Offspring could be selected for reproduction from among the best of the herd (see Table 2.1 for a summary of the earliest domesticated crops and livestock). Thousands of innovations followed, including:

- third and fourth species of animals: pigs and cattle (the latter could be used for heavy farm labor),

- a few new crops (olives, barley, and chickpeas),

- ways of storing last year’s crop to prevent spoilage and to tide people over a poor harvest,

- tools for more efficiently clearing areas to be planted,

- social rules for sharing labor at planting and harvest time, and the crops that labor produced,

- methods for cooking the grains and meat to make them more digestible and tasty.

The quintessential human economic activity of farming had been born and, with it, the ability to manipulate an ecosystem to produce 10-100 times as much food per acre as hunting and gathering could provide.

A thousand years or so later, agriculture also developed, probably independently, in the valley of the Wei River, a tributary of the Yellow River in what is now central China. The crops were millet, followed by water-loving rice, then soybeans, and the pig was the primary livestock, later augmented by the chicken.

Diamond argues that these two fountainheads of “western” and “eastern” civilization, respectively, were favored because these were the home ranges of the very few species of plants and animals on Earth that can be successfully domesticated for agriculture. Only four other instances where agriculture developed independently have ever been documented, and none of them has the variety of crops and animals of the Fertile Crescent or China:

- New Guinea, based on sugar cane and bananas,

- the Sahel, just south of the Sahara Desert in Africa, based on a grain called sorghum (or milo),

- the Andes Mountains and Amazonia of South America, based on the potato and another starchy root crop, manioc, along with the camel-like llama,

- Mesoamerica, based on the “three sisters” of corn, beans, and squash (Figure 2.4).

Other regions, of course, had plenty of animal and plant species, but only a very few have been successfully domesticated, even in the 21st century (fortunately, one of them is the coffee bean native to Ethiopia). To this day, five grains (wheat, rice, corn, barley, and sorghum) alone provide half of all human food calories. If soybeans, potatoes, sweet potatoes, manioc, sugar cane, sugar beet, and bananas are added, the total climbs to over 80 percent. Just five species of animals—cattle, goats, sheep, pigs, and chickens—dominate world meat production. These heartlands of agriculture, especially the Fertile Crescent and China, loom large indeed because they had the plant and animal species upon which agriculture could be founded.

Table 2.1. Earliest domestication of major agricultural crops and livestock.

| Crop species | Years ago | Geographic location |
|---|---|---|
| wheat | 10,500 | Southwest Asia |
| pea, olive | 9,500 | Southwest Asia |
| rice, millet | 9,500 | China |
| sugar cane, banana | 9,000 | New Guinea |
| sorghum | 7,000 | Sahel |
| corn, beans, squash | 5,500 | Mesoamerica |
| potato, manioc | 5,500 | Andes, Amazonia |

| Animal species | Years ago | Geographic location |
|---|---|---|
| dog | 12,000 | Southwest Asia, China |
| sheep, goat | 10,000 | Southwest Asia |
| pig | 10,000 | China, Southwest Asia |
| cow | 8,000 | Southwest Asia, India |
| chicken | 8,000 | Southeast Asia, China |
| horse | 6,000 | Ukraine |
| donkey | 6,000 | Egypt |
| water buffalo | 6,000 | China |
| llama | 5,500 | Andes |
| camel | 4,500 | Central Asia, Arabia |

Source: Diamond, 1999

In fact, the world today produces almost no food from crops or animals that were domesticated after Columbus stumbled into the Americas in 1492. Take the typical Midwestern American farm. The three most important crops are corn, first developed 5,500 years ago in Mesoamerica (today’s southern Mexico and Guatemala); the soybean, first developed in China even earlier; and wheat (Fertile Crescent, 10,000 years ago). The primary livestock are pigs (China and the Fertile Crescent, 10,000 years ago) and cattle (Fertile Crescent, 8,000 years ago), though I hear that goat meat and milk (Fertile Crescent, 10,000 years ago) are suddenly popular, as if they were something new. They were all brought to the fertile Midwest by people who had inherited the agricultural traditions of these heartlands of civilization, with crops and livestock improved over hundreds to thousands of generations of artificial selection: breeding from the best seeds and sires, with “best” defined by what the farmer wants.

The Fertile Crescent constellation of crops and livestock came to Egypt and India, adjacent to the Middle East, about 9,000 years ago, and from east to west across Europe from 8,000 to 6,000 years ago. Chinese-style agriculture spread to Korea and Japan about 8,000 years ago. Note that these vast lands are all contiguous and lie in the northern subtropics (about 23–35°N latitude) to midlatitudes (about 35–50°N latitude) where the Fertile Crescent and Chinese crops and livestock were originally found wild. In this way, Eurasia jumped way ahead of other world regions in developing an expanded human ecological niche, and therefore much larger populations, based on agriculture.

This did come with a terrible price, however. If you live close to cows and pigs, dogs and ducks, you will eventually catch their diseases (Table 2.2). Smallpox, measles, tuberculosis (all from cattle), and influenza (from ducks and pigs) raged through the ancient world killing untold millions of people.

Table 2.2. Animal origins of some major human diseases.

| Human disease | Animal source |
|---|---|
| measles | cattle |
| tuberculosis | cattle |
| smallpox | cattle |
| flu | pigs, ducks |
| pertussis | pigs, dogs |

Source: Diamond, 1999

Generation by generation, however, those whose genes gave them an immune system that better withstood these onslaughts were more likely to survive and pass on those traits to later generations. The intermixing of peoples across Eurasia also provided a degree of genetic variation, missing in the Americas as we will see, that improved the chances that at least some of the population would be resistant to any one epidemic.

With this great increase in human population came the potential for technological innovation (e.g., pottery, metallurgy), along with the necessity of maintaining the agricultural enterprise. The replacement of a nomadic life by sedentary, fixed settlements allowed people to build permanent structures. A division of labor and social stratification followed. Written language and number systems were first developed to keep track of who had paid their tribute in crops and livestock to the dominant military classes.

One innovation, metallurgy, was particularly potent because it allowed first copper (which is very easily fashioned into useful shapes) and later iron (which is more difficult to work but very abundant) to be used for everything from cooking knives and saws to plows and nails, to swords (and armor against them). Together with the domestication of the horse (first in what is now Ukraine about 6,000 years ago), metal swords gave agricultural societies a huge military advantage over hunting and gathering societies. Even more potent were the diseases that agriculturalists had long shared with their livestock but to which hunters and gatherers had never been exposed. The result was that every region with good agricultural potential was taken over by farmers, pushing hunters and gatherers to the margins of deserts, tropical forests, mountains, and the Arctic, where the climate and soils needed for agriculture to thrive are lacking.

Both Diamond and Crosby use this reasoning to explain why European explorers and conquistadors in the 15th–18th centuries were able to conquer all but the most tropical and most arctic parts of three continents (South America, North America, and Australia) despite being outnumbered and sacrificing the home field advantage. (We’ll leave it to others to explain why they were motivated to do so.) They had an insurmountable military advantage based on steel weapons (swords and later guns), wooden sailing ships, and a powerful, fast, and compliant military vehicle known as a horse. They had written languages and national-level military organization to supply and coordinate their armies.

Most of all, they had germs. The arrival of Europeans was a catastrophe for Native Americans, and the greatest killer of all was not guns or steel swords—it was smallpox.

Its effects are terrifying: the fever and pain; the swift appearance of pustules that sometimes destroy the skin and transform the victim into a gory horror; the astounding death rates, up to one-fourth, one-half, or more with the worst strains. The healthy flee, leaving the ill behind to face certain death, and often taking the disease along with them. The incubation period for smallpox is ten to fourteen days, long enough for the ephemerally healthy carrier to flee for long distances on foot, by canoe, or, later, horseback to people who know nothing of the threat he represents, and there to infect them and inspire others newly charged with the virus to flee to infect new innocents (Crosby 1986, p. 201).

Unfortunately, smallpox did not act alone. Measles, diphtheria, whooping cough, chicken pox, bubonic plague, malaria, typhoid fever, cholera, yellow fever, scarlet fever, influenza, and other Old World diseases found virgin ground among the Native Americans. Together, they are estimated to have killed as much as 95 percent of the population, reducing the North American population from 20 million or more in 1500 to only about one million in 1700, in what can only be described as a holocaust. All those sci-fi movies you’ve seen about alien invaders and runaway plagues? To the Native Americans, that’s what actually happened, though the greater part of it was, in fact, inadvertent, accounts of whites giving smallpox-laden blankets to Indians as gifts notwithstanding.

By 1800, Europeans were the majority population on all three “new world” continents, and the Fertile Crescent agricultural package controlled these vast Neo-Europes, as Crosby calls them. Adding immeasurably to the Europeans’ advantage, Eurasian plants and animals often outcompete North American species ecologically, and nearly always outcompete South American and Australian species, which have evolved largely in isolation from Eurasia since the dinosaurs went extinct 65 million years ago. Razorbacks and wild boars (domesticated pigs gone wild) exploded in population to become the dominant animals of North American and Australian forests, as they earlier had on the islands of the Atlantic and Pacific. Wild cattle and horses similarly thrived in North America and Australia and exploded across the grasslands of the South American pampas. Eurasian rats and many species of fast-spreading plants also found these three continents much to their liking. In Australia, rabbits multiplied into the billions in the absence of enough predators to keep them in check. Many of the invasive species that North American ecologists struggle to control are of Eurasian origin, from the zebra mussel to kudzu. This is what Alfred Crosby calls ecological imperialism in his wonderfully written 1986 book, Ecological Imperialism: The Biological Expansion of Europe.

While Europeans have expanded their ecological niche over the greatest geographical extent, Asians and Africans who developed agriculture several thousand years ago have also expanded their range. The Austronesian expansion originating in southern China brought Asian languages, crops, and livestock to every habitable scrap of land in the Pacific. It reached as far east as Easter Island in the Western Hemisphere and as far south as New Zealand’s cool marine climate, where the Maori first landed about 1,000 years ago. The Bantu of West Africa, who first domesticated sorghum some 7,000 years ago, similarly spread across that vast continent at the expense of hunters and gatherers, reaching South Africa just ahead of the Dutch and English colonists. These medium-scale examples reinforce the Crosby-Diamond thesis that ecological niche expansion, through agricultural development and the conquest of hunter-gatherers by agriculturalists, has been a central theme in human environmental history. The theme also looms large in a 21st-century world where people of European descent are in the majority and European institutions dominate not only in Europe, but also in North and South America and Australia.

Lesson 3: Undermining or Overshooting Your Ecological Niche Is Bad News

The story thus far has been one of overall human ecological success, especially for the inheritors of the Neolithic Revolution. Environmental history is also littered with failures, however, some of them locally catastrophic. Again, Jared Diamond’s work is fundamental. In his 2005 book Collapse, he chronicles a number of cases where human societies became locally overpopulated, undermined their natural resource base, failed to address the issue, and fell apart in a downward spiral of starvation and violent conflict.

Perhaps the archetypal example is Easter Island, an isolated island of 66 square miles situated 2,300 miles west of Chile in the southeast Pacific and 1,300 miles from any other inhabitable island. Easter Island, famous among archaeologists for its giant stone statues, was first settled by Polynesians. Originating in Asia as part of the Austronesian expansion, the Polynesians populated the entire tropical Pacific by following birds to new islands in outrigger canoes. Their cargo included bananas, taro, sweet potato, and sugarcane as seed crops and pigs as seed livestock.

At first the settlers thrived on Easter Island. However, as their population grew to an unsustainable 15,000–30,000, they deforested the island completely, cutting down the very last tree to expand crop production. In doing so, they undermined those same agricultural efforts through soil erosion. They also deprived themselves of the wood needed for fishing canoes, and with it their only means of escape. For this reason, Easter Island serves as a chilling metaphor for the environmental Armageddon that inspires doomsday films and science fiction books.

The Easter Islanders’ isolation probably explains why I have found that their collapse . . . haunts my readers and students. The parallels between Easter Island and the whole modern world are chillingly obvious. Thanks to globalization . . . all countries on Earth today share resources and affect each other, just as did Easter’s dozen clans. Polynesian Easter Island was as isolated in the Pacific Ocean as the Earth is today in space. When the Easter Islanders got into difficulties, there was nowhere to which they could flee, nor to which they could turn for help; nor shall we modern Earthlings have recourse elsewhere if our troubles increase. Those are the reasons why people see the collapse of Easter Island society as a metaphor, a worst-case scenario, for what may lie ahead of us in our own future (Diamond 2005, p. 119).

When European explorers first reached Easter Island in 1722, all 21 tree species had been extirpated (driven to local extinction). By 1872 only 111 islanders remained, less than one percent of the population at the time the statues were raised.

Easter Island is perhaps the purest recorded case of a human population overshooting its local carrying capacity and collapsing socially. Unfortunately, as we will see, it is not the only one. From his study of these cases, Professor Diamond distills five key factors that contribute to environmental collapse:

- environmental damage, especially of ecological resources critical to agriculture,

- climate change, especially drought,

- the encroachment of hostile neighbors that increase pressure and usurp resources,

- the loss of friendly trading partners that had fulfilled essential needs, and

- an inadequate or ineffective social response to these challenges.

Each of these factors comes into play to different degrees in different times and places. On isolated Easter Island, for example, environmental damage and an inadequate social response (the first and fifth factors) were the primary drivers.

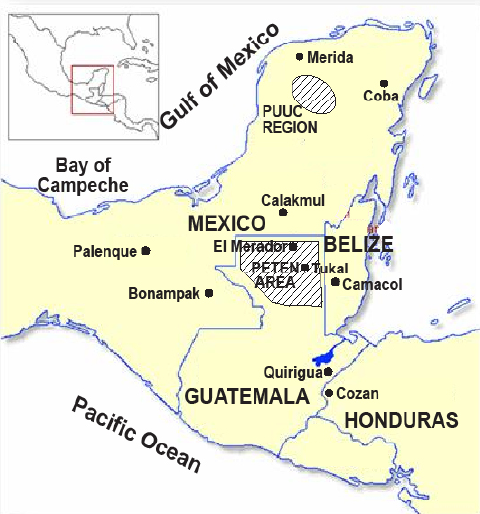

While Easter Island represents a microcosm, the Maya are a mesocosm of Diamond’s five factors (the prefix meso means medium-sized: larger than micro, smaller than mega). The Maya, perhaps the pinnacle of pre-Columbian civilization, thrived in the Mesoamerican agricultural heartland, straddling what is now southern Mexico, Belize, and Guatemala (Figure 2.5). Their civilization reached its zenith of temple building, hieroglyphic writing, astronomy, and calendar systems in the 8th century, when its population is estimated to have been 3–14 million. When the Spanish conquistadors arrived in the 16th century, however, it was the Aztecs of the Valley of Mexico, not the Maya to the south, who represented the height of Native American civilization. The Maya population had been reduced to only about 30,000, no more than one percent of its peak 800 years earlier. The pyramidal temples of Copan, Tikal, and Chichen Itza, which you can still visit today, were overgrown with tropical vegetation.

What happened to the Maya is likely another example of overshoot and collapse. As the population of this hierarchical agricultural society grew, the Maya removed the forest to expand corn and bean farming from the fertile valley bottoms onto the steep valley slopes. There were some attempts at terracing and raised fields. Even so, soil erosion not only undermined crop productivity on the hills but also filled the valleys with infertile sand. Then came repeated drought and crop failures. Environmental refugees fled the southern regions for the Yucatan, but that drier region could not accommodate them. Leadership fractured. Instead of efforts to reduce human fertility, augment food supplies, and conserve soil and forests, the bow and arrow was invented, multiplying the human cost of conflict among clans and tribes. We can hypothesize that a similar overshoot and collapse occurred about 10,000 years ago. At that time the first wave of Native Americans prospered and multiplied in virgin hunting grounds, only to be reduced by starvation when the big-game herds they depended on were decimated.

We have seen how the Neolithic Revolution agricultural package spread from the Middle East to India and Europe and from there to the Americas and Australia. Meanwhile, back in its original homeland, problems similar to those the Maya faced were grinding away. Arid (dry) environments are inherently more fragile than humid (rainy) ones, and the soil has a long memory. The Middle East and North Africa have always been short on rainfall, but much of their desert is a human creation. It was born of centuries of overgrazing and of salt accumulating in soils through mismanaged irrigation. Soil erosion on the hillsides of the Mediterranean lands was a contributing factor in the fall of Rome. The slow historical rise of northern and western Europe over the Middle East and Mediterranean is partly due to the greater resilience of soils in Europe’s cool, moist climate. It also owes much to the careful management of those soils through traditional animal husbandry, which places livestock, crops, and soil in a mutually beneficial, and thus sustainable, relationship: crops feed the animals, whose manure renews the soil to nurture the growth of new crops.

Rather than tell that very long story in depth, let us turn our attention to two more recent examples of environmental collapse—the Dust Bowl of the 1930s in the American Great Plains and the ongoing demise of the Aral Sea in Central Asia.

America’s Greatest Environmental Collapse: The Dust Bowl

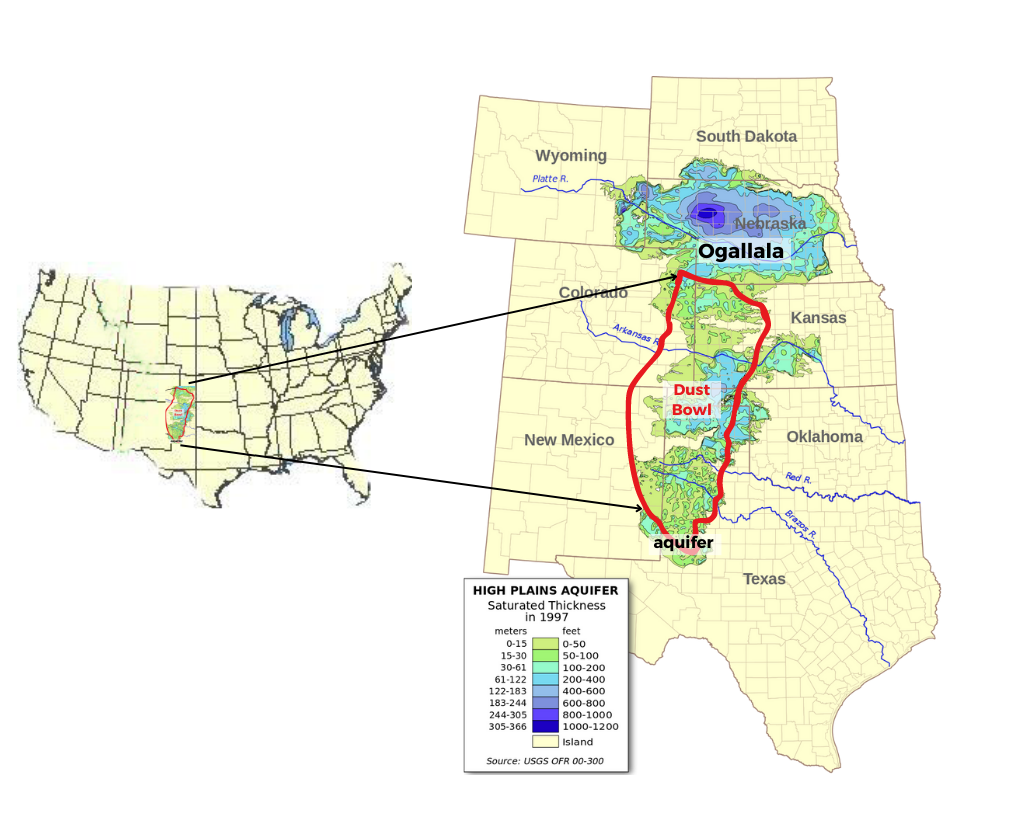

Zebulon Pike explored the southern part of the Louisiana Purchase following Lewis and Clark’s more famous expedition. He is remembered not only in the name of Pikes Peak in the Front Range of Colorado but also for portraying what we would now call the southern Great Plains (Figure 2.6) as the Great American Desert. Stretching from north Texas to southwestern Nebraska, the semiarid southern plains offered a thin veneer of fertile soils protected by a sea of grass but almost completely lacked both trees and streams. “Miles to water, miles to wood, and only six inches to hell” (Egan 2006, p. 26) was the 19th-century description of a land still controlled by the Comanche and Apache tribes, whose fabled skills on horseback were honed hunting bison with bow and arrow. Manifest destiny, however, would not leave this land to the Native Americans. The buffalo were exterminated to starve out the Comanche, who were then rounded up and sent to the short-lived reservation that was soon to become Oklahoma. Cattle ranchers followed.

World War I (1914–1918) and the “Roaring Twenties” brought uncommonly generous rains to the southern plains, along with the belief that rain follows the plow. The regional economy boomed on plentiful wheat harvests as America gained its first experience as the world’s greatest food exporter. Southern plains farmers got rich, but the grass disappeared under the plow. Its tangled roots and dense carpet had protected the fragile soil from the relentless wind and from torrential rains followed by extended drought. Inevitably, beginning in 1932, the rains failed, and with them the crops of winter wheat that farmers plant in the fall and harvest in early summer. Exposed to the wind, the dry, unprotected soils blew away in a process known simply as wind erosion and, in the extreme form the southern plains would witness, desertification. Soil blew into drifts against fences and houses, forming dunes that smothered even the lands that had kept their grass cover. Dust blew into the stomachs of cattle and the eyes of chickens. Horses panicked. Only the birds could fly fast enough to outrun the dust storms.

Humans could not, even in the Model A and Model T Fords they had purchased with their wheat profits. Drifts blocked the roads, dust choked the carburetors, and visibility fell to a few yards. Static electricity could short out the engines or knock people to the ground if they touched the car or shook hands. In 1933 there were 70 days of dust storms; in 1934 there were 54, but things were not getting better. In the first four months of 1935, there were 117. The grasslands, crops, and soil fertility were being destroyed. So too was the most essential service nature provides, breathable air, which was being sacrificed to the relentlessly penetrating dust. Roosevelt’s New Deal set up emergency hospitals in school gyms, and they filled with patients suffering from “dust pneumonia.” Their lungs were hopelessly damaged by abrasive dust that worked its way through walls, windows, doors, wetted sheets, and surgical masks into the air sacs of its victims. Dust blocked the sun and settled on windowsills, doorways, cars, and carpets in Chicago, Boston, New York, and the capital, Washington, DC. The heat would not relent and the rains would not come, no matter how much money people wasted on conmen’s empty promises to coax water from the cloudless skies.

The High Plains lay in ruins. From Kansas through No Man’s Land (the Oklahoma panhandle) up into Colorado, over in Union County, New Mexico, and south into the Llano Estacado of Texas, the soil blew up from the ground or rained down from above. There was no color to the land, no crops, in what was the worst growing season anyone had seen. Some farmers had grown spindles of dwarfed wheat and corn, but it was not worth the effort to harvest it. The same Texas Panhandle that had produced six million bushels of wheat just two years ago now gave up just a few truckloads of grain. In one county, 90 percent of the chickens died; the dust had gotten into their systems, choking them or clogging their digestive tracts. Milk cows went dry. Cattle starved or dropped dead from what veterinarians called “dust fever.” A reporter toured Cimarron County, OK and found not one blade of grass or wheat (Egan 2006, p. 140-41).

Then, on April 14, 1935, came Black Sunday. It was the fourth year of drouth (the local pronunciation of “drought”), with farmers bankrupt and hunger and poor health rampant. As a spring cold front bore down on the plains from the Dakotas, a purple-black wall of 300,000 tons of dust raced southward at speeds exceeding 40 mph. Anyone caught outdoors was unlikely to survive.

The Great Plains lost 850 million tons of soil that year, 480 tons per acre, 100 times more than what is considered a soil erosion “problem.” Soil scientist and master politician Hugh Hammond Bennett made his name on the Dust Bowl, arguing that it was a disaster authored by unsustainable farming techniques. He chose his moment well. Bennett asked Congress for funds to remedy the agony in the southern plains on April 19, 1935, just as the dust clouds from Black Sunday were settling on Washington. As the sunny day turned to gloom outside the windows of the U.S. Capitol, he spoke to Congress of soil conservation techniques and the need for the federal government to fund them. The Soil Conservation Service (now the Natural Resources Conservation Service) was born.

Most people stayed the course, but a million people left the Great Plains in the 1930s. These environmental refugees at least had a large country to absorb them, if not on favorable terms. John Steinbeck told their story in The Grapes of Wrath, his novel of “Okies” fleeing to California. Dust storms continued (134 in 1937). When a few rains finally grew some grass, swarms of billions of grasshoppers or tens of thousands of rabbits consumed it all. Nature had lost all balance.

Rains returned in the 1940s and the land partly recovered, thanks largely to the Soil Conservation Districts that Bennett’s work had helped form and which still thrive today. Since 1986, the Conservation Reserve Program has paid farmers to keep permanent grass cover on the most highly erodible lands. On better lands, a new resource is being tapped, and again unsustainably: groundwater.

The Ogallala aquifer (Figure 2.6) underlies nearly the same territory as the Dust Bowl. It is an enormous resource of approximately one quadrillion (10¹⁵) gallons that supplies a third of all irrigation water in the U.S. With this water subsidy, southern plains farmers have been able to grow not only wheat but also the more profitable and water-demanding corn, soybeans, and cotton. Because irrigation has been pursued so vigorously, however, this resource too is failing. Water tables have fallen throughout the Ogallala south of Nebraska, as the aquifer is depleted at a stupendous rate of over 3 trillion (3 × 10¹²) gallons per year. Some farmers have found that pumping water from ever-deeper wells costs more than it earns in increased crop yields, and they have reverted to dry farming or cattle ranching. Another unsustainable southern plains crop boom is currently dissolving.
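A back-of-the-envelope ratio shows what these two figures mean together. The calculation below is only a sketch resting on assumptions of our own. It treats the whole aquifer as a single tank, ignores recharge, and assumes all of the stored water could be pumped at the current rate. The symbols V (stored volume) and D (annual depletion) are introduced here for illustration.

$$
t \approx \frac{V}{D} = \frac{1 \times 10^{15}\ \text{gal}}{3 \times 10^{12}\ \text{gal/yr}} \approx 330\ \text{years}
$$

Because depletion runs at over 3 trillion gallons per year, the figure would be shorter still even under these assumptions. More importantly, the aggregate number hides the regional picture described above. South of Nebraska, falling water tables make pumping uneconomic for many farmers long before the aquifer as a whole is exhausted.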

Finally, there is the relentless wind itself. The former Dust Bowl today boasts the largest fleet of wind turbines in North America, with Texas ranking first, Oklahoma third, and Kansas fifth among U.S. states as of 2020. Unlike the fragile soils and the depletable fossil waters of the Ogallala, wind is inexhaustible. No matter how thoroughly it is utilized today, tomorrow’s supply cannot be diminished. Perhaps the southern plains will have finally found a sustainable utilization of natural resources by delivering renewable electricity to the transmission grid that serves the great cities of Texas and neighboring states. Even for the Dust Bowl, it is never too late to change to a more sustainable course.

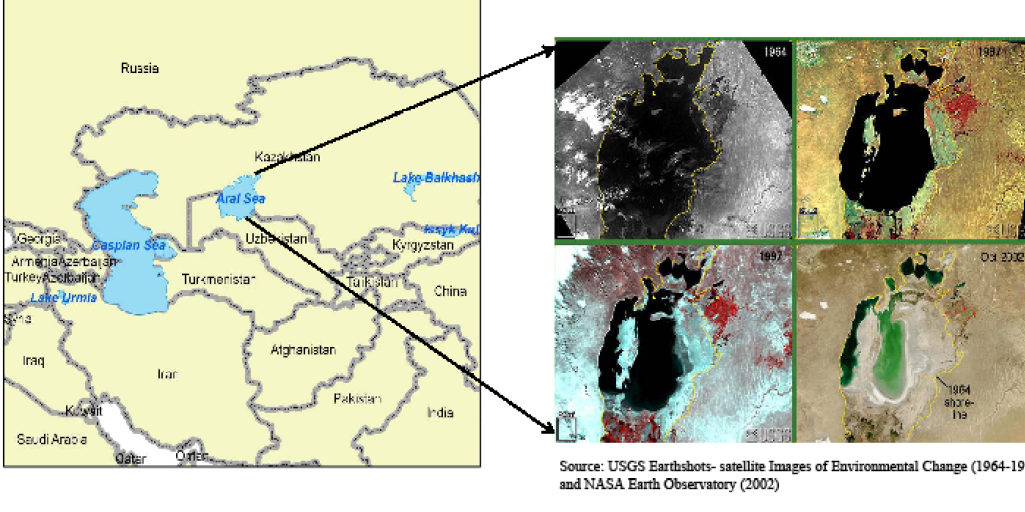

The World’s Greatest Current Environmental Collapse: The Aral Sea

In 1960 the brackish (less salty than the ocean, but not quite fresh) Aral Sea of Soviet Central Asia was the fourth largest lake in the world (behind the Caspian Sea, Lake Superior, and Lake Victoria), at about 26,300 square miles (68,000 km²). It supported the world’s largest inland fishery, with over 50,000 tons caught per year and 60,000 jobs (Figure 2.7). Wildlife was abundant; it was the muskrat king of the world, with 500,000 pelts produced per year. Like the Great Salt Lake or the Caspian Sea, the Aral Sea has no outlet to the ocean. Its water level is set by a balance between inputs and outputs. The inputs are the Syr Darya and Amu Darya Rivers that flow into it (plus a little groundwater) and the rain that falls upon it; the output is evaporation from the lake surface. This matches the simple budget for any water body (even your body): input minus output equals change in storage.
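The budget rule above (input minus output equals change in storage) can be stepped forward year by year. The following Python sketch is a toy model under stated assumptions. The function name and all of the numbers are hypothetical, chosen only to show the mechanism; they do not reproduce measured Aral Sea flows. The model also simplifies evaporation as a fixed fraction of the lake’s volume, standing in for its surface area. Under that assumption, a lake whose inflow is cut shrinks toward a new, smaller equilibrium rather than draining at a constant rate.

```python
def simulate_lake_volume(
    initial_volume: float,
    inflow_per_year: float,
    evaporation_rate: float,
    years: int,
) -> list[float]:
    """Toy closed-basin water budget (hypothetical units, e.g. km^3).

    Each year: change in storage = inputs - outputs. The single input
    lumps river inflow and rainfall together; the single output is
    evaporation, assumed (as a simplification) to be a fixed fraction
    of the current volume. There is no outlet to the ocean.
    """
    volumes = [initial_volume]
    volume = initial_volume
    for _ in range(years):
        inputs = inflow_per_year
        outputs = evaporation_rate * volume
        # Storage cannot go negative: a lake can dry up but not "owe" water.
        volume = max(volume + inputs - outputs, 0.0)
        volumes.append(volume)
    return volumes


# Hypothetical lake in balance: inflow 50, evaporation 5% of volume per year.
baseline = simulate_lake_volume(1000.0, 50.0, 0.05, 10)
# Same lake after upstream diversions cut the inflow to 10 per year.
diverted = simulate_lake_volume(1000.0, 10.0, 0.05, 100)
print(round(baseline[-1], 1), round(diverted[-1], 1))
```

In this sketch the equilibrium volume, where inflow exactly equals evaporation, is `inflow_per_year / evaporation_rate`. Cutting inflow from 50 to 10 therefore moves the balance point from 1,000 down to 200 units, and the simulated lake declines toward it year after year.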

By 2007 the Aral Sea had been reduced to three briny lakes totaling less than one-fourth of its original area and holding only 10 percent of its 1960 water volume. The balance has been broken by drastically reduced inflows from the Syr Darya and Amu Darya Rivers. Salinity has risen from 1.0 percent, past the ocean’s 3.0–3.5 percent, to 10 percent and climbing in two of the three residual lakes. The salt and the loss of aquatic habitat have not only killed every single fish but also helped drive 135 of 173 animal species to local extinction. The regional climate has become more arid, with hotter summers and colder winters.
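The tenfold rise in salinity follows almost directly from the tenfold loss of volume. Because the Aral has no outlet, salt carried in by the rivers is left behind when the water evaporates. As a simplification of our own, treat salinity S as the mass of dissolved salt M divided by the water volume V, and hold M fixed. This ignores salt that precipitates or blows away as dust, new salt brought in by the rivers, and the split into separate lakes:

$$
S = \frac{M}{V}
\quad\Longrightarrow\quad
S_{2007} \approx S_{1960}\,\frac{V_{1960}}{V_{2007}}
= 1.0\% \times \frac{V_{1960}}{0.10\,V_{1960}}
= 10\%
$$

That this idealized figure lands close to the measured 10 percent is partly coincidence, since the real lakes shrank unevenly. It nonetheless shows why shrinking a closed-basin lake concentrates its salts so quickly.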

The Muslim Uzbeks, Turkmens, and Kazakhs of the region have fared nearly as poorly. The once-thriving fishing port of Muynak is now over 60 miles from the shore. Like the rest of Karakalpakstan, the autonomous republic of Uzbekistan in which it lies, it has become a hotbed of tuberculosis, hepatitis, and respiratory and diarrheal diseases. As in the Dust Bowl, the wind carries away dust, 43 million tons of it per year. Here, though, it comes from the now-dry lake bed, whose sediments are laced with salts and toxic chemicals, including DDT and PCBs, largely from cotton farming.

Salts are not even the most poisonous contaminants in the drinking water of a region with high rates of kidney and liver disease. The Soviets used Vozrozhdeniya (Renaissance) Island in the Aral Sea for biological warfare experiments with smallpox and anthrax. With the receding waters, it is no longer an island, and the U.S. government has stepped in to prevent human exposure to these killers. Life expectancy in the region has fallen and infant mortality has tripled, with respiratory problems and diarrhea from contaminated drinking water as the major culprits. Rates of intellectual disability have soared, half the population suffers from symptoms of chronic stress, and over 100,000 people have become environmental refugees. How did this happen? In a word: cotton.

In the 1960s, Soviet economic planners needed an industry to employ the rapidly growing Turkic and Mongolian populations of the Soviet Union’s Central Asian republics (Figure 2.7). The region had the warmest climate in the Soviet Union and relatively fertile steppe soils. It also had the Syr Darya and Amu Darya, fed by the snows and glaciers of the 20,000-foot-high Hindu Kush Mountains. In these conditions, Soviet planners saw the potential for a cotton industry that could serve the needs of the Soviet Union’s quarter of a billion people, with the surplus earning cash as an export commodity. They built the Karakum Canal across Turkmenistan and other smaller canals to irrigate the deserts. Irrigation methods were wasteful, but cotton earnings grew. Meanwhile, as Soviet planners had predicted, the Aral shrank because so much of the two rivers’ water was lost to evaporation and transpiration (water used by plants) on irrigated land. Like the Dust Bowl, the Aral Sea collapse is rooted in unsustainable agricultural practices.

Plans to divert a large share of the great Ob River and its tributary, the Irtysh, from the Arctic Ocean to supplement the Aral were complete, and construction was about to begin. Then the Soviet Union collapsed in 1989–1991, making Russia, Kazakhstan, Uzbekistan, Turkmenistan, Kyrgyzstan, and Tajikistan separate countries. The Aral Sea basin suddenly became an international river basin (Figure 2.7). The great river diversion schemes were cancelled. In addition, the remnants of the Aral lie largely in Kazakhstan, while the headwaters of the Syr Darya lie in Kyrgyzstan. The headwaters of the larger Amu Darya lie in Tajikistan, and it flows through Turkmenistan and Uzbekistan, where a contraction of the cotton industry is not being contemplated. The regional cooperation to restore the Aral that might have been possible under Soviet command is thus increasingly unlikely. As Diamond would point out, neighbors that might once have been partners in an effective response are now less motivated to help solve the problem.

Kazakhstan has made some progress on its own by improving irrigation canals and building a dike to keep Syr Darya waters in the smaller north Aral. Water levels have risen from 98 feet above sea level to 125, salinity is dropping, and fish are returning. These are hopeful early signs that this remnant of the original Aral can be salvaged, even as the larger sea to the south disappears entirely. For the five million residents of the Aral Sea region, however, the future is bleak. None of the major solutions to the world’s greatest current ecological collapse, such as northern river diversions or huge reductions in water use for irrigation, is being seriously contemplated. This is despite so many scientific studies that locals complain that if every visiting scientist had brought a bucket of water, the Aral would be full.

Lesson 4: Disaster Can Be Avoided

An often-heard complaint is that the environmental literature delivers an oversupply of bad news, that it is an enclave of pessimists predicting doomsday. While our environmental challenges are steep, there is both some truth to this complaint and a danger in undue pessimism. Overly negative assessments of the state of the world and dire predictions about its future can inspire resignation or a have-fun-while-you-can attitude and thereby undermine the very commitment to the future that is the key ingredient in solving environmental problems and achieving sustainability. While it is true that one must correctly diagnose a problem before it can be solved, it is also helpful to learn about success stories because they provide the models we may wish to emulate. Fortunately, there are so many examples where natural resource problems have been overcome that the difficulty is in choosing from among them those that reveal larger principles. Below we will take a brief look at four different kinds of natural resource problem-solving that environmental history teaches us:

- substituting an increasingly scarce resource with a more abundant one,

- replacing a resource use system that damages or pollutes the environment,

- developing and applying technology for cleaning up pollution, and

- the most difficult problem of all, changing human behavior.

Natural Resource Substitution

The symbol of the 18th-century English industrial revolution is the steam engine. Employed in textiles, metallurgy, and other new mass-production industries, steam engines required large amounts of fuel, which the English supplied by turning trees into charcoal. England was being deforested because the demand for industrial fuel was exceeding the rate at which photosynthesis could supply it through tree growth. The answer was to tap the chemical energy accumulated by photosynthesis over millions of years and stored in fossil fuels, especially coal. By the early 19th century, coal had overtaken charcoal as an industrial fuel; charcoal was phased out and England’s forests began to recover.

Contemporaneously, European and North American societies were just embracing universal education and literacy but had not yet harnessed electricity. People illuminated their houses with oil-filled lamps, enabling them to read books (and do their homework) well into the evening. The lamps were filled with whale oil derived from the blubber of those enormous marine mammals. Like trees, whales are a renewable resource. However, the forest and marine ecosystems that produce these natural resources have very limited capacities. These were being quickly exceeded by a world with increasing numbers of people using new energy-intensive industrial technologies. Again, the answer lay in the storehouse of chemical energy from past ecosystems available in fossil fuels. From its start in northwest Pennsylvania in 1859, the rapidly growing oil industry was built for the next half-century on the demand for lamp fuel. In this way it was oil, a nonrenewable resource, that saved the whales, a renewable resource, just as it was coal that saved the English forests.

Within my own lifetime, though perhaps not yours, natural resource substitution has played a key role in overcoming the tightening market for oil, especially imports from the Middle East. In 1972 oil sold for only $2.90 per barrel (a barrel is 42 gallons because that was the size of the first wooden barrels used in northwest Pennsylvania). At that price, it was an economical way not only to propel an automobile, but also to generate electricity and heat buildings. Then in 1973, in the context of the Yom Kippur War between Israel and its Arab neighbors, the Organization of Petroleum Exporting Countries (OPEC) imposed an embargo on nations supporting Israel, including the U.S. The sudden reductions in oil supply multiplied the price of a barrel of oil, and thus of a gallon of gasoline. They also caused chaos as American drivers waited in long lines, hoping that there would be gasoline left when they finally reached the pump. In fact, the 1973 OPEC oil embargo is the central event that focused world attention on the modern prospects of natural resource scarcity. It perhaps even instilled in Americans a degree of paranoia that gasoline supplies could suddenly disappear. How else can one explain what happened on September 11, 2001? As TV news reports showed terrorists crashing jet planes into the World Trade Center towers, millions of Americans immediately went to the gas station to fill up.

In 1965 oil supplied 65 billion kilowatt-hours (6.1 percent) of U.S. electricity. This climbed to 365 billion kilowatt-hours (16.5 percent) in 1978, on the eve of the Iranian Revolution, which sent oil prices spiking again from $11 to $34 per barrel. Fortunately, in generating electricity, oil has many substitutes. By 1995, oil-fired generation had dropped to 75 billion kilowatt-hours, only 2.2 percent of the U.S. total, burning only the residual oil left over from gasoline refining. Coal, natural gas, nuclear fission, and hydroelectricity had substituted for oil.
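Taken together, the generation figures and percentages above imply how large total U.S. electricity generation was in each year. That makes it clear that oil’s share fell even as overall demand grew. The short Python sketch below simply divides each oil figure by its stated share. The implied totals are derived from the paragraph’s own numbers, not from an independent data source, and they inherit any rounding in those numbers. The function name is our own.

```python
# Oil-fired generation (billion kWh) and oil's share of U.S. generation,
# taken from the figures quoted in the paragraph above.
OIL_GENERATION = {
    1965: (65.0, 0.061),
    1978: (365.0, 0.165),
    1995: (75.0, 0.022),
}


def implied_total_generation(oil_billion_kwh: float, oil_share: float) -> float:
    """Back out total generation from one source's output and its share."""
    return oil_billion_kwh / oil_share


for year, (oil, share) in OIL_GENERATION.items():
    total = implied_total_generation(oil, share)
    print(f"{year}: ~{total:,.0f} billion kWh total, {oil:,.0f} from oil ({share:.1%})")
```

The implied totals come out at roughly 1,066, 2,212, and 3,409 billion kWh. By this reckoning, total generation more than tripled between 1965 and 1995, while oil-fired output in 1995 was only slightly above its 1965 level.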

In summer 2008, the price of oil, most of which goes to power vehicles, skyrocketed to $147 per barrel in July, only to crash to under $40 per barrel by the end of the year. Can we again find a substitute for an increasingly scarce resource with volatile and rising prices? Biofuels such as ethanol from corn are one possibility. As we will see in Chapter 8, however, corn ethanol yields meager net energy gains. It also places the burden of our industrial energy needs back on the current photosynthetic capacity of ecosystems, reversing the charcoal-to-coal and whale-oil-to-petroleum substitutions described above. Remaining domestic oil resources offshore and in the Arctic could be tapped. However, these speculative resources take at least a decade to find and bring to market, and it is uncertain whether substantial resources will ever be found. The 2010 Deepwater Horizon accident in the Gulf of Mexico clearly illustrates the risks.

Can the automobile be reengineered to run effectively on electricity, thus tapping into coal, natural gas, nuclear power, hydroelectricity, wind, solar power, and other sources as possible fuels? Hybrid cars, first made popular by the Toyota Prius, introduced worldwide in 2001, use onboard electricity generation to augment the gasoline engine and boost fuel efficiency by up to 50 percent. Plug-in hybrids, starting with the Chevy Volt in 2010, can be recharged from the grid overnight. My 2018 Prius Prime travels 20–30 miles on electricity before the gasoline engine kicks in, and thus averages nearly 80 miles per gallon overall. Because most driving occurs around town on a daily basis, plug-in hybrid technology can substitute electricity for a large proportion of gasoline use. Fully electric cars, first made popular by Tesla, are now becoming common. Most major automakers offer models whose lithium-ion batteries provide ranges exceeding 200 miles.
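A short electric range can lift overall fuel economy so much because gasoline is burned only on the miles beyond that range. The Python sketch below computes miles per gallon of gasoline for a day’s driving. The function name is hypothetical, and the model ignores the electricity consumed, counting gasoline only. The 54 mpg hybrid-mode figure and the trip lengths in the example are illustrative assumptions, not measured values for any particular car.

```python
import math


def overall_mpg(total_miles: float, electric_miles: float, hybrid_mpg: float) -> float:
    """Miles driven per gallon of gasoline for a plug-in hybrid (toy model).

    Miles up to the battery's electric range use no gasoline; the rest are
    driven in hybrid mode at `hybrid_mpg`. Electricity use is not counted,
    so this measures gasoline economy only.
    """
    gasoline_miles = max(total_miles - electric_miles, 0.0)
    if gasoline_miles == 0.0:
        return math.inf  # the whole trip fit within the electric range
    gallons = gasoline_miles / hybrid_mpg
    return total_miles / gallons


# Hypothetical 80-mile day, the first 26 miles on battery power.
print(overall_mpg(80.0, 26.0, 54.0))  # 80.0
# Hypothetical 20-mile errand day, entirely within a 26-mile electric range.
print(overall_mpg(20.0, 26.0, 54.0))  # inf
```

In this toy model, a day that stays within the electric range uses no gasoline at all. That is why the share of short, around-town trips matters so much to how much gasoline a plug-in hybrid displaces.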

Environmental Substitution

A second category of resource problem-solving is substituting a more environmentally friendly natural resource use system for a damaging or polluting one. Though probably unaware of the collapses on Easter Island and among the Maya, the Japanese were able to turn a disaster of deforestation and soil erosion into a success of reforestation. In the 17th and 18th centuries, population growth (and with it the need to clear forests for agriculture) combined with a heavy reliance on wood for both energy and construction to cause severe deforestation. In response, Japan’s Tokugawa regime implemented strict forest-use regulations.

Today, the densely populated, affluent country enjoys 74 percent forest cover. It has also been the leading importer of forest products, bringing in 18–22 million tons of tropical hardwood annually in the early 1990s, compared with domestic production of only 5 million tons in 1995. The leading source of these imports was Indonesia, which exported over 12 million tons of sawn and raw logs and plywood to Japan each year from 1990 to 1995. As a result, Indonesia lost nearly 4,000 square miles of forest per year in a region second only to the Amazon in species diversity. By importing most of its forest products, Japan has gained access to the natural resource value of Indonesian forests while simultaneously preserving the ecological and cultural services provided by its domestic forests. These services, such as flood protection, soil binding, wildlife habitat, recreation, and aesthetics, accrue to Japan rather than to Indonesia.

In the Tokugawa period, Japan also deemphasized agriculture as a means of supplying protein to its population and took to the seas as one of the world’s great fishing nations. While this shift in food sources helped save Japan’s soil and forests, it placed new burdens on ocean fisheries, a resource the whole world shares. Thus, Japan has avoided the fate of Easter Island and the Maya by ending unsustainable forestry practices at home. However, the substitutes it has found may be equally unsustainable, if for different reasons and for different human populations.

As anyone who has watched Mary Poppins (admit it, you’ve seen it) can attest, coal is a dirty fuel that only Dick Van Dyke could be moved to dance about. The rest of us just hope it doesn’t end up in our Christmas stocking. Nevertheless, many of the world’s great cities, not only London a century ago, have relied upon this abundant fossil fuel to heat homes and cook meals, and many still do. In particular, the rapidly growing cities of India and China are heavily dependent on coal, with the result that the world’s 50 most polluted cities lie in these countries. Like London, American cities were also reliant on coal to heat buildings until the mid-20th century. Clean cities, like New York and Boston, were those that burned hard anthracite coal from the seams of northeast Pennsylvania upon which the cities of Wilkes-Barre and Scranton were founded. Dirty cities, like Cleveland and Chicago, were those that burned softer and more abundant bituminous coal.

Fortunately, all these cities began to enjoy markedly better air quality in the 1930s when coal was replaced by cleaner-burning natural gas, delivered through high-quality steel pipelines from fields in the south central region of the country to furnaces and stoves in each home and public building. Emissions of sulfur, soot, mercury, radioactivity, and carbon dioxide from space heating all plummeted—though just in time to be replaced by automobile exhaust, which was, in turn, improved through the use of catalytic converters beginning in the 1970s. The question for us is whether we can repeat this history of air quality improvement through technological progress by replacing energy sources that emit greenhouse gases, especially gasoline and coal-fired electricity, with less polluting alternatives. We will explore this issue in depth in later chapters.

Environmental Cleanup Technology

On June 22, 1969, the Cuyahoga River, which runs through Cleveland, Ohio, to Lake Erie, caught fire. Environmental disasters like this one, which attract extensive media coverage and draw people’s attention away from their daily activities, are usually the tip of an iceberg below which lie chronic abuses and nagging problems that we ordinarily tolerate or ignore. This photogenic evidence of pollution, together with widespread discharges of sewage, phosphates, heavy metals, and other, more toxic pollutants, sparked a movement that led to the passage of the Clean Water Act of 1972.

The Clean Water Act brought wastewater (i.e., sewage) treatment to nearly every town and city in the U.S. and required industries to use “best available technology” to remove waterborne pollutants. The result was a steady improvement in water quality and aquatic ecosystems in the Great Lakes and in major rivers flowing through populous regions, such as the Hudson, Potomac, and Ohio. The act stands as a prominent, and too often untold, environmental success story brought about through the widespread application of cleanup technology.

Changing Human Behavior

After a century of plowing their fields every year, Midwestern farmers began to realize in the 1970s that annual plowing was simply unnecessary. The use of conservation tillage (leaving crop residues in the field to hold and build the soil, and replanting in furrows often less than an inch wide) expanded steadily; in various forms, it was practiced on about half of all U.S. cropland acres by 2017. Saving soil is only part of the benefit: conservation tillage and no-till also save energy and labor, generally without sacrificing yields. This shift is evidence that people will abandon time-honored practices when it is clear that a new approach furthers their own goals and they are empowered to adopt it.

When I was a kid, American roadways were littered with every kind of trash, from beer bottles to fast-food wrappers, and “recycling” was a word most people had never heard. Littering, once common and acceptable, is now considered “low-life” behavior where it is not outright illegal. Nearly everyone I know now recycles at least some of their used articles, and young people regard recycling as the standard way of dealing with bottles, cans, and other everyday items. The definition of “normal” behavior has shifted.

Germany, a leader in sustainable natural resource management, has taken recycling to the next level. By charging high rates for trash pickup and landfill disposal on the one hand, and requiring stores to take back packaging returned by customers on the other, Germany has created a system in which retailers have a strong incentive to minimize product packaging. The country is at the forefront of putting “reduce, reuse, recycle” into practice. The result has been a quadrupling of recycling and a halving of the consumer solid waste requiring landfill disposal. Again, people can change if the incentives are right and they are effectively empowered.

Americans love their cars so much that most visitors to national parks, where the splendors of nature abound like nowhere else, never venture more than a few hundred yards from them. Passenger trains reduce the energy required to move each person by 90 percent compared with cars, and they yield enormous benefits in urban land use, saving cities from being composed mostly of roads and parking lots. Mass transit is therefore a key element in achieving natural resource sustainability.

Here again, technology is on the move. The proposed “hyperloop” would levitate train cars magnetically, eliminating ground friction, and run them through near-vacuum tubes, minimizing air friction. Speeds exceeding 500 mph appear to be achievable. Uber and Lyft have promised small, efficient robot cars in the near future, which could greatly reduce the need for the parking that currently dominates urban space.

Within cities and towns, will most Americans ever accept robot taxis, electric scooters and bikes, buses, streetcars, subways, and trains as their primary modes of travel? What if these forms of transport were far cheaper than driving? What if buses and trains ran frequently to numerous destinations and offered comfortable seating, wireless internet with a free menu of movies, and uninterrupted cell phone service, and Hollywood portrayed them as a great place to meet your true love? Would those be the right incentives to enable people to change?

Conclusion

This selective examination of environmental history has shown how humans have risen to become the dominant species on Earth by expanding their ecological niche to the point where, collectively, we are now a force of nature. While there are many instances in which humans have undermined nature’s carrying capacity with disastrous results, there are also numerous cases of effective natural resource and environmental problem-solving, ranging from resource substitution to technological innovation to behavioral change. This history has much to teach us as we face the even steeper challenges of natural resource sustainability in the 21st century. How will this history read a century from now? That is a question we will all answer together. I look forward to watching it unfold and perhaps even influencing it. I hope you will too.

Further Reading

Crosby, Alfred, 1986. Ecological Imperialism: The Biological Expansion of Europe, 900–1900. Cambridge University Press, New York.

Diamond, Jared, 1999. Guns, Germs, and Steel: The Fates of Human Societies. W.W. Norton & Company, New York.

Diamond, Jared, 2005. Collapse: How Societies Choose to Fail or Succeed. Penguin Books, New York.

Egan, Timothy, 2006. The Worst Hard Time: The Untold Story of Those Who Survived the Great American Dust Bowl. Houghton Mifflin Company, New York.
