Design Case: Implementing Gamification with ARCS to Engage Digital Natives

Travis Thurston, Ph.D.

Abstract

Gamification is an emerging topic for both student engagement and motivation in higher education online courses as digital natives become post-secondary students. This design case considers the design, development, and implementation of a higher education online course using the ARCS model for motivational design combined with the four-phase model of interest development as a framework for gamification implementation. Through “designerly ways of knowing,” this design case explores engaging digital native students with a gamified online course design, which will be of interest to instructional designers and instructors in higher education. Overall, students in the pilot course responded favorably to the incorporation of gamification and perceived it to have a positive impact on the overall learning experience. Future iterations can improve upon this approach to plan more targeted gamification strategies.

A design case explores “designerly ways of knowing” (Cross, 1982, p. 223) and thinking (Gray, et al., 2016; Park, 2016; Legler & Thurston, 2017), within the context of “a real artifact or experience that has been intentionally designed” (Boling, 2010, p. 2). This design case includes considerations and analysis of the creation and delivery of an online instructional technology course, using motivational design and interest development as a framework for implementing gamification. Working toward “improving the congruence between the perspectives of students and those creating the learning environment” (Könings, et al., 2014, p. 2), this design case should inform future gamified course design strategies. With implications for intentional teaching (Linder, et al., 2014) and design (Cameron, 2009), this case should be of interest to higher education instructional designers and instructors alike.

As an instructional designer in higher education, I work with many instructors who are searching for student engagement strategies. I encourage instructors to use student-centered and evidence-based practices to improve online courses. Therefore, when I had the opportunity to teach an online course that serves as an introduction to website coding and development for non-computer science majors, I wanted to find a way to make the course more engaging for my students. This explanatory case study is framed by an online course redesign, which aimed to improve levels of student engagement and motivation by introducing a learner-centered, game-like environment to structured course activities. This was done by referencing the attention category of the ARCS model for extrinsic motivation and relying on the four-phase model of interest development to build intrinsic motivation.

Literature Review & Theoretical Framework

More than one in four higher education students in the United States are enrolled in at least one distance course (Allen & Seaman, 2016). With online enrollments growing, designing engaging architectures in asynchronous course environments becomes paramount (Riggs & Linder, 2016). One way to engage students is through gamification, which uses various game-like features (points, levels, quests or challenges, Easter eggs, etc.) in non-game contexts to change learner behavior (Deterding, et al., 2011). As digital natives (both generation z and millennials) become post-secondary students, gamification is emerging as a topic for addressing student engagement and motivation in higher education online courses (Nevin, et al., 2014; Schnepp & Rogers, 2014; Khalid, 2017).

Digital Natives

Given the fast-paced and technology-connected world in which we live, it’s no surprise that “[t]echnology influences all aspects of everyone’s lifestyle in most developed and developing societies, including their behaviour, learning, socialization, culture, values, and work” (Teo, 2016, p. 1727). Prensky (2001) originally proposed that digital natives be defined as the generation who have grown up immersed in technology, while Tapscott (2009) defines them as those born after 1976, and Rosen (2010) identifies them as those born after 1980. As such, students from generation z and millennials are typically classified as digital natives. However, there is disagreement in the literature on classifying digital natives as a generation, because “some individuals born within the digital native generation may not have the expected access to, or experience with digital technologies, [and] a considerable gap among individuals may exist” (Chen, Teo & Zhou, 2016, p. 51). For that reason, others suggest that the label of “digital native” be used more as a classification of a specific population of students, and not applied broadly to a generation tied to age (Helsper & Eynon, 2010; Margaryan, Littlejohn & Vojt, 2011). According to Palfrey and Gasser (2011), three criteria must be met in order to classify a student as a digital native: the student must be born after 1980, have access to digital technology, and possess digital literacy skills.

A common misconception is that digital natives are not yet old enough to be in college; in fact, they are considered to make up the dominant population of students currently enrolled in college courses in the United States (Seemiller & Grace, 2016). Our current education system was not specifically designed for digital native students (Prensky, 2001), so it is “essential that we continue to develop higher education in ways that promote effective forms of student engagement” (Kahn, et al., p. 217). Selwyn (2009) acknowledges that digital natives have been found to express enhanced problem-solving and multitasking skills, to enjoy social collaboration, and to learn at a quick pace while engaging with technology. However, it is not realistic to assume that all students will exhibit all of these skills. Digital natives tend to prefer engaging in games and can learn through digitally-based play and interactions (Prensky, 2001; Palfrey & Gasser, 2008). This suggests that providing autonomy-supportive assignments that require the use of problem-solving skills in game-like environments will appeal to digital native students (Mohr & Mohr, 2017).

Gamification

A number of theoretical and practical models for implementing gamification are emerging (Muntean, 2011; Urh, et al., 2015; Kim & Lee, 2015; Mora, et al., 2015), which employ various instructional approaches to motivate learners to engage with course content. Gamification implementation approaches are being attempted in various online course disciplines from the humanities to the physical sciences, and from business to instructional technology (Hanus & Fox, 2015; Chapman & Rich, 2015; Jagoda, 2014; Domínguez, et al., 2013; Stansberry & Hasselwood, 2017). When gamification is implemented effectively, it can provide the impetus for students to become intrinsically motivated to construct knowledge through relevant learning activities (Armstrong, 2013), as well as provide situated contexts in which students can apply knowledge and skills (Dondlinger, 2015). Gamification can increase student engagement by introducing myriad motivational components into the learning environment (Keller, 1987) while also providing for autonomy-support, which affords both choice and structure toward student engagement (Reeve, 2002; Jang, Reeve & Deci, 2010; Lee, et al., 2015). The elements needed in design and development make “motivating students . . . a topic of practical concern to instructional designers” (Paas et al., 2005, p. 75) and instructors, as “a clear design strategy is the key to success in gamification” (Mora, et al., 2015, p. 100).

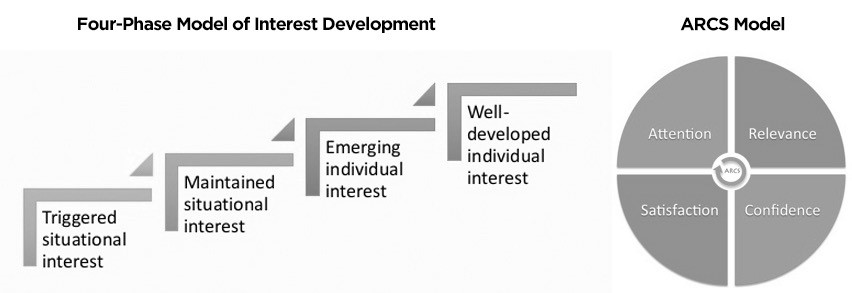

ARCS Model & Interest Development

“Learning as a result of motivation has been attributed to interest” (Dousay, 2014), which makes interest a critical positive emotion in learning and motivational contexts (Schraw, et al., 2001; Schroff & Vogel, 2010). Simply stated, gamification can initially be used as a hook to gain the attention of students in a course, which can then allow students to build interest in course content and become intrinsically motivated to continue to learn. With this concept in mind, the theoretical framework for this design case nests gamification and the four-phase model of interest development (Hidi & Renninger, 2006) within the attention category of the ARCS model (Keller, 1987).

In this framework, “interest refers to focused attention and/or engagement” (Hidi, 2006, p. 72), while the ARCS model refers to a motivational design structure, which includes “how many of what kinds of motivational strategies to use, and how to design them into a lesson or course” (Keller, 1987, p. 1).

Motivational design is considered a subset of instructional design and learning environment design (Keller, 2010). By combining motivational design and interest development, “it is possible to incorporate gamification into the ARCS model for gamification of learning” (Hamzah, et al., 2014, p. 291). As depicted in Figure 1, students progress sequentially through the four-phase model of interest development. The ARCS model, however, engages students cyclically, and students can be engaged in multiple sections of ARCS simultaneously. The attention section is discussed extensively in this case study, through perceptual and inquiry arousal, but each of the other sections plays an important role in motivational design. Relevance speaks to providing students with a rationale that links to previous experience and to giving students choice. The confidence section addresses facilitating student growth, communicating objectives, and providing feedback. Finally, the satisfaction section considers praise or rewards, and immediate application of skills or materials learned.

While gamification provides extrinsic elements to increase student engagement and motivation (Muntean, 2011), it can also be used to draw student attention toward triggered or situational interest, which can develop into intrinsic motivation through the content and the learning environment (Hidi & Renninger, 2006). This process allows students to continue to engage in the content and learn more of their own volition (Schraw, et al., 2001; Banfield & Wilkerson, 2014). While intrinsic motivation typically requires individual interest within students, “some other students without such individual interest may also find the topic interesting because of situational interest factors, like novelty” (Hidi, 2006, p. 73), or in this case, gamification. Therefore, this course design provides the environment in which an individual can become intrinsically motivated (Gagné & Deci, 2005) and thereby “facilitate[s] the development and deepening of well-developed individual interest” (Hidi & Renninger, 2006, p. 115). This course also includes elements of autonomy-support and student choice, as “online environments that offer students further choice may also give teachers a way of leveraging students’ interest for the purposes of increasing their attention and motivation for school tasks” (Magnifico, et al., 2013, p. 486).

Design Context

The author of this design case served as the instructional designer for the redevelopment of the course and taught the gamified version as a pilot course in an adjunct instructor capacity. This positionality affected the overall approach of the design case, as the initial analysis of the course was an instructor-led self-evaluation of course components. This serves well for a complete design case, as the same individual developed and taught the course, providing seamless continuity from its intentional design to its intentional teaching. The development that this design case followed began with an initial analysis of the course, a redesign process that considered rationales for implementing gamification elements, and an instructional piloting of the course, which included the gathering of student feedback to be used in future iterations of this and other gamified classes.

Initial Analysis

The initial review of the course organization, and identification of the major assignments and assessments, found that the course was designed as high-touch for the instructor, requiring a significant time commitment to provide formative feedback to students throughout all course case studies within the learning management system (LMS). The course in this design case provided an introduction to Hypertext Markup Language (html), used to create webpage structure, and Cascading Style Sheets (CSS), used to style the visual appearance of webpages; these are two of the main technologies employed in building webpages. Because one of the objectives of this introductory class was to train students in a complex technical skill, which requires educators to inhabit the course’s structures by engaging in a significant amount of formative feedback and reinforcement of concepts (Riggs & Linder, 2016), this high-touch course design was considered necessary. The course was broken into modules, with each module representing one week’s worth of material. Coursework was grounded in relevant case studies from the textbook and required students to apply the learned skills in summative projects. Specifically, the course included twelve case study assignments, five low-stakes quizzes, five class discussion-based assignments, and two personalized projects (midterm & final) with peer reviews.

This course delivery mode was originally designed with a blended objectivist-constructivist approach (Chen, 2014) and was consistent with basic andragogic principles, by requiring immediate application of knowledge and skills learned (Huang, 2002). In practice, this course focused on teaching html and CSS coding to non-computer science majors. The aim was to provide students with a basic understanding of coding that can be applied in a supporting way to any of a variety of future professions that students will pursue. The objectivist-constructivist approach combined some self-directed learning and skill-building with hands-on and project-based assignments and assessments to demonstrate learning. Because students in this course learned only the basics of html and CSS, and might never have the opportunity to apply these skills in their professions, there was a potential gap in student motivation that needed to be addressed within the course design.

To identify areas of strength and deficiency in our course design, an instructor self-rating evaluation instrument was utilized. Developed by The California State University system, and formally known as the Quality Online Learning and Teaching (QOLT) Course Assessment – Instructor Self-Rating (2013), the evaluation instrument serves to engage instructors in rating the quality of the course. This is done using 54 objectives, spread over nine sections in the instrument, with a four-point scale based on Chickering and Gamson’s (1987) principles for good practice. Based on the data reported by the instructor, each section of our course was rated as either baseline (minimum), effective (average) or exemplary (above average), and the instrument provided recommended improvements based on the results of the evaluation. Scores, results, and recommended improvements for the course from the QOLT evaluation are displayed in Table 1.

Scores indicated that sections one, four, five, seven and nine were viewed as effective, but still had room for improvement. As anticipated, sections two and three were sound in design and rated at the highest classification as exemplary. Sections six and eight were rated at the lowest classification as baseline. Combining the scores of all nine sections, the overall design of the course was rated as effective at 72%.

Table 1. QOLT instructor self-rating scores, results, and recommended improvements

| Section | Score | Result | Recommended Improvement |
|---|---|---|---|
| 1 Course Overview and Intro | 17/24 | 91% Exemplary | provide relevant content |
| 2 Assessment of Learning | 17/18 | 94% Exemplary | |
| 3 Instructional Materials | 16/18 | 89% Exemplary | |
| 4 Student Interactions | 17/21 | 81% Effective | increase student engagement |
| 5 Facilitation and Instruction | 18/24 | 75% Effective | increase teacher presence |
| 6 Technology for Learning | 10/15 | 67% Baseline | focus media elements |
| 7 Learner Support & Resources | 6/12 | 50% Effective | provide additional links |
| 8 Accessibility | 4/21 | 19% Baseline | increase content accessibility |
| 9 Course Summary | 6/9 | 67% Effective | individual student feedback |
| Total Overall Score | 111/156 | 72% Effective | |

Nevertheless, there were a number of recommendations from the QOLT instrument to improve the course further by increasing student engagement, providing relevant content, focusing on media elements, and increasing content accessibility. The intentional design changes to the course were based on the recommended improvements on sections one, four, six and eight from the QOLT, and were framed using the ARCS model with a gamification approach. Given the results of this analysis, it was determined that the course design already met criteria for the relevance, confidence and satisfaction categories of the ARCS model (Keller, 1987). The added gamification aspects would therefore correspond with the attention category, with emphasis on interest development, as the course was an introductory-level coding class structured to develop basic html & CSS web-design skills. While the other three categories of ARCS are not explored explicitly in this design case, there tends to be a reasonable amount of overlap between the four categories (Gunter, et al., 2006).

Student Attention

Perceptual Arousal

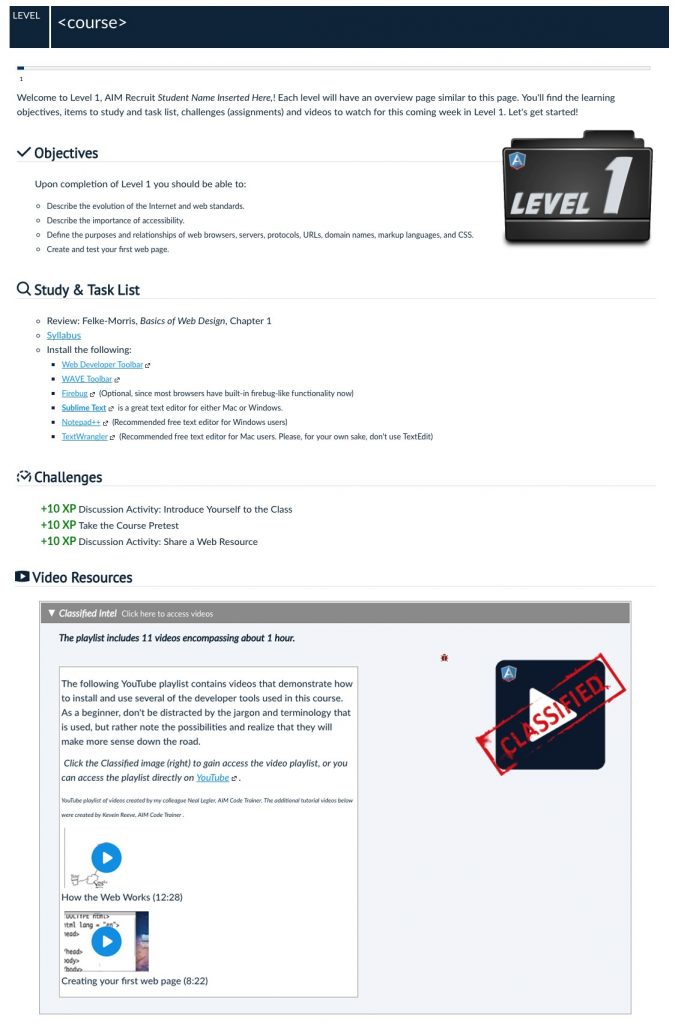

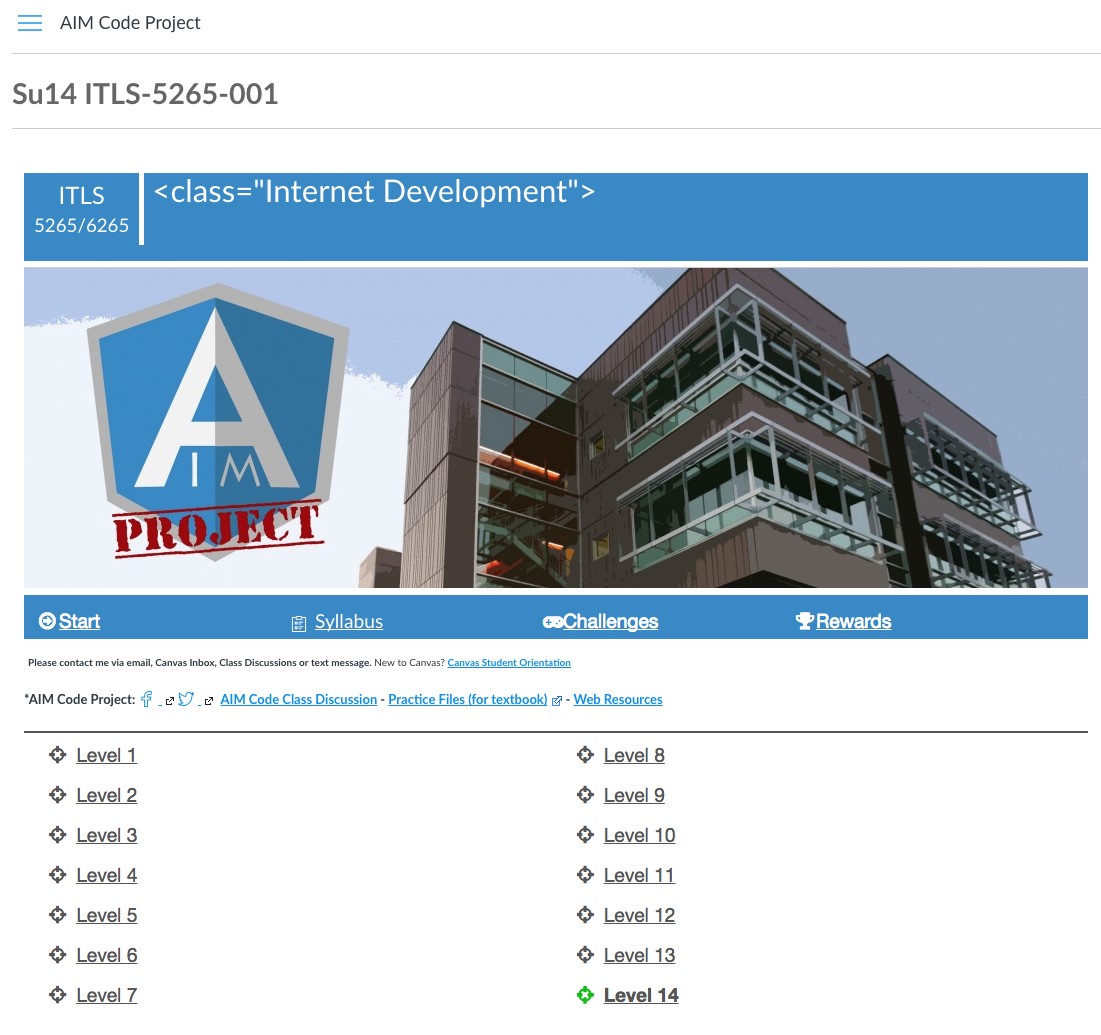

The implementation of gamification in this course aimed first to capture student interest through the novelty of such elements being present in higher education courses. This was accomplished by a change in semantics and the creation of a course theme, as “triggered situational interest can be sparked by environmental or text features” (Hidi & Renninger, 2006, p. 114). A spy theme was selected as the overarching theme of the course, which included altering course semantics. The instructor was referred to as a trainer, students as recruits, the course itself as the AIM Code Project, points for the course as XP (experience points), assignments as challenges, weekly modules as levels, and course videos as classified intel, all of which were portrayed on the module introduction pages (see Appendix B). The name AIM Code Project was selected as a spinoff term derived from WebAIM (Web Accessibility In Mind), which was created at Utah State University (USU) in the Center for Persons with Disabilities. This name played well into the course format and placed a greater emphasis on improving accessibility, as recommended in section eight of the QOLT.

This theme also led to the development of a storyline that included students training for a secret government project to become coding agents. In the course introduction module, students were met with a call to action:

You have been recruited specifically for the AIM Code Project, because of the individual set of skills you bring to our group. We see potential in your abilities, and during this training, you will be called upon to incorporate your current skill set and your background or experience as you learn html and CSS coding.

The Goal: Progress through each level of challenges, gather XP, and access helpful resources to ultimately become an AIM Guild Agent. As your trainer/instructor, I will be with you through this journey to provide assistance when needed. One last thing: watch for opportunities to gain additional XP through gathering clues and accepting special assignments. That’s all for now. Good Luck!

This narrative from the instructor served to immerse students in the gamified elements. Once the students received their call to action, they were presented with a twist. The spy theme allowed leeway to “create a situation that [would] gain the player’s attention via dramatic elements” (Gunter et al., 2006, p. 14), which in serious games is also known as the “dramatic hook” to gain user attention in setting the problem. Students were informed that a spy had infiltrated the AIM Code Project, and they would be gathering clues throughout the course to identify the spy. This placed additional emphasis on students finding a bug icon and accessing the secret clues each week. Details surrounding these clues are explored more in the variability section below.

Inquiry Arousal

Case studies can be used for inquiry arousal to involve students in hands-on, relevant learning activities (Jacob, 2016). While the course already included interesting examples, new videos were created for this iteration, aiming to stimulate an attitude of inquiry by introducing each week’s content in an interesting way. The case studies posed a weekly surmountable challenge that required students to use certain skills and coding elements to build upon a webpage they were creating. Because the skills learned through these case studies were directly implemented in coding a webpage for the final course project, and were applicable to future work in html coding, our course structure provided relevant experience by Keller and Suzuki’s definition: “relevance results from connecting the content of instruction to the learners’ future job or academic requirements” (Keller & Suzuki, 2004, p. 231).

The USU media production team created the introductory video for the course, to provide curricular onboarding, as well as a launching module to set expectations (Mora, et al., 2015). Additional intro videos were produced for each module or level of the course. The course launch video introduced students to the navigation and class structure on Canvas and incorporated the storyline of the gamified theme. Additionally, all of the video resources that had been compiled in previous iterations of the course were presented to the students as “classified intel”, in line with the spy theme and framed as though the students now had access to these resources to support them in their case studies. The media elements added to this course addressed the deficiencies found in section one of the QOLT evaluation, and the change in focus for other media elements improved the QOLT score for section six.

Formative quizzes were part of the original class and were used to check understanding throughout the semester. However, for our new course design, these quizzes were changed to low-stakes quizzes or learning activities, allowing students to take them in an open-book format with multiple attempts allowed. Low-stakes quizzes of this kind can improve student metacognition and knowledge transfer in new contexts (Bowen & Watson, 2016, p. 62). Students earned the “quiz key” by completing an academic integrity module at the beginning of the course. Although the course was predesigned to allow for multiple quiz attempts, students were informed that reattempting quizzes was a privilege they could earn by completing the academic integrity module. Thus, once students had earned the “quiz key” digital badge, they could use it throughout the semester for multiple reattempts on the five quizzes, which became inquiry-based activities rather than traditional assessments.

In terms of gamification, the concept of multiple quiz attempts can be compared to the game concepts of ‘save points’ and ‘multiple lives’, which allow users a safe way to fail and learn from failure to improve performance. “This contrasts with the traditional ‘examination’; a one-shot chance to succeed in a class. Indeed, within virtual environments, the clock can be wound back to the last save point, providing learners with the opportunity to succeed through multiple attempts, resulting in experiential learning, otherwise unobtainable by students doing ‘the best’ they can with one shot” (Wood, et al., 2013, p. 519).

Taking the concept of relevant learning activities a step further, the last quiz of the semester required students to apply a coding skill learned in class to our spy context. Building on the “quiz key” idea, the LMS feature that requires students to enter an access code to unlock a quiz was activated. This feature is usually used to restrict a quiz to an appointed time: for example, when proctoring is available. In this case, however, the access code for the quiz was placed in a hidden div (a div element that is present in the page’s html code but is not displayed on the rendered page) on the LMS quiz page. Students were required to inspect the page and search through its html code to find the hidden div and the quiz access code, which was represented as a green key. Students then had to enter the access code to take their final quiz. This played well into the spy theme and allowed students to apply a relevant coding skill within the context of the course.
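The green-key activity relies on the difference between what a browser renders and what the page source contains. The Python sketch below illustrates that idea with the standard library’s `html.parser`: it scans a page fragment for div elements hidden with the html `hidden` attribute or an inline `display:none` style and returns their text. The markup, the `green-key` id, and the `ACCESS-CODE-HERE` placeholder are hypothetical; the course materials do not specify how the LMS quiz page hid the code, and students located it by inspecting the page in their browsers rather than by running a script.

```python
from html.parser import HTMLParser

# Hypothetical quiz-page fragment: the access code sits in a div that the
# browser does not render, so it is visible only in the page source.
QUIZ_PAGE = """
<div class="quiz-intro">
  <p>Enter the access code to begin the final challenge.</p>
  <div id="green-key" style="display: none;">ACCESS-CODE-HERE</div>
</div>
"""


def is_hidden(attrs):
    """Return True if a tag's attributes hide it (hidden attribute or display:none)."""
    attr_map = dict(attrs)
    if "hidden" in attr_map:  # boolean attribute, e.g. <div hidden>
        return True
    style = (attr_map.get("style") or "").replace(" ", "").lower()
    return "display:none" in style


class HiddenTextFinder(HTMLParser):
    """Collect text that appears inside hidden div elements (including nested ones)."""

    def __init__(self):
        super().__init__()
        self._div_stack = []  # one flag per open <div>: is it, or an ancestor, hidden?
        self.hidden_text = []

    def handle_starttag(self, tag, attrs):
        if tag == "div":
            parent_hidden = bool(self._div_stack) and self._div_stack[-1]
            self._div_stack.append(parent_hidden or is_hidden(attrs))

    def handle_endtag(self, tag):
        if tag == "div" and self._div_stack:
            self._div_stack.pop()

    def handle_data(self, data):
        # Keep non-blank text only while inside a hidden div.
        if self._div_stack and self._div_stack[-1] and data.strip():
            self.hidden_text.append(data.strip())


def find_hidden_text(html):
    """Return the text of every hidden div in an html string."""
    finder = HiddenTextFinder()
    finder.feed(html)
    finder.close()
    return finder.hidden_text


print(find_hidden_text(QUIZ_PAGE))
```

A browser’s developer tools perform the same search interactively; the script simply makes explicit that the code remains in the html even though it never appears on screen.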

Variability

This section focuses on maintaining student attention, which was perhaps the most difficult task. Identifying a strategy that utilizes a novelty like gamification to initially capture student attention and then maintain that attention over 15 weeks is challenging, because “no matter how interesting a given tactic is, [students] will adapt to it and lose interest over time” (Keller & Suzuki, 2004, p. 231). This led to the inclusion of two gamification elements that would introduce variety over the duration of the semester.

The first element was the inclusion of secret clues, which in gamification terms would be considered Easter eggs or hidden tips. In this case, the clue was accessed by finding a small bug icon that was located somewhere in the content pages or video page for each module. Once students found the secret clue, they were awarded one bonus point, one tip to help with their case study for that week, and another tip to identify the AIM Code spy. This aligned with section one of the QOLT by providing relevant content. The next element was the inclusion of bonus levels, which were offered only in every other module. These levels provided an opportunity for social engagement on a current-event topic (e.g., net neutrality) in a discussion thread. This improved upon section four of the QOLT and provided variability to the course flow.

Student Evaluation

Upon completing our course development with added gamification elements, the class was offered as a pilot course to a mixed enrollment of undergraduate and graduate students, with the author serving as the instructor. Based on demographic information, the students in the course fit the previously-discussed criteria to be classified as digital natives (Palfrey & Gasser, 2011). To help improve future iterations of the course, at the semester’s conclusion, students were asked to complete an anonymous survey to provide overall course feedback, as well as feedback specific to the gamification aspects of the class design. Among other questions, the survey included one Likert-style inquiry about the impact that gamification elements had on the learning experience, as well as one open-ended question asking for additional feedback about the course in general.

Results

Student Survey Responses

In the anonymous student survey at the end of the semester, one question specifically addressed the course’s gamification elements. For this, students were asked to indicate on a 1-to-5 Likert scale how gamification contributed to their learning experience. On average, students rated this item at 4.14 (n = 21, SD = 0.85, SEM = 0.19, Min = 2.00, Max = 5.00). Perception data showed that 17 of the 21 students reported that the course’s gamification aspects either somewhat (rating of 4.0) or significantly (rating of 5.0) enhanced their learning experience. It should be noted that one student indicated that the gamification aspects somewhat reduced the learning experience (rating of 2.0), while three students indicated that the gamification aspects neither enhanced nor reduced the learning experience (rating of 3.0). Although a strong majority reported a rating of 4.0 or 5.0, the results speak to the point that gamification was not effective for all students.
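The summary statistics above can be reproduced with a short Python sketch. The individual ratings were not reported, so the list below is an assumption: it is reconstructed from the reported counts (one rating of 2, three of 3, and 17 of 4 or 5) together with the reported mean of 4.14, which, if ratings are whole numbers, implies nine ratings of 4 and eight of 5. SD here is the sample standard deviation (n − 1 denominator), and SEM = SD / √n.

```python
import math
import statistics


def summarize(ratings):
    """Return n, mean, sample SD, SEM, min, and max for a list of Likert ratings."""
    n = len(ratings)
    sd = statistics.stdev(ratings)  # sample standard deviation (n - 1 denominator)
    return {
        "n": n,
        "mean": statistics.mean(ratings),
        "sd": sd,
        "sem": sd / math.sqrt(n),  # standard error of the mean
        "min": min(ratings),
        "max": max(ratings),
    }


# Assumed distribution reconstructed from the reported counts and mean,
# not raw survey data: one 2, three 3s, nine 4s, and eight 5s.
ratings = [2] + [3] * 3 + [4] * 9 + [5] * 8

summary = summarize(ratings)
for key, value in summary.items():
    print(f"{key}: {round(value, 2)}")
```

Rounded to two decimals, these values match the reported mean (4.14), SD (0.85), SEM (0.19), minimum (2.00), and maximum (5.00).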

The open-ended narrative responses were analyzed using the “describe, compare, relate” formula (Bazeley, 2009, p. 10) and organized by the themes of the ARCS attention category implemented for the gamification portion: perceptual arousal, inquiry arousal, and variability.

Perceptual Arousal

This theme relates to the design objective of captivating student attention with novelty and triggering initial interest in course content. Overall, students indicated that they enjoyed how the course included elements of gamification. However, feedback ranged across a spectrum, from one student who found gamification to be distracting, to others who reported that it significantly enhanced their learning experience:

- “I enjoyed the gamification… making the assignments more interesting.”

- “At first the gamification was pretty exciting and fun. It motivated me to spend more time in the course.”

- “I have always felt that gamification has aided my ability to learn. I love the idea that we are learning while having fun.”

- “When I first read the syllabus, I became excited for the course because of the gamification aspect. Striving to do my best in my classes is something I’ve always done, but the gamification led to a greater desire to not only do my best on the assignments but to work to find the spy who was leaking the information to others.”

Student narratives revealed that while they enjoyed gamification overall, they also thought that additional instructions or a rationale for the gamification elements would have been beneficial. The narrative exposed mixed results, as some students struggled with taking it seriously as part of a college course, while others felt that it was a positive factor in capturing their interest and impacting their engagement:

- “I think that I engaged a little more in this class because of gamification. It was kind of silly at times, but I liked it.”

- “The storyline was fine, but I think you should push it more.”

- “Initially I was skeptical about the plot set up for this course. I didn’t see how it would be integrated. As I got into it, though, I especially appreciated the pattern of each week or ‘level’.”

- “As for the gamification, I thought it was fun! I’ll be honest however; it was a little bit confusing. I think it was well planned out, but in the future, I think greater effort could be made to highlight the aspect of the gaming. Maybe making it a little simpler would be beneficial.”

These student narratives underline the importance of additional scaffolding and of providing a more explicit rationale (in the course syllabus and introduction module) for including gamification elements. Overall, students touched on the idea that they approached gamification with an established schema that appeared to have influenced them in multiple ways. Some students perceived gamification as fun, while others viewed it as a gimmick and out-of-place in a college setting.

Inquiry Arousal

This theme speaks to engaging students in relevant activities that promote inquiry. The QOLT analysis identified videos and media elements as an area of emphasis for improving the course design, and these elements were implemented with gamification to raise the level of inquiry for students. Student responses touched on two main aspects of the videos: (1) the gamified feature of listing them as “classified” content, and (2) the weekly intro videos that provided context for the case studies while also playing on the course theme:

- “In our class I really enjoyed how our teacher put short games, and fun videos for us to view or play as we worked on our projects.”

- “The videos were helpful and it was nice to have them available.”

- “I liked the little videos at the beginning of units. It’s good to have an introduction, and the spy music and secretive nature made the videos more interesting.”

- “It was interesting to look forward to what video would be put forth each week.”

Another aspect of inquiry arousal that students mentioned was the applied activity of searching the quiz’s HTML source code for a hidden green key. Students cited this activity as relevant to the objective of learning to code, which aligns with QOLT section one. One student took it a step further, recommending more activities that build HTML skills while playing on the spy theme of the course:

- “I liked looking in the source code for the green key.”

- “While the assignments, discussions, and quizzes were taken seriously, there was an element of fun to it (like the green key).”

- “The activity where we had to look at the source code was a good example of relevant tasks, b/c that’s something we actually have to do [in html coding].”

- “[I] felt like there was a disconnect between the spy elements and the work I was actually doing. Like, quick example, what if you acted like the spy was ruining all your web pages by altering the code, so you sent me the damaged HTML file to find what went wrong, or the spy removed the images, so I had to put them back in, or the spy stole a whole page, and I had to code it from scratch.”

The responses in this section speak to the impact that inquiry arousal had on engaging students in relevant tasks, and to how the gamification aspects of the course helped direct student attention to the importance of these activities.

Variability

This theme centers on design elements intended to maintain student attention. This was a difficult area to address, because sustaining attention requires conveying relevance once the initial novelty of the gamification elements has worn off. Students responded to this theme by recognizing the engagement inherent in finding secret clues each week:

- “I liked that the secret clues were also helpful to the overall project, that encouraged me to pay more attention to them.”

- “Looking for clues was great.”

- “One thing that I found very useful about the gamification aspects of this course is that it helped make sure I was not just glazing over the lesson content. I have found with other online courses [that] my mind starts to wander as I read the course content or unintentionally skip over content. But when looking for secret clues, it helped me make sure I was accessing all the content and not skipping over anything.”

The secret clues (Easter eggs) were purposely designed to encourage sustained attention while providing relevance. Offering tips on the weekly case studies within the context of the spy theme seemed to work well. It was also encouraging to see a student report that the existence of the clues became a signal to be attentive while engaging with course content. This effect was not intended in the design, but it was a welcome result. The bonus levels and overall reactions to gamification also fit well into the theme of variability:

- “I enjoyed the bonus levels added after some of the modules. They were fun, but I liked specifically that it was fun AND relevant.”

- “I thought the gamification experience was quite fun! This was actually my first time experiencing a ‘gamified’ classroom, and I wish more of my instructors had tried to implement gamification into their courses.”

- “Review activities like [bonus levels] made it seems like it’s less of a class, and more fun. Plus, it reinforced the concepts nicely.”

- “At first the gamification was pretty exciting and fun. It motivated me to spend more time in the course. However, the novelty kind of wore off part way through the semester. I think it is hard to maintain that type of motivation over several months.”

These final comments show that students valued gamification elements that were not only fun but also framed the coursework as relevant. The last comment points to the challenge of using a novelty like gamification to engage students across a 15-week semester. The intention was that students would initially find extrinsic value in the gamified content and then, through triggered interest development, shift toward intrinsic value through relevant activities. This shift did not appear to occur for all of the students in the course.

Discussion and Conclusion

This design case contributes to the emerging body of literature on engaging digital native students with gamified instruction (de Byl, 2012; Kiryakova, et al., 2014; Özer, et al., 2018; Annansingh, 2018) and provides an example of a motivational design strategy created to improve student engagement. It also offers instructional designers and instructors an evidence-based framework for implementing gamification in higher education online courses. As the instructional designer and instructor for this course, I found that the design and facilitation of a gamified online class could be an effective way to engage students.

Similar to studies on student perceptions of gamification in online courses (Leong & Luo, 2011; O’Donovan, et al., 2013; Jacobs, 2016), this design case revealed that students had an overall favorable view of the gamification elements of the course. For course quality improvement based on the QOLT evaluation, emphasis was placed on sections one, four, six, and eight, which address relevant content, student engagement, media elements, and content accessibility. Revisions made in response to the QOLT instrument’s recommendations improved the scores for each of these sections relative to the initial analysis, which raised the overall course quality score. Additionally, students’ idiographic responses indicated that the videos and relevant activities in particular became a focal point for student engagement, which supports the instructional emphasis placed on these resources.

Implementing gamification elements in a course and providing relevant learning opportunities with autonomy-support is appealing to digital native learners (Mohr & Mohr, 2017), and gamification appears to be an engaging way to gain student attention. In this design case, students responded favorably to the inclusion of gamification in the course and to its impact on the overall learning experience, which is consistent with related work on this topic (Prensky, 2001; Palfrey & Gasser, 2008). Idiographic responses also indicate that the gamification elements had a positive impact on perceptual arousal, inquiry arousal, and variability in gaining student attention. Students indicated that additional scaffolding for the gamification would be helpful, and recommended adding or adapting relevant learning activities that directly relate to the spy theme and overall course narrative.

Perceptual Arousal

The gamification elements were added in part to capture student attention through novelty, which can be used to trigger initial interest in the four-phase model of interest development. Overall, student narratives indicated that the gamification elements were interesting and fun, and they initially appeared to engage students in the course. However, while the gamified aspects of the course caught their attention, some students also indicated that they were somewhat confused by this new approach to an online course in higher education. Students suggested that this confusion could be mitigated with additional scaffolding in the syllabus and the introduction module.

Inquiry Arousal

This theme was approached by focusing on videos and media elements to improve the course design (as recommended by the QOLT analysis) and to engage students in relevant activities that promote inquiry. Student narratives indicated that these videos drew students into the gamified theme while also introducing course content. Overall, students responded positively to the quiz that required them to apply the skill of searching through a webpage’s HTML code to find a hidden access code. Students reported that this activity was not only relevant to the course content but also engaged them in the gamified spy theme. One student in particular felt a disconnect between the case studies and the spy theme, and recommended more applied activities similar to finding the hidden access code. This comment was notable because, despite perceiving a disconnect in some assignments, the student was open to seeing more assignments that played into the gamified theme. Moreover, the student offered a very specific example, which suggests an acceptance of gamification as a tool for student engagement.

Variability

The concept of providing variability to maintain student attention was a concern, as the novelty of the gamification elements could wear off and students could lose interest. However, responses indicated that the secret clues (Easter eggs) resonated with students. An unintended result was that the secret clues encouraged students to pay closer attention to content so that they would not miss any clues. This aspect of the secret clues also connected well with the gamified spy theme of the course. Students further indicated that the bonus levels provided a degree of variability and engagement throughout the semester. As expected, some feedback confirmed that the initial novelty and excitement of gamification wore off over the semester.

Recommendations

According to Armstrong:

Gamification in [online education] is awaiting those who are willing to explore, experiment, and iterate – and it’s these trail-blazers who are likely to find themselves in the best position to meet the evolving needs of an ever-increasing population of digital native students (Armstrong, 2013, p. 256).

We accordingly affirm that, in order to create more robust and clear design strategies for gamified courses (Mora, et al., 2015), future iterations of this and other online courses will benefit from the designerly ways of knowing, the course structural description, and the rich student feedback provided by this design case (Könings, et al., 2014).

Instructors

This design case speaks to the role the instructor plays in developing relevant assignments, providing timely and engaging media elements, and providing scaffolding. Instructors should commit to engaging collaboratively with instructional designers in the backward-design process of course development, which leads to a better understanding of intentional teaching (Linder, et al., 2014). Instructors should also acknowledge that a gamified course will require tweaks and honing through an iterative process of intentional design from semester to semester (Cameron, 2009). This requires gathering and implementing student recommendations for improvement. In this design case, students identified a need for additional scaffolding and more relevant assignments.

It is recommended that instructors consider how best to support digital native learners by providing problem-based activities (Selwyn, 2009) with constructive, formative feedback. One way to accomplish this is for instructors to consider how media elements and digital communication tools can be used more effectively to bridge the generational gap with these learners. At a minimum, instructors can work with instructional designers to learn the communication features available within or outside of the LMS. One emerging and innovative approach is the use of gamified dashboards that draw on learning analytics to give students immediate feedback on their performance on assignments and quizzes (de Freitas, et al., 2017).

Finally, instructors should use their content expertise to identify relevant assignments, and work with instructional designers to incorporate these assignments into a gamification design strategy in the LMS. These types of gamified learning activities have been found to produce positive effects on the knowledge acquisition and engagement of digital native learners (Ibáñez, et al., 2014). Instructors with an interest in student success are essential in the development and facilitation of teaching in gamified learning environments.

Instructional Designers

This design case speaks to the role of the instructional designer as an advocate for the student in working with the instructor (Hopper & Sun, 2017), both in assembling autonomy-supportive learning materials and in getting instructors to buy into the educational viability of gamified problem-solving activities for digital native learners (Gros, 2015). Könings, Seidel, and van Merriënboer (2014) describe improving congruence between the perspectives of students and those of instructional designers and instructors as participatory design. Such structured collaboration can improve the quality of learning within the LMS.

It is recommended that instructional designers teach instructors and serve as advocates for innovative approaches and evidence-based instructional design methods. These efforts include providing autonomy-support to instructors by teaching them how to facilitate gamified learning experiences within the LMS. This process can be described as faded scaffolding, in which instructional supports are gradually removed as the learner’s expertise in a specific teaching strategy or skill improves (Clark & Feldon, 2005). This concept is relevant not only to online learning in general but also to gamified instruction specifically, as “scaffolding in games is used to bridge the gap between the player’s current skills and those needed to be successful . . . [and] proper scaffolding provides a satisfying game experience for players” (Kao, et al., 2017, p. 296). It is therefore unsurprising that students in this design case recommended additional scaffolding. However, instructional designers must also keep in mind that some types of scaffolding, or too much scaffolding in general, can become barriers to learning (Sun, et al., 2011). Instructional designers must also be prepared to gather student feedback and to improve the design of gamified courses iteratively over multiple offerings of a course. This design case illustrates that instructional designers can and should play a crucial role in preparing and designing instruction for gamified learning environments.

Future Directions

Based on the findings of this design case, future studies on designing online courses for digital native students will explore the use of scaffolding and autonomy-support in different formats, including, but not limited to, learner preference, self-directed learning, and student choice. Additionally, our findings on the implementation of relevant assignments will lead to exploring how to make online discussions more relevant and how to engage students through scaffolding and autonomy-support using Bloom’s revised taxonomy.

References

Allen, I. E., & Seaman, J. (2016). Online Report Card: Tracking Online Education in the United States. Babson Survey Research Group.

Annansingh, F. (2018). An Investigation Into the Gamification of E-Learning in Higher Education. In Information Resource Management Association (Ed.), Gamification in Education: Breakthroughs in Research and Practice (pp. 174-190). Hershey, PA: IGI Global.

Armstrong, D. (2013). The new engagement game: the role of gamification in scholarly publishing. Learned Publishing, 26(4), 253-256.

Banfield, J., & Wilkerson, B. (2014). Increasing student intrinsic motivation and self-efficacy through gamification pedagogy. Contemporary Issues in Education Research (Online), 7(4), 291.

Bazeley, P. (2009). Analysing qualitative data: More than ‘identifying themes’. Malaysian Journal of Qualitative Research, 2(2), 6-22.

Boling, E. (2010). The need for design cases: Disseminating design knowledge. International Journal of Designs for Learning, 1(1).

Bowen, J. A., & Watson, C. E. (2016). Teaching naked techniques: A practical guide to designing better classes. John Wiley & Sons.

Cameron, L. (2009). How learning design can illuminate teaching practice.

Chan, S. (2010). Applications of andragogy in multi-disciplined teaching and learning. Journal of Adult Education, 39(2), 25.

Chapman, J. R., & Rich, P. (2015, January). The Design, Development, and Evaluation of a Gamification Platform for Business Education. In Academy of Management Proceedings (Vol. 2015, No. 1, p. 11477). Academy of Management.

Chen, S. J. (2014). Instructional design strategies for intensive online courses: An objectivist-constructivist blended approach. Journal of interactive online learning, 13(1).

Chen, P. H., Teo, T., & Zhou, M. (2016). Relationships between digital nativity, value orientation, and motivational interference among college students. Learning and Individual Differences, 50, 49-55.

Chickering, A. W., & Gamson, Z. F. (1987). Seven principles for good practice in undergraduate education. AAHE bulletin, 3, 7.

Clark, R. E., & Feldon, D. F. (2005). Five common but questionable principles of multimedia learning. The Cambridge handbook of multimedia learning, 6.

Cross, N. (1982). Designerly ways of knowing. Design studies, 3(4), 221-227.

California State University. (2013). Quality online learning and teaching evaluation instrument. Retrieved from http://courseredesign.csuprojects.org/wp/qolt-nonawards-instruments/.

de Byl, P. (2012). Can digital natives level-up in a gamified curriculum. Future challenges, sustainable futures. Ascilite, Wellington, 256-266.

de Freitas, S., Gibson, D., Alvarez, V., Irving, L., Star, K., Charleer, S., & Verbert, K. (2017). How to use gamified dashboards and learning analytics for providing immediate student feedback and performance tracking in higher education. In Proceedings of the 26th International Conference on World Wide Web Companion (pp. 429-434). International World Wide Web Conferences Steering Committee.

Deterding, S., Dixon, D., Khaled, R., & Nacke, L. (2011, September). From game design elements to gamefulness: defining gamification. In Proceedings of the 15th international academic MindTrek conference: Envisioning future media environments (pp. 9-15). ACM.

Domínguez, A., Saenz-de-Navarrete, J., De-Marcos, L., Fernández-Sanz, L., Pagés, C., & Martínez-Herráiz, J. J. (2013). Gamifying learning experiences: Practical implications and outcomes. Computers & Education, 63, 380-392.

Dondlinger, M. (2015). Games & Simulations for Learning: Course Design Case. International Journal of Designs for Learning, 6(1), 54-71. Retrieved from http://scholarworks.iu.edu/journals/index.php/ijdl/.

Dousay, T. A. (2014). Multimedia Design and Situational Interest: A Look at Juxtaposition and Measurement. In Educational Media and Technology Yearbook (pp. 69-82). Springer International Publishing.

Gagné, M., & Deci, E. L. (2005). Self‐determination theory and work motivation. Journal of Organizational behavior, 26(4), 331-362.

Gray, C. M., Seifert, C. M., Yilmaz, S., Daly, S. R., & Gonzalez, R. (2016). What is the content of “design thinking”? Design heuristics as conceptual repertoire. International Journal of Engineering Education, 32.

Gros, B. (2015). Integration of digital games in learning and e-learning environments: Connecting experiences and context. In Digital Games and Mathematics Learning (pp. 35-53). Springer, Dordrecht.

Gunter, G., Kenny, R. F., & Vick, E. H. (2006). A case for a formal design paradigm for serious games. The Journal of the International Digital Media and Arts Association, 3(1), 93-105.

Hamzah, W. A. F. W., Ali, N. H., Saman, M. Y. M., Yusoff, M. H., & Yacob, A. (2014, September). Enhancement of the ARCS model for gamification of learning. In User Science and Engineering (i-USEr), 2014 3rd International Conference on (pp. 287-291). IEEE.

Hanus, M. D., & Fox, J. (2015). Assessing the effects of gamification in the classroom: A longitudinal study on intrinsic motivation, social comparison, satisfaction, effort, and academic performance. Computers & Education, 80, 152-161.

Helsper, E. J., & Eynon, R. (2010). Digital natives: where is the evidence? British educational research journal, 36(3), 503-520.

Hidi, S. (2006). Interest: A unique motivational variable. Educational research review, 1(2), 69-82.

Hidi, S., & Renninger, K. A. (2006). The four-phase model of interest development. Educational psychologist, 41(2), 111-127.

Huang, H. M. (2002). Toward constructivism for adult learners in online learning environments. British Journal of Educational Technology, 33(1), 27-37.

Ibáñez, M. B., Di-Serio, A., & Delgado-Kloos, C. (2014). Gamification for engaging computer science students in learning activities: A case study. IEEE Transactions on learning technologies, 7(3), 291-301.

Jacobs, J. A. (2016). Gamification in an Online Course: Promoting student Achievement through Game-Like Elements (Doctoral dissertation, University of Cincinnati).

Jagoda, P. (2014). Gaming the humanities. differences, 25(1), 189-215.

Jang, H., Reeve, J., & Deci, E. L. (2010). Engaging students in learning activities: It is not autonomy support or structure but autonomy support and structure. Journal of educational psychology, 102(3), 588.

John, R. (2014). Canvas LMS Course Design. Packt Publishing Ltd.

Kahn, P., Everington, L., Kelm, K., Reid, I., & Watkins, F. (2017). Understanding student engagement in online learning environments: the role of reflexivity. Educational Technology Research and Development, 65(1), 203-218.

Kao, G. Y. M., Chiang, C. H., & Sun, C. T. (2017). Customizing scaffolds for game-based learning in physics: Impacts on knowledge acquisition and game design creativity. Computers & Education, 113, 294-312.

Keller, J. M. (1987). The systematic process of motivational design. Performance+ Instruction, 26(9-10), 1-8.

Keller, J. M. (1999). Using the ARCS motivational process in computer‐based instruction and distance education. New directions for teaching and learning, 1999(78), 37-47.

Keller, J. M. (2010). What is motivational design? In Motivational Design for Learning and Performance (pp. 21-41). Springer US.

Keller, J., & Suzuki, K. (2004). Learner motivation and e-learning design: A multinationally validated process. Journal of educational Media, 29(3), 229-239.

Khalid, N. (2017). Gamification and motivation: A preliminary survey. 4th international research management & innovation conference (IRMIC 2017).

Kim, J. T., & Lee, W. H. (2015). Dynamical model for gamification of learning (DMGL). Multimedia Tools and Applications, 74(19), 8483-8493.

Kiryakova, G., Angelova, N., & Yordanova, L. (2014). Gamification in education. Proceedings of 9th International Balkan Education and Science Conference.

Könings, K. D., Seidel, T., & van Merriënboer, J. J. (2014). Participatory design of learning environments: integrating perspectives of students, teachers, and designers. Instructional Science, 42(1), 1-9.

Lee, E., Pate, J. A., & Cozart, D. (2015). Autonomy support for online students. TechTrends, 59(4), 54-61.

Legler, N., & Thurston, T. (2017). About This Issue. Journal on Empowering Teaching Excellence, 1(1), 1.

Leong, B., & Luo, Y. (2011). Application of game mechanics to improve student engagement. In Proceedings of International Conference on Teaching and Learning in Higher Education.

Linder, K. E., Cooper, F. R., McKenzie, E. M., Raesch, M., & Reeve, P. A. (2014). Intentional teaching, intentional scholarship: Applying backward design principles in a faculty writing group. Innovative Higher Education, 39(3), 217-229.

Magnifico, A. M., Olmanson, J., & Cope, B. (2013). New Pedagogies of Motivation: reconstructing and repositioning motivational constructs in the design of learning technologies. E-Learning and Digital Media, 10(4), 483-511.

Margaryan, A., Littlejohn, A., & Vojt, G. (2011). Are digital natives a myth or reality? University students’ use of digital technologies. Computers & education, 56(2), 429-440.

Rosen, L. D. (2010). Rewired: Understanding the I-generation and the way they learn. New York, NY: Palgrave Macmillan.

Mohr, K. A. & Mohr, E. S. (2017). Understanding Generation Z Students to Promote a Contemporary Learning Environment. Journal on Empowering Teaching Excellence, 1(1), 9.

Mora, A., Riera, D., Gonzalez, C., & Arnedo-Moreno, J. (2015, September). A literature review of gamification design frameworks. In Games and virtual worlds for serious applications (VS-Games), 2015 7th international conference on (pp. 1-8). IEEE.

Muntean, C. I. (2011, October). Raising engagement in e-learning through gamification. In Proc. 6th International Conference on Virtual Learning ICVL (No. 42, pp. 323-329).

Nevin, C. R., Westfall, A. O., Rodriguez, J. M., Dempsey, D. M., Cherrington, A., Roy, B., Patel, M., & Willig, J. H. (2014). Gamification as a tool for enhancing graduate medical education. Postgraduate medical journal, postgradmedj-2013.

O’Donovan, S., Gain, J., & Marais, P. (2013). A case study in the gamification of a university-level games development course. Proceedings of South African Institute for Computer Scientists and Information Technologists Conference (pp. 245–251).

Özer, H. H., Kanbul, S., & Ozdamli, F. (2018). Effects of the Gamification Supported Flipped Classroom Model on the Attitudes and Opinions Regarding Game-Coding Education. International Journal of Emerging Technologies in Learning (iJET), 13(01), 109-123.

Paas, F., Tuovinen, J., van Merriënboer, J., & Darabi, A. (2005). A motivational perspective on the relation between mental effort and performance: Optimizing learner involvement in instruction. Educational Technology Research and Development, 53(3), 25–34.

Park, K. (2016). A Development of Instructional Design Model Based on the Nature of Design Thinking. Journal of Educational Technology, 32(4), 837-866.

Prensky, M. (2001). Digital natives, digital immigrants. On the Horizon, 9(5), 1–6.

Reeve, J. (2002). Self-determination theory applied to educational settings. In E. L. Deci & R. M. Ryan (Eds.), Handbook of self-determination research (pp. 183-203). Rochester, NY: University of Rochester Press.

Rickes, P. C. (2016). Generations in flux: how gen Z will continue to transform higher education space. Planning for Higher Education, 44(4), 21.

Riggs, S. A., & Linder, K. E. (2016). Actively Engaging Students in Asynchronous Online Classes. IDEA Paper #64. IDEA Center, Inc.

Schnepp, J. C., & Rogers, C. (2014). Gamification Techniques for Academic Assessment.

Schraw, G., Flowerday, T., & Lehman, S. (2001). Increasing situational interest in the classroom. Educational Psychology Review, 13(3), 211-224.

Seemiller, C., & Grace, M. (2016). Generation Z goes to college. John Wiley & Sons.

Selwyn, N. (2009, July). The digital native–myth and reality. In Aslib Proceedings (Vol. 61, No. 4, pp. 364-379). Emerald Group Publishing Limited.

Shroff, R., & Vogel, D. (2010). An investigation on individual students’ perceptions of interest utilizing a blended learning approach. International Journal on E-learning, 9(2), 279-294.

Stansberry, S. L., & Haselwood, S. M. (2017). Gamifying a Course to Teach Games and Simulations for Learning. International Journal of Designs for Learning, 8(2).

Sun, C. T., Wang, D. Y., & Chan, H. L. (2011). How digital scaffolds in games direct problem-solving behaviors. Computers & Education, 57(3), 2118-2125.

Tapscott, D. (2009). Grown up digital: How the Net Generation is Changing Your World. New York, NY: McGraw-Hill.

Teo, T. (2016). Do digital natives differ by computer self-efficacy and experience? An empirical study. Interactive Learning Environments, 24(7), 1725-1739.

Thompson, P. (2013). The digital natives as learners: Technology use patterns and approaches to learning. Computers & Education, 65, 12-33.

Thurston, T. (2014, February 4). 5 Keys to Rapid Course Development in Canvas Using Custom Tools. eLearning Industry.

Urh, M., Vukovic, G., & Jereb, E. (2015). The model for introduction of gamification into e-learning in higher education. Procedia-Social and Behavioral Sciences, 197, 388-397.

Wood, L., Teras, H., Reiners, T., & Gregory, S. (2013). The role of gamification and game-based learning in authentic assessment within virtual environments. In Research and development in higher education: The place of learning and teaching (pp. 514-523). Higher Education Research and Development Society of Australasia, Inc.

Appendix A: Course Homepage

Appendix B: Course Module Page