Data Collection in Research

This chapter focuses on how researchers collect data, whether from participants, publicly available sources, secondary datasets, big data, or biological specimens.

Content:

- Accessible and Novel Data

- Data Collection Methods

- Secondary (Existing) Data Methods in Data Collection

- Data Collection Strategy

- EBP Considerations in Data Collection

- The Purpose of Big Data

Objectives:

- Identify and explain different data collection methods, including primary and secondary (existing) data methods.

- Differentiate between accessible and novel data sources in the context of research.

- Assess the advantages and limitations of using secondary data sources in research, including considerations of data quality, accessibility, and applicability to evidence-based practice (EBP).

- Identify a comprehensive data collection strategy that aligns with research objectives, includes appropriate data collection methods, and addresses potential ethical and practical considerations in data collection.

- Discuss key EBP considerations in data collection, including the importance of data integrity, reliability, validity, and ethical guidelines, and how these considerations impact the strength of evidence in research.

- Explore the purpose and implications of big data in research, including how large datasets can be leveraged to inform evidence-based decisions, predict trends, and enhance data-driven practice in healthcare.

- Demonstrate the ability to identify and integrate novel data sources into research, considering the potential challenges and benefits of using unconventional or innovative data collection methods in advancing nursing practice.

Key Terms:

Primary Data: Data collected directly from first-hand sources by the researcher for a specific purpose. Examples include surveys, interviews, and observations specifically conducted for the study.

Secondary Data: Data that was originally collected for another purpose but is used by a researcher for a new study. This includes data from existing sources like databases, government reports, and previously conducted studies.

Sampling: The process of selecting a subset of individuals from a population to participate in a study. The sample should represent the population to ensure that findings can be generalized.

Survey: A method of data collection that involves asking participants a series of questions to gather information on their attitudes, behaviors, or characteristics. Surveys can be conducted in person, by phone, online, or through paper questionnaires.

Interview: A qualitative data collection method involving direct, face-to-face, telephone, or online questioning of participants to explore their thoughts, feelings, or experiences in depth.

Observation: A method of data collection where the researcher records behaviors or events as they occur in a natural setting. This can be done overtly (participants know they are being observed) or covertly (participants are unaware).

Focus Group: A qualitative data collection method that involves guided group discussions to explore participants’ perceptions, opinions, beliefs, and attitudes about a specific topic.

Reliability: The consistency of a measurement tool or data collection method. A reliable tool will produce the same results under consistent conditions.

Validity: The extent to which a tool or data collection method measures what it is intended to measure. Validity ensures the accuracy and truthfulness of the data.

Informed Consent: A process that involves informing participants about the purpose, procedures, risks, and benefits of the study, and obtaining their voluntary agreement to participate.

Bias: A systematic error that can affect the validity of the data. Bias can occur due to the way data is collected, the sampling method, or the researcher’s influence.

Confidentiality: A principle in research that ensures that the information provided by participants is kept private and not disclosed without their consent, protecting their identity and personal information.

Introduction

Data collection is a pivotal step in the research process, as it directly impacts the accuracy, reliability, and validity of the research findings. The approach a researcher takes in gathering data is influenced by the research question, the study design, and the availability of resources. This chapter will explore how researchers differentiate between accessible and novel data, categorize various data collection methods, and discuss the role of Big Data in research.

Differentiating Accessible Data and Novel Data

When embarking on a research project, one of the first decisions a researcher must make is whether to use accessible data or collect novel data. Accessible data refers to pre-existing information that can be used for analysis without the need for new data collection. This data may come from previous studies, databases, government reports, or organizational records. The use of accessible data can save time and resources, and it is particularly valuable in secondary research, meta-analyses, and systematic reviews (Hall & Roussel, 2014).

For example, a researcher studying the long-term effects of a specific drug might use accessible data from existing clinical trial databases or electronic health records. This approach allows the researcher to analyze large volumes of data without the need to conduct new trials, which can be costly and time-consuming.

In contrast, novel data is newly collected information gathered directly from subjects or experiments specifically for the research at hand (Boswell & Cannon, 2020). This type of data collection is essential when existing data is insufficient, outdated, or irrelevant to the current research questions. Novel data is particularly important in studies requiring specific measurements, such as biophysiological data collected in vivo or in vitro.

Consider a study aiming to assess the efficacy of a new nursing intervention. The researcher might collect novel data through interviews, questionnaires, or direct observation of patient outcomes. This data would be unique to the study and provide fresh insights into the intervention’s effectiveness.

Categorizing Data Collection Methods

There are various methods of data collection, each suited to different research needs and contexts. These methods can be broadly categorized into quantitative and qualitative approaches, though many studies use a combination of both to provide a more comprehensive analysis.

Primary Data Methods in Data Collection

1. Surveys and Questionnaires: Surveys and questionnaires are commonly used in quantitative research to gather data from a large number of respondents (Houser, 2018). These tools often employ closed-ended questions, which provide specific response options, making the data easy to quantify and analyze. Open-ended questions can also be included to allow respondents to provide more detailed answers, offering qualitative insights. Even so, Wood and Ross (2011) note that questionnaires limit the range of possible replies because respondents give directed answers to prearranged questions.

Example: A researcher investigating patient satisfaction with nursing care might use a questionnaire with both closed-ended questions (e.g., rating scales) and open-ended questions (e.g., “Please describe any areas where you felt the care could be improved.”).

2. Interviews: Interviews are a versatile data collection method that can be structured, semi-structured, or unstructured, depending on the research objectives.

Structured interviews follow a predetermined set of questions, ensuring that each participant is asked the same questions in the same order. This consistency facilitates the comparison of responses across participants, making structured interviews particularly useful in studies where uniformity is essential. For example, a researcher evaluating patient satisfaction with healthcare services might use a structured interview to ensure that all participants provide feedback on the same aspects of care.

Semi-structured interviews offer more flexibility, allowing the researcher to probe deeper into certain topics based on the participant’s responses. While there is still a guiding framework of questions, the researcher can adapt the interview in real time, making this approach ideal for exploring nuanced issues. For instance, in a study on nurses’ experiences with workplace stress, a semi-structured interview could allow the researcher to explore specific stressors that emerge during the conversation, providing a richer understanding of the issue.

Unstructured interviews are the most flexible, with no predefined questions or structure. Instead, the interview is more like a conversation, allowing participants to guide the discussion. This method is particularly useful in exploratory research where the researcher seeks to understand an issue from the participant’s perspective without imposing any preconceived notions. However, the lack of structure can make it challenging to compare data across participants and requires skilled interviewers to manage the flow of conversation effectively.

In all interview methods, the researcher must be mindful of potential biases that can influence the data. Interviewer bias, where the researcher’s own beliefs or expectations affect the responses, can be mitigated by maintaining a neutral tone and being open to all answers. The mode of the interview (face-to-face, telephone, or video conference) can also influence the data collected, and researchers should choose the mode that best suits the study’s objectives and participants’ comfort.

Example: In a study on nursing staff’s perceptions of workplace safety, a researcher might conduct semi-structured interviews to explore both specific safety concerns and broader issues related to the work environment.

3. Observation: Observation is a method where the researcher systematically watches and records behaviors, events, or interactions as they occur in their natural setting. This method is particularly useful when studying phenomena that are best understood through direct experience rather than self-reported data. Observation can be either participant (where the researcher is actively involved in the group being studied) or non-participant (where the researcher remains a passive observer).

Participant observation allows the researcher to gain a deep understanding of the group’s dynamics and behaviors by becoming part of the group. This method is often used in ethnographic studies where the researcher immerses themselves in the culture or community being studied. For example, a researcher studying the daily routines of nurses in a hospital might work alongside them to observe how they manage their workload, interact with patients, and collaborate with colleagues. The insider perspective gained through participant observation can provide valuable insights, but it also carries the risk of the researcher becoming too involved and losing objectivity.

Non-participant observation is less intrusive, with the researcher observing from the sidelines without directly engaging with the group. This approach is useful when the presence of the researcher needs to be minimized to avoid influencing the behavior being studied. For instance, a researcher observing patient-staff interactions in a clinic might position themselves in a waiting area, recording how staff members greet and assist patients without participating in the interaction. Non-participant observation can provide a more objective view of behaviors, but it may also limit the researcher’s understanding of the context behind those behaviors.

The method of recording observations—whether through notes, video recordings, or checklists—depends on the study’s objectives and the type of data being collected. Detailed, descriptive notes capture the richness of the observed behaviors and interactions, while checklists may be used to quantify specific actions or events. The choice of observational method should align with the research question and the level of detail required for analysis.

Example: A researcher observing hand hygiene practices in a hospital setting might record how often and how thoroughly staff wash their hands before and after patient contact.
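When observations are recorded on a checklist, the tallies can be summarized directly. The Python sketch below assumes a hypothetical checklist in which each hand hygiene opportunity is logged with its moment (before or after patient contact) and whether hand hygiene was performed, and it computes a simple compliance rate. The field names and observations are illustrative, not a standard audit tool, and this sketch captures only frequency, not thoroughness.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Opportunity:
    """One observed hand hygiene opportunity on a hypothetical checklist."""
    staff_role: str
    moment: str        # "before contact" or "after contact"
    performed: bool    # True if hand hygiene was performed at this opportunity


def compliance_rate(observations: list[Opportunity], moment: Optional[str] = None) -> Optional[float]:
    """Share of opportunities where hand hygiene was performed (optionally for one moment)."""
    relevant = [o for o in observations if moment is None or o.moment == moment]
    if not relevant:
        return None  # nothing observed, so no rate can be computed
    return sum(o.performed for o in relevant) / len(relevant)


# Illustrative observations from one hypothetical observation session.
observations = [
    Opportunity("RN", "before contact", True),
    Opportunity("RN", "after contact", True),
    Opportunity("CNA", "before contact", False),
    Opportunity("CNA", "after contact", True),
    Opportunity("MD", "before contact", True),
    Opportunity("MD", "after contact", True),
]

print(f"Overall: {compliance_rate(observations):.0%}")                           # 5 of 6 -> 83%
print(f"Before contact: {compliance_rate(observations, 'before contact'):.0%}")  # 2 of 3 -> 67%
print(f"After contact: {compliance_rate(observations, 'after contact'):.0%}")    # 3 of 3 -> 100%
```

Reporting the rate separately for each moment shows where lapses cluster, which a single overall figure can hide.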

4. Focus Groups: Focus groups are a qualitative data collection method where a small group of participants discusses a specific topic under the guidance of a moderator. The group dynamic is a key feature of focus groups, as the interaction between participants can generate data that might not emerge in individual interviews. Focus groups are particularly useful for exploring how people think and feel about an issue and for understanding the range of perspectives that exist within a population.

In a focus group, the moderator plays a crucial role in facilitating discussion, ensuring that all participants have the opportunity to contribute, and keeping the conversation on track. The moderator must be skilled in managing group dynamics, as some participants may dominate the discussion while others may be reluctant to share their views. By creating a supportive environment, the moderator can encourage open and honest dialogue, leading to richer data.

The composition of the focus group is also critical to the quality of the data collected. Groups are typically homogeneous with regard to certain characteristics (e.g., age, gender, profession) to ensure that participants feel comfortable sharing their views. However, researchers must also consider the potential for groupthink, where participants conform to a dominant opinion rather than expressing their own views. To mitigate this, the moderator can encourage participants to share different perspectives and validate all contributions.

Focus group discussions are usually recorded and transcribed for analysis, with themes and patterns identified across the group’s responses. The interactive nature of focus groups can reveal collective opinions, norms, and beliefs that might not be evident in individual interviews. For example, a focus group of nurses discussing the implementation of a new electronic health record system might reveal shared concerns about the system’s usability that could be overlooked in a one-on-one interview.

Example: To explore patients’ experiences with telehealth services, a researcher might conduct focus groups with patients from different demographics to understand their varying perspectives and concerns.

5. Biophysiological Methods: Biophysiological methods involve the collection of biological and physiological data to understand the relationships between physiological processes and health outcomes. These methods are often used in clinical and medical research and can be classified into in vivo and in vitro techniques.

In vivo methods involve measuring physiological processes within a living organism. These measurements can include vital signs (e.g., heart rate, blood pressure), biochemical markers (e.g., blood glucose levels), or imaging techniques (e.g., MRI scans). In vivo data collection is essential for understanding how physiological processes operate in real time and under natural conditions. For example, a researcher studying the effects of stress on cardiovascular health might monitor participants’ heart rates and cortisol levels during a stressful task to observe the physiological response.

In vitro methods involve measuring biological processes outside the living organism, typically in a laboratory setting. These methods include analyzing blood samples, conducting genetic tests, or examining cell cultures. In vitro techniques allow researchers to study biological processes in a controlled environment, isolating specific variables to understand their effects. For instance, a researcher investigating the cellular response to a new drug might use in vitro techniques to observe how the drug affects cell growth and survival.

The accuracy and reliability of biophysiological data are critical for producing valid research findings. Researchers must ensure that the methods used are standardized, calibrated, and appropriate for the research question. Additionally, ethical considerations are paramount in biophysiological research, particularly when involving human subjects. Informed consent, privacy, and the potential risks to participants must be carefully managed to protect the well-being of those involved in the study.

Example: In a study on the effects of a new drug on heart rate, a researcher might use in vivo measurements of participants’ heart rates at different time intervals following drug administration.

Sensitivity and specificity are key measures of the accuracy of biophysiological and diagnostic tests. Sensitivity is a test’s ability to correctly identify people who have the condition (true positives); a highly sensitive test produces few false negatives, which makes it crucial for screening (e.g., detecting early-stage disease). Specificity is a test’s ability to correctly identify people who do not have the condition (true negatives); a highly specific test produces few false positives, which is essential for confirmatory testing (e.g., confirming a diagnosis before treatment begins). High sensitivity means fewer missed cases, while high specificity prevents unnecessary treatment. Balancing the two improves overall diagnostic accuracy and helps ensure that tests perform well in both clinical and research settings.
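Both measures come from a 2-by-2 table that compares a test’s results against a reference (gold) standard: sensitivity = TP / (TP + FN) and specificity = TN / (TN + FP). The Python sketch below applies these standard formulas to made-up counts; the numbers are illustrative only and do not come from any study.

```python
def sensitivity(tp: int, fn: int) -> float:
    """Proportion of people WITH the condition whom the test correctly flags as positive."""
    return tp / (tp + fn)


def specificity(tn: int, fp: int) -> float:
    """Proportion of people WITHOUT the condition whom the test correctly flags as negative."""
    return tn / (tn + fp)


# Hypothetical counts from comparing a new test with a reference standard.
tp, fn = 90, 10   # 100 people who truly have the condition
tn, fp = 160, 40  # 200 people who truly do not

print(f"Sensitivity: {sensitivity(tp, fn):.2f}")  # 90 / 100 -> 0.90
print(f"Specificity: {specificity(tn, fp):.2f}")  # 160 / 200 -> 0.80
```

In this made-up example the test misses few true cases but produces a fair number of false positives, the profile expected of a screening test rather than a confirmatory one.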

6. Tests and Assessments: Standardized tests and assessments are commonly used in both educational and clinical research to measure specific variables, such as knowledge, skills, or psychological states. These tools provide objective data that can be analyzed quantitatively.

Example: A researcher evaluating the impact of a nursing education program might use pre- and post-tests to measure changes in students’ knowledge and clinical skills.
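Because pre- and post-test scores come from the same students, they form paired data, and a common first step is to examine each student’s change score. The Python sketch below uses hypothetical scores to compute the mean change and a paired t statistic (the mean difference divided by its standard error). It is an illustration only: it does not compute a p-value or check the test’s assumptions, which statistical software would normally handle.

```python
import math
import statistics

# Hypothetical knowledge-test scores (0-100) for the same eight students.
pre_scores = [62, 70, 55, 80, 68, 74, 59, 65]
post_scores = [71, 78, 60, 85, 75, 80, 66, 70]


def paired_change(pre: list[float], post: list[float]) -> tuple[float, float]:
    """Return the mean within-student change and the paired t statistic."""
    if len(pre) != len(post) or len(pre) < 2:
        raise ValueError("Need at least two students with both pre- and post-test scores.")
    diffs = [after - before for before, after in zip(pre, post)]
    mean_diff = statistics.mean(diffs)
    sd_diff = statistics.stdev(diffs)  # sample SD of the change scores
    t_stat = mean_diff / (sd_diff / math.sqrt(len(diffs)))
    return mean_diff, t_stat


mean_diff, t_stat = paired_change(pre_scores, post_scores)
print(f"Mean change: {mean_diff:.1f} points, paired t = {t_stat:.2f} (df = {len(pre_scores) - 1})")
```

Analyzing change scores rather than comparing two separate group averages accounts for the fact that each student serves as their own baseline.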

Reliability and Validity in Data Collection: Quantitative vs. Qualitative Research

Quantitative Data Collection

In quantitative research, reliability ensures that data collection methods consistently produce the same results under similar conditions. A reliable survey, measurement tool, or experimental procedure should yield consistent data when repeated. For example, a digital thermometer should give the same reading for the same person under identical conditions. Reliability in data collection is strengthened through standardized protocols, well-calibrated instruments, and inter-rater consistency (when multiple researchers collect data).
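When multiple researchers collect data, inter-rater consistency is usually quantified rather than assumed. One widely used statistic for two raters assigning categorical codes is Cohen’s kappa, which compares observed agreement (p_o) with the agreement expected by chance (p_e): kappa = (p_o - p_e) / (1 - p_e). The Python sketch below implements that formula; the two observers’ yes/no codes are hypothetical.

```python
from collections import Counter


def cohens_kappa(rater_a: list[str], rater_b: list[str]) -> float:
    """Cohen's kappa for two raters coding the same items into categories."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("Both raters must code the same non-empty set of items.")
    n = len(rater_a)
    # Observed agreement: proportion of items both raters coded identically.
    p_observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Chance agreement: product of each rater's category proportions, summed over categories.
    counts_a, counts_b = Counter(rater_a), Counter(rater_b)
    categories = counts_a.keys() | counts_b.keys()
    p_expected = sum(counts_a[c] * counts_b[c] for c in categories) / n**2
    if p_expected == 1:
        raise ValueError("Kappa is undefined when chance agreement equals 1.")
    return (p_observed - p_expected) / (1 - p_expected)


# Hypothetical codes from two observers rating the same 10 events ("yes" = behavior occurred).
observer_1 = ["yes", "yes", "no", "yes", "no", "yes", "yes", "no", "yes", "yes"]
observer_2 = ["yes", "no", "no", "yes", "no", "yes", "yes", "yes", "yes", "yes"]

kappa = cohens_kappa(observer_1, observer_2)
print(f"Cohen's kappa: {kappa:.2f}")  # 80% observed vs. 58% chance agreement -> 0.52
```

Raw percent agreement (80% here) overstates consistency when one category is common; kappa discounts the agreement two raters would reach by chance alone.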

Validity, in quantitative research, refers to whether the data collection method accurately measures what it intends to measure. For instance, a blood pressure cuff is valid for measuring blood pressure but not for assessing stress levels. Evidence for an instrument’s validity commonly includes content validity (covering all aspects of a concept), construct validity (measuring the intended variable), and criterion validity (agreeing with an established gold standard). Poorly designed surveys, inaccurate measurement tools, or biased sampling techniques can reduce validity, leading to misleading conclusions.

Qualitative Data Collection

In qualitative research, reliability (often called dependability) ensures that data collection methods are consistent and well-documented, allowing others to follow the same approach and obtain similar findings. Since qualitative data collection involves interviews, observations, and open-ended surveys, reliability depends on structured interview guides, detailed field notes, and consistent questioning techniques. Ensuring transparency in how data is collected and recorded helps improve dependability.

Validity (or trustworthiness) in qualitative data collection ensures that the collected data accurately reflects participants’ true experiences, thoughts, and emotions. Unlike quantitative validity, which focuses on numerical accuracy, qualitative validity is enhanced through credibility (accurate representation of participants’ views), transferability (applicability to other contexts), and confirmability (ensuring data is not influenced by researcher bias). Methods such as member checking (having participants review transcripts or preliminary findings for accuracy), triangulation (using multiple sources or methods), and thick description (providing rich, contextual detail) strengthen the validity of qualitative data collection.

While quantitative research emphasizes precision and repeatability in data collection, qualitative research focuses on depth, authenticity, and consistency in capturing human experiences. Both require careful planning to ensure data is accurate, meaningful, and free from bias.

Ethical Dilemma Example

During a study on patient experiences in a hospital setting, researchers plan to collect primary data through in-depth interviews with patients undergoing treatment for chronic illnesses. Some patients begin sharing highly personal and sensitive information that goes beyond the scope of the study, including details about other individuals not involved in the research. Although the researchers have obtained informed consent and assured participants of confidentiality, the inclusion of this unsolicited sensitive information raises concerns about privacy and the ethical handling of data that was not anticipated. The researchers must navigate the ethical challenge of respecting the participants’ disclosures while ensuring that their data collection methods do not inadvertently harm the privacy or rights of others.

Secondary (Existing) Data Methods in Data Collection

Secondary data refers to data that was collected by someone else for a different purpose but can be repurposed for a new research question. This type of data is widely used in research due to its cost-effectiveness and accessibility. Secondary data can include a range of sources, such as census data, administrative records, previous research studies, and data from surveys or clinical trials.

One of the main advantages of using secondary data is that it can provide researchers with large datasets that would be difficult or impossible to collect on their own. For example, a researcher studying the social determinants of health might use census data to analyze the relationship between income levels and access to healthcare services. Secondary data also allows researchers to conduct longitudinal studies by analyzing data collected over time, providing insights into trends and changes within a population.

However, there are also challenges associated with secondary data. Researchers must critically assess the quality and relevance of the data to ensure it is suitable for their study. Issues such as outdated data, missing information, and differences in data collection methods can impact the validity of the research findings. Additionally, the researcher has no control over how the data was originally collected, which can limit the ability to tailor the data to the specific research question.

Despite these challenges, secondary data remains a valuable resource in research, particularly in fields like epidemiology, sociology, and economics, where large-scale data is essential for analysis. By carefully selecting and analyzing secondary data, researchers can uncover new insights and contribute to the existing body of knowledge without the need for extensive primary data collection.

Systematic Analysis in Data Collection

Systematic analysis is a rigorous form of secondary data analysis that follows a comprehensive, structured process to ensure the validity and reliability of research findings. This method is particularly important in systematic reviews and meta-analyses, where researchers synthesize data from multiple studies to draw broader conclusions.

Systematic Reviews: In systematic reviews, researchers follow a predefined protocol to identify, evaluate, and summarize the evidence from relevant studies on a specific research question. The process involves a thorough literature search, the selection of studies based on inclusion and exclusion criteria, and the assessment of the quality of the evidence. By systematically reviewing the existing literature, researchers can provide a clear and unbiased summary of the current state of knowledge on a topic.

Meta-Analyses: Meta-analysis is a statistical technique used within systematic reviews to combine the results of multiple studies. By pooling data from different studies, meta-analysis increases the statistical power and provides a more precise estimate of the effect size. For example, a meta-analysis of randomized controlled trials on the effectiveness of a particular drug can provide stronger evidence of its efficacy than any single study alone. The systematic nature of this approach minimizes bias and helps ensure that the conclusions drawn are based on the best available evidence.
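One common way to pool results is the fixed-effect, inverse-variance method, in which each study’s effect size is weighted by 1 / SE², so more precise studies count more. The Python sketch below shows that calculation on three hypothetical trials. It is a simplified illustration: it ignores between-study heterogeneity, which random-effects models (also widely used) are designed to handle.

```python
import math


def fixed_effect_pool(effects: list[float], std_errors: list[float]) -> tuple[float, float, tuple[float, float]]:
    """Pool study effect sizes with inverse-variance (fixed-effect) weights."""
    weights = [1 / se**2 for se in std_errors]  # precise studies (small SE) weigh more
    total_weight = sum(weights)
    pooled = sum(w * y for w, y in zip(weights, effects)) / total_weight
    pooled_se = math.sqrt(1 / total_weight)     # never larger than the smallest study SE
    ci_95 = (pooled - 1.96 * pooled_se, pooled + 1.96 * pooled_se)
    return pooled, pooled_se, ci_95


# Hypothetical standardized mean differences and standard errors from three trials.
effects = [0.30, 0.45, 0.20]
std_errors = [0.10, 0.15, 0.20]

pooled, pooled_se, (lower, upper) = fixed_effect_pool(effects, std_errors)
print(f"Pooled effect: {pooled:.2f} (SE {pooled_se:.3f}), 95% CI {lower:.2f} to {upper:.2f}")
```

The pooled standard error is smaller than that of any single trial, which is the sense in which pooling yields a more precise estimate of the effect size.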

Conducting a systematic analysis requires meticulous attention to detail. Researchers must carefully document each step of the process, from the search strategy used to identify relevant studies to the criteria for selecting studies for inclusion. Transparency in this process allows other researchers to replicate the study, enhancing the reliability of the findings. Additionally, the use of standardized tools and frameworks, such as the Cochrane Handbook for Systematic Reviews of Interventions, helps ensure that the analysis is conducted rigorously and consistently.

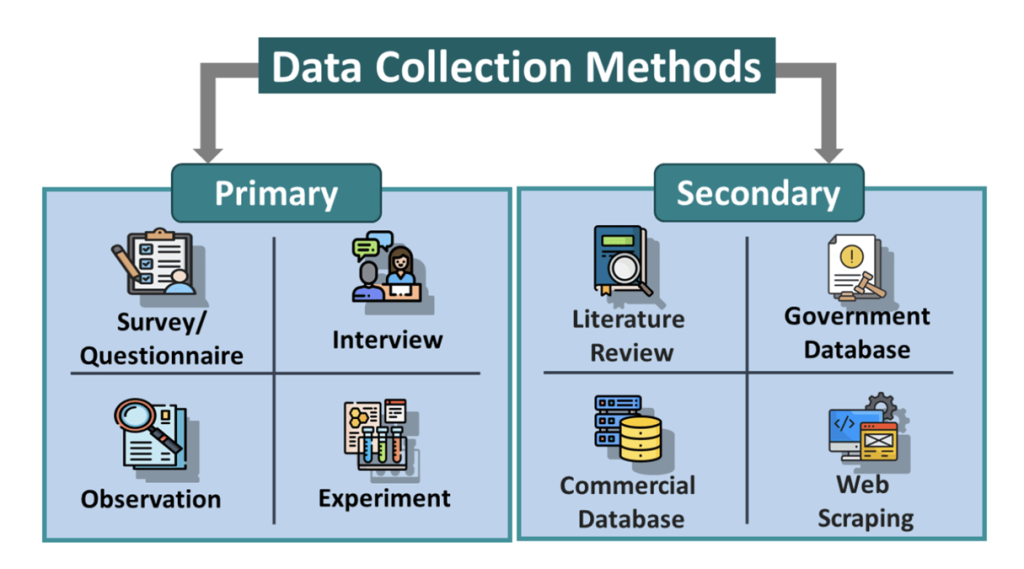

Above Figure: Data Collection Methods. Desai, S. (n.d.). https://www.educba.com/data-collection-methods/

| Data Collection Method | Pros | Cons |
| --- | --- | --- |
| Surveys & Questionnaires | Cost-effective, quick, allows for large sample sizes, can collect both qualitative and quantitative data. | May suffer from low response rates, potential for biased or inaccurate self-reported data. |
| Interviews (Structured, Semi-Structured, Unstructured) | Provides in-depth, detailed insights, allows for clarification of responses, useful for exploring complex topics. | Time-consuming, requires trained interviewers, potential for interviewer bias. |
| Observations (Participant & Non-Participant) | Captures real-time behaviors in a natural setting, avoids reliance on self-reports. | Observer bias may occur, can be intrusive, may not explain underlying motivations. |
| Focus Groups | Encourages discussion, reveals group dynamics and shared experiences, provides rich qualitative data. | Risk of groupthink, dominant participants may overshadow others, may not represent the broader population. |
| Biophysiological Measures (In Vivo and In Vitro) | Provides objective, reliable data, minimizes self-report bias, essential for clinical and medical research. | Requires specialized equipment, ethical concerns regarding invasiveness, may not capture social or psychological factors. |
| Tests & Assessments | Standardized, reliable for measuring knowledge, skills, or psychological traits, allows for statistical comparisons. | May not capture broader context or individual experiences, potential for test anxiety affecting results. |
| Social Media Data Collection | Access to large, diverse populations, cost-effective, allows real-time trend analysis. | Ethical concerns about privacy and consent, data may be biased or misleading due to platform algorithms. |
| Secondary Data (Existing Databases, Records, Literature Reviews) | Saves time and resources, allows analysis of large datasets, useful for longitudinal studies. | Limited control over data quality, potential for outdated or incomplete information, may not fully align with research objectives. |
| Systematic Reviews & Meta-Analyses | Synthesizes findings from multiple studies, strengthens evidence base, reduces individual study bias. | Time-consuming, requires rigorous methodology, may be limited by the quality of included studies. |

Achieving the Data Collection Strategy

Polit and Beck (2021) emphasize that researchers should identify the most relevant data to answer the research question, outline the characteristics of the sample, implement strategies to manage extraneous variables, assess potential biases, account for subgroup influences, and ensure data integrity by checking for any manipulation.

Before beginning data collection, researchers must have a clear understanding of how data will be gathered, measured, and analyzed. Proper planning ensures that the data collected is relevant, accurate, and appropriate for answering the research question. Failing to establish clear measurement criteria beforehand can lead to inconsistent, incomplete, or unusable data, ultimately compromising the study’s validity.

Knowing how data will be measured is especially critical in quantitative research, where variables must be precisely defined. For instance, if a study examines blood pressure changes after a nursing intervention, researchers must determine whether systolic and diastolic blood pressure readings will be recorded, what measurement device will be used, and at what time intervals data will be collected. Similarly, in qualitative research, researchers must decide how interviews, observations, or focus groups will be documented and analyzed to ensure credibility and consistency.

Additionally, researchers should understand the statistical or thematic analysis techniques required to interpret the data. Without this foresight, valuable information may be collected incorrectly, omitted, or misinterpreted. By planning ahead, researchers can ensure that their data collection methods align with study objectives, leading to reliable findings that contribute meaningful insights to the field.

Achieving an effective data collection strategy requires careful planning and consideration of several key factors. The researcher must align the data collection methods with the research question, ensuring that the chosen methods will yield the data necessary to answer the question comprehensively. This involves selecting the appropriate tools and techniques, considering the study population, and being mindful of practical constraints such as time, budget, and resources.

A well-structured data collection strategy begins with clearly operationalizing the variables, where the researcher defines how each variable will be measured or observed. For example, in a study examining patient satisfaction, the researcher might operationalize satisfaction through a combination of self-reported questionnaire responses and observational data on patient interactions with healthcare staff.

The next step is to develop a data collection plan that outlines the methods, instruments, and procedures to be used. This plan should include details such as the sampling strategy, the timing and frequency of data collection, and the steps to be taken to ensure data quality and consistency. Pre-testing or piloting the data collection instruments can help identify potential issues and refine the approach before full-scale data collection begins.
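Many studies record the operational definitions from the data collection plan in a data dictionary (codebook) so that every data collector measures variables the same way and incoming data can be checked for quality. The Python sketch below is a hypothetical example built on the blood pressure study described earlier: the variable names, field-naming convention, valid ranges, and timepoints are illustrative assumptions, not clinical recommendations. It flags missing or out-of-range values in one participant’s record.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class VariableSpec:
    """Hypothetical data-dictionary entry that operationalizes one study variable."""
    definition: str
    instrument: str
    unit: str
    valid_range: tuple[float, float]  # plausible values; anything outside is flagged
    timepoints: tuple[str, ...]       # when the variable is collected


# Illustrative plan for a blood pressure intervention study (all values are assumptions).
PLAN = {
    "systolic_bp": VariableSpec(
        definition="Seated systolic pressure, mean of two readings",
        instrument="Calibrated automated cuff",
        unit="mmHg",
        valid_range=(70, 250),
        timepoints=("baseline", "week_4", "week_8"),
    ),
    "diastolic_bp": VariableSpec(
        definition="Seated diastolic pressure, mean of two readings",
        instrument="Calibrated automated cuff",
        unit="mmHg",
        valid_range=(40, 150),
        timepoints=("baseline", "week_4", "week_8"),
    ),
}


def check_record(record: dict[str, float]) -> list[str]:
    """Return a list of data-quality problems in one participant's record."""
    problems = []
    for var, spec in PLAN.items():
        low, high = spec.valid_range
        for timepoint in spec.timepoints:
            key = f"{var}@{timepoint}"  # hypothetical naming convention for fields
            value = record.get(key)
            if value is None:
                problems.append(f"missing {key}")
            elif not low <= value <= high:
                problems.append(f"out of range {key}={value} {spec.unit}")
    return problems


record = {
    "systolic_bp@baseline": 142, "systolic_bp@week_4": 136, "systolic_bp@week_8": 300,
    "diastolic_bp@baseline": 88, "diastolic_bp@week_4": 84,
}
print(check_record(record))
```

Running a check like this during a pilot test, not only at the end of the study, helps catch unclear definitions or recording errors while they can still be corrected.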

| Step | Description | Example |
| --- | --- | --- |
| Define Research Question | Ensure that the data collection method aligns with the study’s objectives. | A study on patient satisfaction must determine whether surveys, interviews, or observations will best capture meaningful data. |
| Operationalize Variables | Clearly define how each variable will be measured or observed. | In a study on nursing interventions and blood pressure, decide whether systolic and diastolic readings will be used and at what time intervals. |
| Select Data Collection Method | Choose appropriate tools and techniques for gathering data (e.g., surveys, interviews, observations, biophysiological measures). | Researchers may use electronic health records, structured interviews, or focus groups depending on the study design. |
| Develop a Data Collection Plan | Outline sampling strategy, timing, frequency, and procedures to ensure quality and consistency. | A study on medication adherence might collect data weekly through patient-reported logs. |
| Pilot Test Instruments | Pre-test surveys, questionnaires, or measurement tools to identify issues before full-scale data collection. | Testing a patient satisfaction survey on a small group to refine unclear questions. |
| Consider Ethical Issues | Obtain informed consent, ensure privacy, and minimize risks to participants. | In clinical research, biophysiological data collection should be non-invasive or minimal-risk, and results should be kept confidential. |
| Data Storage and Management | Plan for secure data storage, backups, and access control to protect integrity and confidentiality. | Use encrypted electronic records or locked physical files for sensitive data. |
| Choose Analysis Techniques | Determine how data will be analyzed: quantitatively (statistical analysis) or qualitatively (thematic analysis). | Use SPSS for statistical analysis in quantitative research or NVivo for qualitative coding. |

Above Table: Key Components of an Effective Data Collection Strategy

Ethical considerations are also paramount in developing a data collection strategy. Researchers must obtain informed consent from participants, protect their privacy and confidentiality, and minimize any potential harm. In clinical research, this might involve ensuring that biophysiological data collection is non-invasive or poses minimal risk to participants.

Finally, the researcher must consider how the data will be managed and analyzed once collected. This includes developing a plan for data storage, ensuring that data is securely stored and backed up, and choosing the appropriate analytical techniques to answer the research question. By carefully planning and executing the data collection strategy, researchers can ensure that the data collected is robust, reliable, and fit for purpose.
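One practical safeguard for confidentiality is to replace direct identifiers, such as a medical record number, with a pseudonym before the data reach the analysis file. The Python sketch below uses a keyed hash (HMAC-SHA-256 from the standard library). The record fields, the sample identifier, and the 16-character code length are hypothetical. Pseudonymization complements, rather than replaces, encrypted storage, backups, and access control.

```python
import hashlib
import hmac
import secrets

# Hypothetical study key. In a real study it would be generated once and
# stored securely, separate from the research data.
STUDY_KEY = secrets.token_bytes(32)


def pseudonymize(participant_id: str, key: bytes = STUDY_KEY) -> str:
    """Replace a direct identifier with a stable, keyed pseudonym."""
    digest = hmac.new(key, participant_id.encode("utf-8"), hashlib.sha256)
    return digest.hexdigest()[:16]  # shortened code for the analysis file


# Hypothetical record containing a direct identifier
record = {"participant": "MRN-004512", "systolic": 138, "diastolic": 86}
safe_record = {**record, "participant": pseudonymize(record["participant"])}
print(safe_record)
```

Because the key is generated randomly each time this script runs, the pseudonyms change between runs; keeping one secured key for the whole study ensures the same participant always maps to the same code.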

Practical Application: Developing a Qualitative Study

A research team is designing a study to explore the factors influencing medication adherence among elderly patients with chronic conditions in a community health setting. They aim to collect primary data through surveys and focus group discussions to gather detailed information on patients’ behaviors, beliefs, and barriers to adherence.

Activity:

The team develops a data collection plan that includes creating structured survey questions and organizing focus groups with participants recruited from local clinics. They carefully design the questions to be clear and relevant, and they schedule sessions to accommodate the participants’ availability, ensuring they can capture a broad range of experiences and insights.

Ethical Dilemma:

During the focus group discussions, some participants begin sharing personal health information and stories that are outside the scope of the study and involve other family members who have not consented to participate. The researchers face the ethical challenge of managing these disclosures while maintaining the privacy and confidentiality of all involved, including individuals who have not provided consent.

Conclusion

This scenario highlights the importance of having a flexible yet ethically sound data collection plan that not only gathers valuable data but also anticipates and addresses potential ethical issues. Researchers must be prepared to handle sensitive disclosures appropriately, ensuring that their data collection methods protect the privacy and dignity of all individuals, whether directly involved in the study or mentioned during data collection.

Hot Tip! Clearly define your data collection timeline and stick to it. Consistent timing in data collection helps minimize variability due to external factors and ensures that your data accurately reflects the conditions you intend to study.
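As a minimal sketch of keeping collection timing consistent, the Python below builds a weekly schedule (same weekday and clock time each week) and flags sessions that drifted outside a tolerance window. The start date, four-week length, and two-hour tolerance are hypothetical choices for illustration.

```python
from datetime import datetime, timedelta


def weekly_schedule(start: datetime, weeks: int) -> list[datetime]:
    """Planned collection times: same weekday and clock time each week."""
    return [start + timedelta(weeks=i) for i in range(weeks)]


def off_schedule(planned, actual, tolerance=timedelta(hours=2)):
    """Return indices of sessions collected outside the tolerance window."""
    return [i for i, (p, a) in enumerate(zip(planned, actual)) if abs(a - p) > tolerance]


plan = weekly_schedule(datetime(2024, 3, 4, 9, 0), weeks=4)  # Mondays at 09:00
actual = [
    datetime(2024, 3, 4, 9, 15),
    datetime(2024, 3, 11, 8, 50),
    datetime(2024, 3, 18, 14, 30),  # collected in the afternoon instead
    datetime(2024, 3, 25, 9, 5),
]
print(off_schedule(plan, actual))  # [2]
```

Flagged sessions can then be documented as protocol deviations or examined for timing effects during analysis.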

Evidence-Based Practice (EBP) Considerations in Data Collection

In the context of evidence-based practice (EBP), data collection is crucial for making clinical decisions and improving patient outcomes. EBP integrates the best available research evidence with clinical expertise and patient values to guide decision-making in healthcare. As such, the data collected in EBP-related research must be of high quality, relevant, and applicable to clinical practice.

Relevance to clinical practice is a key consideration in EBP-related data collection. Researchers must ensure that the data they collect is directly applicable to the clinical questions being addressed. For example, when studying the effectiveness of a new intervention, the data collected should include outcomes that are meaningful to both patients and clinicians, such as improvements in symptoms, quality of life, or patient satisfaction.

Another important consideration is the integration of patient preferences and values into the data collection process. In EBP, patient-centered outcomes are prioritized, and researchers must ensure that the data collected reflects the experiences and preferences of the patient population. This might involve using qualitative methods, such as interviews or focus groups, to gather data on patient perspectives or incorporating patient-reported outcome measures into quantitative studies.

The timeliness of data collection is also critical in EBP. Healthcare practices and guidelines evolve rapidly, and researchers must collect and analyze data promptly to ensure that the findings remain relevant to current practice. This might involve using real-time data collection methods, such as electronic health records or mobile health technologies, to capture data as it is generated in clinical settings.

Finally, translating research findings into practice is a key goal of EBP. Researchers must consider how the data collected will be used to inform clinical guidelines, protocols, and decision-making processes. This involves ensuring the data’s quality and relevance and effectively communicating the findings to clinicians, policymakers, and other stakeholders. By aligning data collection with the principles of EBP, researchers can contribute to developing evidence-based interventions that improve patient care and outcomes.

Hot Tip! Always pilot-test your data collection instruments and procedures with a small, representative sample before full deployment. This helps identify any issues with the questions, instructions, or process, ensuring your data collection runs smoothly and yields reliable results.

The Purpose of Big Data in Research

Big Data refers to datasets so large or complex that they exceed the capabilities of traditional data processing tools. In research, Big Data can be a powerful resource for identifying patterns, trends, and associations that might not be apparent in smaller datasets. Analyzing Big Data relies on sophisticated techniques such as data mining and predictive analytics, which allow researchers to extract meaningful insights from large volumes of information.

Data mining involves exploring large datasets to identify patterns or relationships, often using algorithms and statistical models. Predictive analytics takes this a step further by using historical data to predict future outcomes (Fenush & Barry, 2017). These techniques are particularly useful in healthcare research, where Big Data from electronic health records, genomic studies, and patient monitoring systems can be analyzed to improve patient outcomes, predict disease outbreaks, or personalize treatments.

Example: A researcher using Big Data to study the prevalence of diabetes might analyze millions of electronic health records to identify trends in diagnosis, treatment, and patient outcomes across different populations. Predictive analytics could then be used to forecast the future burden of diabetes and guide public health interventions.
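The simplest version of this forecasting step is to fit a straight-line trend to historical values and extend it forward. The Python sketch below uses ordinary least squares on hypothetical yearly prevalence figures, not real data. Actual predictive analytics projects usually use richer models and account for changes in the population, so treat this only as an illustration of the idea.

```python
def fit_linear_trend(years, values):
    """Ordinary least-squares line: value = intercept + slope * year."""
    n = len(years)
    mean_x = sum(years) / n
    mean_y = sum(values) / n
    sxx = sum((x - mean_x) ** 2 for x in years)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(years, values))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


# Hypothetical prevalence (%) of diagnosed diabetes, aggregated from EHRs
years = [2018, 2019, 2020, 2021, 2022]
prevalence = [9.0, 9.2, 9.5, 9.7, 10.0]

slope, intercept = fit_linear_trend(years, prevalence)
for future_year in (2025, 2030):
    # Extrapolate the fitted line to future years
    print(future_year, round(intercept + slope * future_year, 2))
```

With these made-up figures the trend rises by 0.25 percentage points per year, so the projections are about 10.73% for 2025 and 11.98% for 2030. Extrapolating far beyond the observed years becomes increasingly unreliable.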

The Role of Social Media in Data Collection

Social media has become an increasingly valuable tool for data collection in research, offering access to large, diverse populations and real-time insights. Platforms like Facebook, Twitter, Instagram, LinkedIn, and Reddit provide researchers with the ability to collect quantitative and qualitative data through surveys, polls, sentiment analysis, and user interactions.

One of the primary advantages of using social media for data collection is its broad reach and accessibility. Researchers can engage with specific demographics that may be difficult to reach through traditional methods, such as marginalized communities, international participants, or individuals with niche interests. Additionally, social media enables cost-effective and rapid data collection, allowing researchers to gather large datasets without the expenses of in-person recruitment.

Beyond surveys and polls, researchers can use social media analytics to analyze user-generated content, including comments, hashtags, and engagement patterns. Sentiment analysis tools can assess public attitudes and opinions on healthcare issues, policies, or societal trends. For instance, researchers studying mental health awareness can analyze Twitter discussions to identify trends in public perception and stigma.
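Sentiment analysis tools vary widely, but the basic idea can be shown with a lexicon-based sketch that counts words from a positive list and a negative list in each post. The word lists and example posts below are invented for illustration. Real studies typically rely on validated sentiment lexicons or trained models, and this simple approach misses negation (such as "not helpful") and sarcasm.

```python
import re

# Tiny, hypothetical sentiment lexicon (not a validated instrument)
POSITIVE = {"support", "helpful", "hope", "better", "understanding"}
NEGATIVE = {"stigma", "ashamed", "judged", "worse", "afraid"}


def sentiment_score(post: str) -> int:
    """Positive minus negative word count; >0 leans positive, <0 negative."""
    words = re.findall(r"[a-z']+", post.lower())
    return sum(w in POSITIVE for w in words) - sum(w in NEGATIVE for w in words)


# Invented example posts
posts = [
    "Talking to my nurse was so helpful, I finally have hope",
    "I felt judged and ashamed when I asked for help #stigma",
    "Appointment is on Tuesday",
]
for post in posts:
    print(sentiment_score(post), post)  # scores: 2, -3, 0
```

Aggregating such scores over time or by topic is one way researchers track shifts in public perception, although the resulting trends are only as good as the lexicon behind them.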

However, there are ethical and reliability concerns when using social media for data collection. Issues such as informed consent, data privacy, and potential biases must be carefully managed. Researchers must follow ethical guidelines, ensuring that data collected from social media platforms is used responsibly and respects users’ privacy.

Despite these challenges, social media remains a powerful tool for modern research, offering innovative ways to collect and analyze data while reaching a wide range of participants in real time.

In summary, data collection is a critical component of the research process, with the chosen method significantly influencing the study’s outcomes. By understanding the differences between accessible and novel data, researchers can make informed decisions about the most appropriate data collection approach for their study. Additionally, the rise of Big Data offers new opportunities and challenges in managing and analyzing vast datasets, underscoring the importance of advanced data management techniques in modern research. The careful selection and implementation of data collection methods are essential for producing reliable, valid, and impactful research findings (Dearholt & Dang, 2012). Each method discussed plays a crucial role in ensuring that the data collected is both robust and relevant, ultimately contributing to the advancement of knowledge and the improvement of clinical practice.

Critical Appraisal! Data Collection Methods

- Are the data collection methods clearly described, and are they appropriate for the research objectives and study design?

- Is the sampling method used for data collection clearly defined, and does it ensure a representative sample of the population?

- Were the instruments or tools used for data collection validated and reliable?

- How were data collectors trained, and what steps were taken to minimize bias during data collection?

- Were ethical considerations, such as informed consent and confidentiality, adequately addressed in the data collection process?

- Were the data collection methods pilot-tested or pre-tested, and if so, what were the results?

- Were there any challenges or limitations reported in the data collection process, and how were they addressed?

- Did the timing and setting of data collection impact the results, and were these factors appropriately managed?

Summary Points

- Data collection is crucial in research as it directly impacts the accuracy, reliability, and validity of study findings.

- Accessible data refers to pre-existing information (e.g., previous studies, databases, government reports), while novel data is newly collected for a specific research study.

- Using accessible data can save time and resources, making it valuable for secondary research, meta-analyses, and systematic reviews.

- Novel data collection is necessary when existing data is outdated, insufficient, or does not answer the research question.

- Primary data collection methods include surveys, interviews, observations, focus groups, biophysiological measures, and tests/assessments.

- Surveys and questionnaires are commonly used in quantitative research to gather large-scale data, often using closed-ended and open-ended questions.

- Interviews can be structured, semi-structured, or unstructured, with each method offering varying levels of flexibility and depth in data collection.

- Observation methods can be participant (researcher is involved) or non-participant (researcher observes from a distance), depending on the study’s needs.

- Focus groups allow participants to discuss a topic in a guided setting, offering insights into shared beliefs, attitudes, and perceptions.

- Biophysiological data collection involves measuring biological or physiological factors through in vivo (within the body, e.g., blood pressure) or in vitro (outside the body, e.g., laboratory analysis of a blood sample) methods.

- Secondary data methods involve using pre-existing data sources like electronic health records, census data, and previous research findings.

- Systematic analysis, including systematic reviews and meta-analyses, allows researchers to combine and synthesize findings from multiple studies.

- Ethical considerations in data collection include obtaining informed consent, protecting confidentiality, and minimizing potential harm to participants.

- A well-planned data collection strategy aligns with the research question, ensures consistency, and considers resource constraints such as time and budget.

- Pilot testing data collection instruments with a small sample helps identify potential issues before full deployment.

- Big Data in research allows for the analysis of large datasets using data mining and predictive analytics to identify trends and improve decision-making.

- EBP (Evidence-Based Practice) relies on high-quality data that is relevant, timely, and applicable to clinical settings to improve patient outcomes.

- Real-time data collection methods, such as electronic health records and mobile health technologies, enhance the speed and relevance of research.

- Challenges in secondary data analysis include data quality issues, missing information, and lack of control over original data collection methods.

- The choice of data collection method should be based on research objectives, study design, and the type of data required for analysis.

References & Attribution

“Green check mark” by rawpixel licensed CC0.

Alekseyev, S., Byrne, M., Carpenter, A., Franker, C., Kidd, C., & Hulton, L. (2012). Prolonging the life of a patient’s IV: An integrative review of intravenous securement devices. MEDSURG Nursing, 21(5), 285–292.

Boswell, C., & Cannon, S. (2020). Introduction to nursing research: Incorporating evidence-based practice (5th ed.). Jones & Bartlett Learning.

Dearholt, S. L., & Dang, D. (2012). Johns Hopkins nursing evidence-based practice: Model and guidelines (2nd ed.). Sigma Theta Tau International.

Fenush, J., & Barry, R. M. (2017). Predictive analytics empower nurses: Quality care requires robust staff scheduling tools. American Nurse Today, 12(11), 26–28. https://www.myamericannurse.com/wp-content/uploads/2017/11/ant11-Focus-0n-Staffing-1030.pdf

Hall, H., & Roussel, L. (2014). Evidence-based practice: An integrative approach to research, administration, and practice. Jones & Bartlett Learning.

Houser, J. (2018). Nursing research: Reading, using, and creating evidence (4th ed.). Jones & Bartlett Learning.

Polit, D., & Beck, C. (2021). Lippincott CoursePoint enhanced for Polit’s essentials of nursing research (10th ed.). Wolters Kluwer Health.

Wagner, A. C., McShane, K. E., & Hart, T. A. (2016). A focus group qualitative study of HIV stigma in the Canadian healthcare system. The Canadian Journal of Human Sexuality, 25(1), 61–71.

Wood, M. J., & Ross-Kerr, J. C. (2011). Basic steps in planning nursing research: From question to proposal (7th ed.). Jones & Bartlett Learning.

Zadvinskis, I. M., & Melnyk, B. M. (2019). Making a case for replication studies and reproducibility to strengthen evidence-based practice. Worldviews on Evidence-Based Nursing, 16(1), 2–3. https://doi.org/10.1111/wvn.12349